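Kubernetes: Deploying EFK Log Collection and Alerting

I. Introduction

1. Logs in cloud-native environments

As software systems grow more complex, and especially once they are deployed to the cloud, logging in to each node to read each module's logs is no longer practical. It is inefficient, and for security reasons engineers often cannot be given direct access to the physical nodes. Large systems are also usually deployed as clusters: each service runs several identical Pods, every container writes its own log, and the log content alone does not tell you which Pod produced it, which makes distributed logs even harder to read.

So in the cloud era you need a solution that collects and analyzes logs. First, the logs scattered across the system have to be gathered in one central place where they are easy to inspect. Once collected, they can be used for statistical analysis, or even analyzed with big-data or machine learning methods. Traditional deployments need such a logging solution too, but this article looks at it from the cloud perspective.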

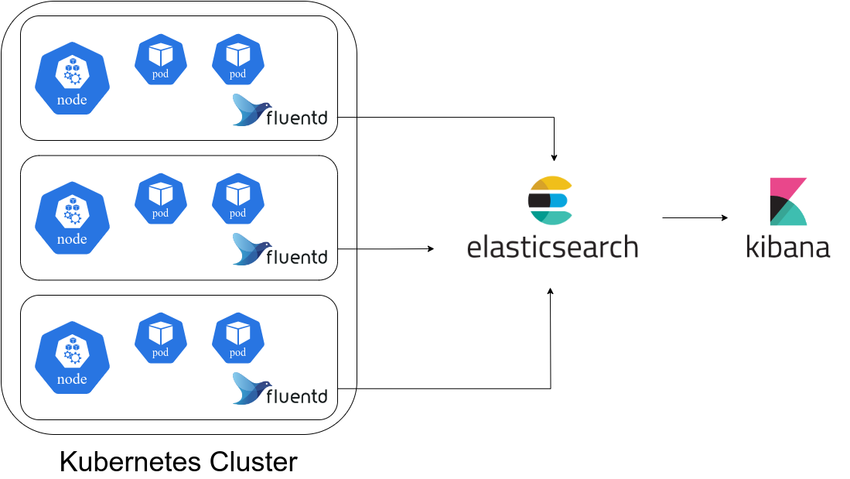

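2. Architecture

EFK stands for three components. E is Elasticsearch, a search engine that stores the logs and provides the query interface; you can think of it as the log database. F is Fluentd, which collects logs from Kubernetes: the Fluentd instance on each node watches and collects the logs on that node, processes them, and sends them to Elasticsearch. Filebeat and Logstash are alternative collectors, each with its own use. Filebeat can also run as a sidecar inside a Pod, where it mounts the same log directory as the application container and ships the logs from there to Elasticsearch. Logstash offers more features and finer-grained log processing, so it consumes more system resources. K is Kibana, a web GUI for browsing and searching the logs stored in Elasticsearch.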

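3. Elastalert alerting

Elastalert is a log alerting plugin for the ELK stack, developed in Python by Yelp. It queries the records in Elasticsearch and compares them with the defined alert rules to decide whether an alert condition is met. When a match occurs, one or more alert actions are triggered for it. Alert rules are defined in Elastalert's rules, and each rule defines one query.

How it works

Elastalert periodically queries Elasticsearch and passes the data to the rule type; the rule type defines which data needs to be queried.

When a rule matches, the match is handed to one or more alerts. Which channel is used (the alert action) depends on the rule's configuration, for example email, WeCom, Feishu, or DingTalk.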
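The cycle described above can be illustrated with a simplified model. The following Python sketch is not Elastalert's own code: `frequency_matches` and its parameters are hypothetical names that only mimic how a frequency-type rule (the type used later in this article) reports a match once `num_events` events fall inside `timeframe`.

```python
from datetime import datetime, timedelta


def frequency_matches(timestamps, num_events, timeframe):
    """Return the timestamps at which a frequency-style rule would report a match.

    A match is reported when at least `num_events` events lie inside a sliding
    window of length `timeframe`; the window is cleared after each match.
    """
    window = []
    matches = []
    for ts in sorted(timestamps):
        window.append(ts)
        # Drop events that are older than the timeframe.
        while window and ts - window[0] > timeframe:
            window.pop(0)
        if len(window) >= num_events:
            matches.append(ts)
            window.clear()
    return matches


base = datetime(2025, 8, 7, 8, 0, 0)
events = [base + timedelta(seconds=s) for s in (0, 10, 20, 300)]
hits = frequency_matches(events, num_events=3, timeframe=timedelta(minutes=1))
print(hits)
```

With `num_events=1`, as in the rule shown later, every single event counts as a match, and suppressing repeats is left to settings such as `realert`.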

II. Deployment

1. Create the local StorageClass and PersistentVolumes

[root@k8s-master EFK]# cat local-storage.yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: local-storage
  annotations:
    storageclass.kubernetes.io/is-default-class: "true" # make this the default StorageClass
provisioner: kubernetes.io/no-provisioner
volumeBindingMode: WaitForFirstConsumer
reclaimPolicy: Retain

[root@k8s-master EFK]# cat elasticsearch-data-pv.yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: data-es-cluster-0
spec:
  capacity:
    storage: 100Gi
  volumeMode: Filesystem
  accessModes:
  - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain
  storageClassName: local-storage
  local:
    path: /data/elasticsearch/data-es-cluster-0 # local storage path; make sure it exists and is mounted
  nodeAffinity:
    required:
      nodeSelectorTerms:
      - matchExpressions:
        - key: kubernetes.io/hostname
          operator: In
          values:
          - k8s-node1 # name of the specific node to use
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: data-es-cluster-1
spec:
  capacity:
    storage: 100Gi
  volumeMode: Filesystem
  accessModes:
  - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain
  storageClassName: local-storage
  local:
    path: /data/elasticsearch/data-es-cluster-1 # local storage path; make sure it exists and is mounted
  nodeAffinity:
    required:
      nodeSelectorTerms:
      - matchExpressions:
        - key: kubernetes.io/hostname
          operator: In
          values:
          - k8s-node2 # name of the specific node to use
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: data-es-cluster-2
spec:
  capacity:
    storage: 100Gi
  volumeMode: Filesystem
  accessModes:
  - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain
  storageClassName: local-storage
  local:
    path: /data/elasticsearch/data-es-cluster-2 # local storage path; make sure it exists and is mounted
  nodeAffinity:
    required:
      nodeSelectorTerms:
      - matchExpressions:
        - key: kubernetes.io/hostname
          operator: In
          values:
          - k8s-node3 # name of the specific node to use
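The PV names above appear to mirror the PVC names that the StatefulSet in the next section will request. A StatefulSet names each claim `<volumeClaimTemplate name>-<StatefulSet name>-<ordinal>`. The small Python sketch below only illustrates that naming; `statefulset_pvc_names` is a hypothetical helper, and the values `data`, `es-cluster` and `3` are taken from the StatefulSet manifest shown later.

```python
def statefulset_pvc_names(claim_template, statefulset, replicas):
    """PVCs created from a volumeClaimTemplate are named <template>-<statefulset>-<ordinal>."""
    return [f"{claim_template}-{statefulset}-{i}" for i in range(replicas)]


names = statefulset_pvc_names("data", "es-cluster", 3)
print(names)
```

A PV does not have to carry the same name as the claim it serves; binding depends on the storage class, capacity and access mode. Matching names simply make it easier to see which volume belongs to which Pod.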

2. Deploy Elasticsearch

[root@k8s-master EFK]# cat elasticsearch-svc.yaml

kind: Service

apiVersion: v1

metadata:

name: elasticsearch

namespace: kube-logging

labels:

app: elasticsearch

spec:

selector:

app: elasticsearch

clusterIP: None

ports:

- port: 9200

name: rest

- port: 9300

name: inter-node

---

kind: Service

apiVersion: v1

metadata:

name: elasticsearch-cs

namespace: kube-logging

labels:

app: elasticsearch

spec:

selector:

app: elasticsearch

type: NodePort

ports:

- port: 9200

name: rest

nodePort: 30900

[root@k8s-master EFK]# cat elasticsearch/elasticsearch-statefulset.yaml

apiVersion: apps/v1

kind: StatefulSet

metadata:

name: es-cluster

namespace: kube-logging

spec:

serviceName: elasticsearch

replicas: 3

selector:

matchLabels:

app: elasticsearch

template:

metadata:

labels:

app: elasticsearch

spec:

initContainers:

- name: fix-permissions

image: busybox

imagePullPolicy: IfNotPresent

command: ["sh", "-c", "chown -R 1000:1000 /usr/share/elasticsearch/data"]

securityContext:

privileged: true

volumeMounts:

- name: data

mountPath: /usr/share/elasticsearch/data

- name: increase-vm-max-map

image: busybox

imagePullPolicy: IfNotPresent

command: ["sysctl", "-w", "vm.max_map_count=262144"]

securityContext:

privileged: true

- name: increase-fd-ulimit

image: busybox

imagePullPolicy: IfNotPresent

command: ["sh", "-c", "ulimit -n 65536"]

securityContext:

privileged: true

containers:

- name: elasticsearch

image: docker.elastic.co/elasticsearch/elasticsearch:7.2.0

imagePullPolicy: IfNotPresent

env:

- name: cluster.name

value: k8s-logs

- name: node.name

valueFrom:

fieldRef:

fieldPath: metadata.name

- name: discovery.seed_hosts

value: "es-cluster-0.elasticsearch,es-cluster-1.elasticsearch,es-cluster-2.elasticsearch"

- name: cluster.initial_master_nodes

value: "es-cluster-0,es-cluster-1,es-cluster-2"

- name: ES_JAVA_OPTS

value: "-Xms10g -Xmx10g" # minimum and maximum heap must be equal, otherwise the "not equal to maximum heap size" error is reported

ports:

- containerPort: 9200

name: rest

protocol: TCP

- containerPort: 9300

name: inter-node

protocol: TCP

resources:

requests:

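cpu: "4" # guaranteed baseline CPU (adjust to the actual load)

memory: "12Gi" # must cover JVM heap (10Gi) + off-heap (1Gi) + system reserve (1Gi)

limits:

cpu: "8" # headroom so that burst load is not throttled

memory: "13Gi" # slightly above requests, to avoid the OOM killer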

volumeMounts:

- name: data

mountPath: /usr/share/elasticsearch/data

- name: config

mountPath: /usr/share/elasticsearch/config/elasticsearch.yml

subPath: elasticsearch.yml

volumes:

- name: config

configMap:

name: es-config

volumeClaimTemplates:

- metadata:

name: data

labels:

app: elasticsearch

spec:

accessModes: [ "ReadWriteOnce" ]

storageClassName: local-storage

resources:

requests:

storage: 100Gi

Configuration file:

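[root@k8s-app-1 Elasticsearch]# cat elasticsearch-configmap.yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: es-config
  namespace: kube-logging
data:
  elasticsearch.yml: |
    cluster.name: "docker-cluster" # cluster name; nodes that form one cluster must all use the same name
    network.host: 0.0.0.0 # bind address; 0.0.0.0 listens on all interfaces and allows remote access
    # Indexing buffer size as a percentage of the JVM heap; a larger buffer caches more writes and reduces disk writes
    indices.memory.index_buffer_size: 30%
    # Fielddata cache used for aggregations and sorting; too large a value can consume a lot of heap
    indices.fielddata.cache.size: 10%
    # Query cache size; caches query results to avoid recomputation, can be reduced for write-heavy logging
    indices.queries.cache.size: 5%
    # Search thread pool size (threads for concurrent searches); can be reduced for write-heavy workloads to save resources
    thread_pool.search.size: 10
    # Write thread pool size (threads for concurrent writes); increase it under heavy write load
    thread_pool.write.size: 5
    # Write thread pool queue size: maximum number of queued write requests; absorbs short bursts, but a very large queue can pile up memory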

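    thread_pool.write.queue_size: 2000

# Run on the nodes that host the PVs; the directories must match local.path in elasticsearch-data-pv.yaml
[root@k8s-master EFK]# mkdir -pv /data/elasticsearch/data-es-cluster-{0,1,2}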

[root@k8s-master EFK]# kubectl create ns kube-logging

[root@k8s-master EFK]# kubectl apply -f .

[root@k8s-master EFK]# kubectl get pods -n kube-logging -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

es-cluster-0 1/1 Running 0 3m42s 10.233.123.11 k8s-app-0 <none> <none>

es-cluster-1 1/1 Running 0 2m39s 10.233.123.12 k8s-app-0 <none> <none>

es-cluster-2 1/1 Running 0 2m29s 10.233.123.13 k8s-app-0 <none> <none>

Check the cluster health:

[root@k8s-master1 Elasticsearch]# kubectl -n kube-logging exec -it es-cluster-0 -c elasticsearch -- curl 127.0.0.1:9200/_cluster/health?pretty

{

"cluster_name" : "k8s-logs",

"status" : "green",

"timed_out" : false,

"number_of_nodes" : 3,

"number_of_data_nodes" : 3,

"active_primary_shards" : 13,

"active_shards" : 26,

"relocating_shards" : 0,

"initializing_shards" : 0,

"unassigned_shards" : 0,

"delayed_unassigned_shards" : 0,

"number_of_pending_tasks" : 0,

"number_of_in_flight_fetch" : 0,

"task_max_waiting_in_queue_millis" : 0,

"active_shards_percent_as_number" : 100.0

}

2.1 Create an index lifecycle management (ILM) policy

[root@k8s-master1 Elasticsearch]# kubectl -n kube-logging exec -it es-cluster-0 -c elasticsearch -- curl -X PUT "http://127.0.0.1:9200/_ilm/policy/watch-history-ilm-policy" \

-H 'Content-Type: application/json' \

-d'

{

"policy": {

"phases": {

"delete": {

"min_age": "30d",

"actions": {

"delete": {}

}

}

}

}

}

'

This creates an ILM policy named watch-history-ilm-policy. The policy says: once an index reaches a minimum age (min_age) of 30 days, run the delete action, which deletes the index.
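To make the effect of `min_age` concrete, here is a minimal Python sketch. It assumes that, without rollover, the age is counted from the index creation time. `delete_only_policy` and `delete_eligible_at` are hypothetical helpers for illustration, not part of any Elasticsearch client.

```python
import json
from datetime import datetime, timedelta, timezone


def delete_only_policy(min_age_days):
    """Build an ILM policy body that contains a single delete phase."""
    return {
        "policy": {
            "phases": {
                "delete": {
                    "min_age": f"{min_age_days}d",
                    "actions": {"delete": {}},
                }
            }
        }
    }


def delete_eligible_at(created_millis, min_age_days):
    """Earliest time the delete phase can start for an index created at created_millis."""
    created = datetime.fromtimestamp(created_millis / 1000, tz=timezone.utc)
    return created + timedelta(days=min_age_days)


body = delete_only_policy(30)
print(json.dumps(body, indent=2))
print(delete_eligible_at(0, 30).isoformat())
```

The computed time is only a lower bound: ILM evaluates policies periodically, so the actual deletion happens some time after the index becomes eligible.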

2.2 View the index lifecycle policies

[root@k8s-master1 Elasticsearch]# kubectl -n kube-logging exec -it es-cluster-0 -c elasticsearch -- curl -X GET "http://127.0.0.1:9200/_ilm/policy?pretty"

2.3 Create an index template

Key point: newly created k8s-app-* indices are bound to the ILM policy automatically.
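Which indices pick up the template is decided by the wildcard in `index_patterns`. The Python sketch below approximates that matching with `fnmatchcase` from the standard library. This is an assumption that holds for a plain `*` wildcard such as `k8s-app-*`, not a reimplementation of Elasticsearch's matcher, and `template_applies` is a hypothetical helper name.

```python
from fnmatch import fnmatchcase

INDEX_PATTERNS = ["k8s-app-*"]  # same value as index_patterns in the template of this step


def template_applies(index_name, patterns=INDEX_PATTERNS):
    """True if the index name matches any of the template's wildcard patterns."""
    return any(fnmatchcase(index_name, pattern) for pattern in patterns)


for name in ("k8s-app-nginx-2025.08.07", "k8s-app-unknown-2025.08.07", "elastalert_status"):
    print(name, template_applies(name))
```

Indices written by Elastalert itself, such as `elastalert_status`, do not match the pattern and therefore stay outside this lifecycle policy.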

[root@k8s-master1 Elasticsearch]# kubectl -n kube-logging exec -it es-cluster-0 -c elasticsearch -- curl -X PUT "http://127.0.0.1:9200/_template/k8s-app-template" -H 'Content-Type: application/json' -d'

{

"index_patterns": ["k8s-app-*"],

"settings": {

"index.lifecycle.name": "watch-history-ilm-policy",

"index.lifecycle.rollover_alias": "logs",

"number_of_shards": 1,

"number_of_replicas": 1

}

}

'

2.4 View the k8s-app-template template just created

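[root@k8s-app-1 Elasticsearch]# kubectl -n kube-logging exec -it es-cluster-0 -c elasticsearch -- curl -X GET "http://127.0.0.1:9200/_template/k8s-app-template?pretty"

2.5 Check the index lifecycle management status

To confirm that the policy and the template were applied to the indices, check that managed is true for every k8s-app-* index in the output.

[root@k8s-app-1 Elasticsearch]# kubectl -n kube-logging exec -it es-cluster-0 -c elasticsearch -- curl -X GET "http://127.0.0.1:9200/k8s-app-*/_ilm/explain?pretty"

At this point the check will probably return nothing. The indices only show up after Fluentd has been connected to ES and logs have been received. The result then looks like this: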

[root@k8s-app-1 Elasticsearch]# kubectl -n kube-logging exec -it es-cluster-0 -c elasticsearch -- curl -X GET "http://127.0.0.1:9200/k8s-app-indc-event-live-server-2025.08.07/_ilm/explain?pretty"

{

"indices" : {

"k8s-app-indc-event-live-server-2025.08.07" : {

"index" : "k8s-app-indc-event-live-server-2025.08.07",

"managed" : true,

"policy" : "watch-history-ilm-policy",

"lifecycle_date_millis" : 1754555194053,

"phase" : "new",

"phase_time_millis" : 1754555716685,

"action" : "complete",

"action_time_millis" : 1754555716685,

"step" : "complete",

"step_time_millis" : 1754555716685

}

}

}

If the indices already exist, the following command applies the watch-history-ilm-policy policy to them:

[root@k8s-app-1 Elasticsearch]# kubectl -n kube-logging exec es-cluster-0 -c elasticsearch -- curl -X PUT "http://127.0.0.1:9200/k8s-app-*/_settings" -H 'Content-Type: application/json' -d '

{

"index.lifecycle.name": "watch-history-ilm-policy",

"index.lifecycle.rollover_alias": "logs"

}'

An ordinary Deployment can use local storage as follows:

[root@k8s-master EFK]# cat data-pvc.yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: local-pv
spec:
  capacity:
    storage: 10Gi
  volumeMode: Filesystem
  accessModes:
  - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain
  storageClassName: local-storage
  local:
    path: /data/test-pvc/01 # local storage path; make sure it exists and is mounted
  nodeAffinity:
    required:
      nodeSelectorTerms:
      - matchExpressions:
        - key: kubernetes.io/hostname
          operator: In
          values:
          - k8s-master # name of the specific node to use
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: local-pvc
spec:
  storageClassName: local-storage
  accessModes:
  - ReadWriteOnce
  resources:
    requests:
      storage: 10Gi

3. Build the Fluentd image

[root@k8s-app-1 build]# cat Dockerfile
FROM quay.io/fluentd_elasticsearch/fluentd:v3.4.0
ADD fluent.conf /etc/fluent/
# Use a RubyGems mirror in China
RUN echo -e "install: --no-document\nupdate: --no-document\n:sources:\n- https://gems.ruby-china.com/" > /etc/gemrc
# Install the plugins (pay attention to the order)
RUN gem install fluent-plugin-rewrite-tag-filter --no-document \
    && gem install fluent-plugin-prometheus --no-document \
    && gem install fluent-plugin-grok-parser --no-document \
    && gem install fluent-plugin-concat --no-document \
    && gem install fluent-plugin-multi-format-parser --no-document \
    && gem install fluent-plugin-detect-exceptions --no-document \
    && gem install fluent-plugin-kafka -v 0.12.3 --no-document
# Set the environment variable
ENV TZ=Asia/Shanghai
# Time zone
RUN ln -snf /usr/share/zoneinfo/$TZ /etc/localtime
ENTRYPOINT ["/entrypoint.sh"]

[root@k8s-app-1 build]# cat fluent.conf

<source>

@type forward

@id input1

@label @mainstream

port 24224

</source>

<filter **>

@type stdout

</filter>

<label @mainstream>

<match docker.**>

@type file

@id output_docker1

path /fluentd/log/docker.*.log

symlink_path /fluentd/log/docker.log

append true

time_slice_format %Y%m%d

time_slice_wait 1m

time_format %Y%m%dT%H%M%S%z

</match>

<match **>

@type file

@id output1

path /fluentd/log/data.*.log

symlink_path /fluentd/log/data.log

append true

time_slice_format %Y%m%d

time_slice_wait 10m

time_format %Y%m%dT%H%M%S%z

</match>

</label>

[root@k8s-app-1 build]# docker build . -t registry.cn-hangzhou.aliyuncs.com/tianxiang_app/custom-fluentd-v3.4:v1

4. Deploy Fluentd

[root@k8s-master EFK]# cat fluentd/fluentd-configmap.yaml
kind: ConfigMap
apiVersion: v1
metadata:
  name: fluentd-config
  namespace: kube-logging
data:
  fluent.conf: |-
    @include system.conf
    @include containers.input.conf
    @include forward.input.conf
    @include output.conf
    @include fluentd.log.conf
  system.conf: |-
    <system>
      root_dir /tmp/fluentd-buffers/
      log_level warn
    </system>
  fluentd.log.conf: |-
    <label @FLUENT_LOG>
      <match **>
        @type null
      </match>
    </label>
  containers.input.conf: |-
    # Collect Kubernetes container logs
    <source>
      @id fluentd-containers.log
      @type tail
      path /var/log/containers/*.log # read the container log files (stdout/stderr of the containers)
      pos_file /var/log/es-containers.log.pos # records the read position to avoid re-reading
      tag raw.kubernetes.* # tag used by the later filter/match sections
      read_from_head true # read from the start of the file (useful on first start)
      <parse> # log format parsing
        @type multi_format # recognizes several formats automatically
        <pattern>
          format json # try JSON first (standard container log format)
          time_key time # the time field in the log is "time"
          time_format %Y-%m-%dT%H:%M:%S.%NZ # time format
        </pattern>
        <pattern>
          format /^(?<time>.+) (?<stream>stdout|stderr) [^ ]* (?<log>.*)$/ # fallback for log lines that are not JSON
          time_format %Y-%m-%dT%H:%M:%S.%N%:z
        </pattern>
      </parse>
    </source>
    # Join multi-line logs (for example Java exception stack traces)
    # multiline_start_regexp /^\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2}[\.\,]\d{3}/
    # matches the first line of a log record (for example of a Java exception); supported timestamp formats:
    # 2024-05-09 14:23:45.123 or 2024-05-09 14:23:45,123
    # that is, a line starting with YYYY-MM-DD HH:MM:SS.mmm or YYYY-MM-DD HH:MM:SS,mmm begins a new record
    <filter raw.kubernetes.**>
      @id filter_concat
      @type concat
      timeout 120s # default 60s, adjust as needed
      key log # merge on the log field
      multiline_start_regexp /^\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2}[\.\,]\d{3}/
      flush_interval 3s # shorter flush interval to avoid build-up
      separator "\n" # use a newline explicitly as the separator
      partial_value true
      exclude_tag raw.kubernetes.var.log.containers.fluentd-* # exclude Fluentd's own logs
    </filter>
    # Remove ANSI color control sequences (such as ^[[34m) from the log
    <filter raw.kubernetes.**>
      @id filter_remove_ansi_color
      @type record_transformer
      enable_ruby true
      <record>
        log ${record["log"].gsub(/\e\[[\d;]*m/, '')} # strip color codes with a Ruby regex
      </record>
    </filter>
    # Detect exception stack traces so that each exception is handled as a whole
    <match raw.kubernetes.**>
      @id raw.kubernetes
      @type detect_exceptions
      remove_tag_prefix raw # drop the raw. prefix from the tag
      message log # field that holds the log text
      languages java,python,go # enable exception detection for Java, Python and Go
      multiline_flush_interval 5 # wait up to 5 seconds for further lines
      max_bytes 500000 # at most 500 KB per record
      max_lines 1000 # merge at most 1000 lines
    </match>
    # Add Kubernetes metadata (namespace, labels, ...) based on the Pod name, container name, etc.
    <filter kubernetes.**>
      @id filter_kubernetes_metadata
      @type kubernetes_metadata
    </filter>
    # Parse the log field (if it contains a nested JSON document, expand it)
    <filter kubernetes.**>
      @id filter_parser
      @type parser
      key_name log # parse the log field
      reserve_data true # keep the original fields
      remove_key_name_field true # remove the original log field after parsing, so the text ends up in message
      <parse>
        @type multi_format
        <pattern>
          format json # try JSON first
        </pattern>
        <pattern>
          format none # not JSON: keep the line as is
        </pattern>
      </parse>
    </filter>
    #<filter kubernetes.**> # keep only log and drop message (so other plugins cannot write message back)
    #  @id normalize_log_field
    #  @type record_transformer
    #  enable_ruby true
    #  remove_keys message
    #  <record>
    #    log ${record['log'] || record['message']}
    #  </record>
    #</filter>
    # Record transformer that extracts the label and builds the index name, e.g. k8s-app-log-simulator-2025.04.01
    <filter kubernetes.**>
      @type record_transformer
      enable_ruby true
      <record>
        # Map the original time field to the @timestamp field that Elasticsearch expects
        @timestamp ${record.dig('time') || Time.now.utc.iso8601(3)}
        # Keep the original time field (optional)
        #time ${record.dig('time')}
        # Build the index name prefix from the k8s-app label in the Pod spec
        index_name k8s-app-${record.dig("kubernetes", "labels", "k8s-app") || "unknown"}-${Time.at(record.dig('time') ? DateTime.parse(record.dig('time')).to_time.utc.to_i : Time.now.to_i).strftime('%Y.%m.%d')}
      </record>
    </filter>
    # Only collect logs of Pods that carry the label logging: 'true'
    <filter kubernetes.**>
      @id filter_log
      @type grep
      <regexp>
        key $.kubernetes.labels.logging
        pattern ^true$
      </regexp>
    </filter>

  forward.input.conf: |-
    <source>
      @id forward
      @type forward
    </source>
  output.conf: |-
    <match kubernetes.**>
      @id elasticsearch
      @type elasticsearch
      @log_level info
      include_tag_key true
      host elasticsearch
      port 9200
      validate_client_version true
      logstash_format false # disable the default logstash format because the index name is custom
      index_name ${index_name} # use the dynamic index name built earlier
      request_timeout 15s
      # Set the time field and its format explicitly
      time_key @timestamp
      time_key_format %Y-%m-%dT%H:%M:%S.%NZ
      include_timestamp true # make sure @timestamp is indexed
      suppress_type_name true
      # Flatten nested hashes, e.g. kubernetes_container_name
      flatten_hashes true
      flatten_hashes_separator _
      reload_on_failure true
      reload_connections false
      reconnect_on_error true
      <buffer tag, index_name> # the buffer keys include index_name
        @type file
        path /var/log/fluentd-buffers/kubernetes.system.buffer
        timekey 1h
        timekey_wait 10m
        timekey_use_utc true
        flush_mode interval
        flush_interval 5s
        flush_thread_count 8
        chunk_full_threshold 0.9
        retry_type exponential_backoff
        retry_timeout 1h
        retry_max_interval 30
        retry_max_times 30
        chunk_limit_size 5M
        total_limit_size 50M
        overflow_action drop_oldest_chunk
      </buffer>
      <secondary>
        @type file
        path /var/log/fluentd-failed-records
        time_slice_format %Y%m%d
        time_slice_wait 10m
        time_format %Y-%m-%dT%H:%M:%S.%NZ
      </secondary>
    </match>

[root@k8s-master EFK]# cat fluentd/fluentd-daemonset.yaml

apiVersion: v1

kind: ServiceAccount

metadata:

name: fluentd-es

namespace: kube-logging

labels:

k8s-app: fluentd-es

kubernetes.io/cluster-service: "true"

addonmanager.kubernetes.io/mode: Reconcile

---

kind: ClusterRole

apiVersion: rbac.authorization.k8s.io/v1

metadata:

name: fluentd-es

labels:

k8s-app: fluentd-es

kubernetes.io/cluster-service: "true"

addonmanager.kubernetes.io/mode: Reconcile

rules:

- apiGroups:

- ""

resources:

- "namespaces"

- "pods"

verbs:

- "get"

- "watch"

- "list"

---

kind: ClusterRoleBinding

apiVersion: rbac.authorization.k8s.io/v1

metadata:

name: fluentd-es

labels:

k8s-app: fluentd-es

kubernetes.io/cluster-service: "true"

addonmanager.kubernetes.io/mode: Reconcile

subjects:

- kind: ServiceAccount

name: fluentd-es

namespace: kube-logging

apiGroup: ""

roleRef:

kind: ClusterRole

name: fluentd-es

apiGroup: ""

---

apiVersion: apps/v1

kind: DaemonSet

metadata:

name: fluentd-es

namespace: kube-logging

labels:

k8s-app: fluentd-es

kubernetes.io/cluster-service: "true"

addonmanager.kubernetes.io/mode: Reconcile

spec:

selector:

matchLabels:

k8s-app: fluentd-es

template:

metadata:

labels:

k8s-app: fluentd-es

kubernetes.io/cluster-service: "true"

spec:

priorityClassName: system-node-critical # keeps Fluentd from being evicted when the node evicts Pods; supports the critical-Pod priority scheme

serviceAccountName: fluentd-es

dnsPolicy: ClusterFirst

containers:

- name: fluentd-es

image: registry.cn-hangzhou.aliyuncs.com/tianxiang_app/custom-fluentd-v3.4:v1

env:

- name: FLUENTD_ARGS

value: --no-supervisor -q

- name: TZ

value: Asia/Shanghai

- name: K8S_NODE_NAME

valueFrom:

fieldRef:

fieldPath: spec.nodeName

resources:

limits:

cpu: "0.5"

memory: "2048Mi"

requests:

cpu: "0.2"

memory: "200Mi"

volumeMounts:

- name: varlog

mountPath: /var/log

- name: containers

mountPath: /var/lib/docker/containers/ # adjust this to your Docker data directory

readOnly: true

- name: config-volume

mountPath: /etc/fluent

nodeSelector:

beta.kubernetes.io/fluentd-ds-ready: "true" # the DaemonSet only schedules onto nodes that carry this label

tolerations:

- operator: Exists

terminationGracePeriodSeconds: 30

volumes:

- name: varlog

hostPath:

path: /var/log

- name: containers

hostPath:

path: /var/lib/docker/containers/ # adjust this to your Docker data directory

- name: config-volume

configMap:

name: fluentd-config

[root@k8s-master EFK]# kubectl apply -f fluentd/
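To make the filter chain in containers.input.conf easier to follow, here is a minimal Python sketch of three of its steps: joining multi-line records, stripping ANSI color codes, and deriving the index name. It is an illustration under stated assumptions, not Fluentd's implementation. The function names are hypothetical, the `time` field is assumed to be a UTC timestamp, and the date is assumed to be formatted in the container's time zone (`TZ=Asia/Shanghai`, modeled as a fixed UTC+8 offset).

```python
import re
from datetime import datetime, timedelta, timezone

# Same pattern as multiline_start_regexp in the concat filter.
RECORD_START = re.compile(r"^\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2}[.,]\d{3}")
# Same color-code pattern as the record_transformer filter (\e is ESC, \x1b).
ANSI_COLOR = re.compile(r"\x1b\[[\d;]*m")
# Assumption: dates are formatted in the container's zone, modeled as UTC+8.
LOCAL_TZ = timezone(timedelta(hours=8))


def join_multiline(lines):
    """A line that starts with a timestamp opens a new record; other lines are appended."""
    records = []
    for line in lines:
        if RECORD_START.match(line) or not records:
            records.append(line)
        else:
            records[-1] += "\n" + line
    return records


def strip_ansi(text):
    """Remove ANSI color sequences from a log record."""
    return ANSI_COLOR.sub("", text)


def index_name(record, now=None):
    """Build k8s-app-<k8s-app label or 'unknown'>-<YYYY.MM.DD>."""
    app = record.get("kubernetes", {}).get("labels", {}).get("k8s-app") or "unknown"
    raw = record.get("time")
    if raw:
        # Assumption: the time field is a UTC timestamp such as 2025-08-07T16:30:00Z.
        when = datetime.strptime(raw[:19], "%Y-%m-%dT%H:%M:%S").replace(tzinfo=timezone.utc)
    else:
        when = now or datetime.now(timezone.utc)
    return f"k8s-app-{app}-{when.astimezone(LOCAL_TZ):%Y.%m.%d}"


lines = [
    "2024-05-09 14:23:45.123 \x1b[31mERROR\x1b[0m boom",
    "java.lang.RuntimeException: boom",
    "2024-05-09 14:23:46,001 INFO ok",
]
records = [strip_ansi(r) for r in join_multiline(lines)]
print(len(records))
print(index_name({"kubernetes": {"labels": {"k8s-app": "nginx"}}, "time": "2025-08-07T16:30:00Z"}))
```

The sketch joins lines before stripping colors, in the same order as the filters in the ConfigMap. A line that begins with a color code would therefore not be recognized as the start of a new record.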

5. Deploy Kibana

apiVersion: v1

kind: ConfigMap

metadata:

namespace: kube-logging

name: kibana-config

labels:

app: kibana

data:

kibana.yml: |-

server.name: kibana

server.host: "0"

i18n.locale: zh-CN # set the default UI language to Chinese

elasticsearch:

hosts: ${ELASTICSEARCH_URL}

---

apiVersion: v1

kind: Service

metadata:

name: kibana

namespace: kube-logging

labels:

app: kibana

spec:

type: NodePort

selector:

app: kibana

ports:

- port: 5601

protocol: TCP

targetPort: 5601

---

apiVersion: apps/v1

kind: Deployment

metadata:

name: kibana

namespace: kube-logging

labels:

app: kibana

spec:

replicas: 1

selector:

matchLabels:

app: kibana

template:

metadata:

labels:

app: kibana

spec:

containers:

- name: kibana

image: docker.elastic.co/kibana/kibana:7.2.0

imagePullPolicy: IfNotPresent

resources:

limits:

cpu: 1000m

requests:

cpu: 100m

env:

- name: ELASTICSEARCH_URL

value: http://elasticsearch:9200

ports:

- containerPort: 5601

volumeMounts:

- name: config

mountPath: /usr/share/kibana/config/kibana.yml # mount path of the Kibana configuration file

readOnly: true

subPath: kibana.yml

volumes:

- name: config

configMap:

name: kibana-config

---

apiVersion: networking.k8s.io/v1

kind: Ingress

metadata:

name: kibana

namespace: kube-logging

annotations:

nginx.ingress.kubernetes.io/proxy-body-size: "0"

labels:

name: kibana-ingress

spec:

ingressClassName: nginx

rules:

- host: k8s-kibana.localhost.com

http:

paths:

- pathType: Prefix

path: "/"

backend:

service:

name: kibana

port:

number: 5601

[root@k8s-master EFK]# kubectl apply -f kibana/

6. Deploy Elastalert

Project code: https://github.com/zhentianxiang/elastalert2-feishu

6.1 Prepare the configuration file

[root@k8s-master1 elastalert]# cat configmap.yaml

apiVersion: v1

kind: ConfigMap

metadata:

name: elastalert-config

namespace: kube-logging

data:

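  k8s_app_error.yaml: |
    # ========== Basic settings ==========
    es_host: elasticsearch # Elasticsearch address (Kubernetes Service name or IP)
    es_port: 9200 # Elasticsearch port
    # ========== Core rule settings ==========
    name: k8s_error_log_helloworld # unique rule name (used to tell rules apart in logs and alerts)
    type: frequency # rule type:
    # - frequency: fires when a threshold is reached within a fixed time window
    # - any: fires on every match
    # - spike: sudden rise or drop in volume
    # - flatline: volume below a threshold
    # - change: fires when a field value changes
    # ========== Event deduplication ==========
    query_key: # fields used for deduplication (logs with the same values count as duplicates)
    - "@timestamp" # timestamp field (in effect this means no deduplication, because timestamps do not repeat)
    - "message" # log message field (the same message counts as a duplicate event)
    realert: # minimum interval between identical alerts (avoids alert spam)
      minutes: 5 # 0 means alert on every match (suited to critical errors)
      # with 5, the same error alerts only once within 5 minutes
    # ========== Trigger conditions ==========
    index: k8s-app-* # index pattern to watch (wildcards and date patterns are supported)
    num_events: 1 # threshold (1 means a single matching event triggers an alert)
    timeframe: # time window (used together with num_events)
      minutes: 1 # fires when num_events events occur within 1 minute
    # ========== Advanced settings (examples) ==========
    # aggregation: # alert aggregation (merge several logs into one alert)
    #   minutes: 5 # matches within 5 minutes are sent together
    # summary_table_fields: # fields shown in the aggregated alert
    #   - "message"
    # exponential_realert: # exponential alert interval (gradually reduces notifications for frequent errors)
    #   hours: 1 # the interval doubles with every repeated alert (1h→2h→4h...)
    filter:
    - query:
        bool:
          must:
          - bool:
              should: # match any one of the labels (OR)
              - term: { kubernetes_labels_k8s-app.keyword: "nginx" }
              - term: { kubernetes_labels_k8s-app.keyword: "log-simulator" }
              - term: { kubernetes_labels_k8s-app.keyword: "your-app-1" } # replace with your own label
              - term: { kubernetes_labels_k8s-app.keyword: "your-app-2" } # replace with your own label
              minimum_should_match: 1 # at least one label must match
          - query_string: # must be an ERROR-level log
              query: 'message: "*ERROR*" OR message: "*Exception*" OR message: "*stacktrace*"'
              analyze_wildcard: true
          must_not:
          - query_string:
              query: 'message: "*INFO*"' # exclude logs that contain INFO
              #query: 'uri:\/monitor\/getExceptionStatusList' # excludes this endpoint; \ is used for escaping
              analyze_wildcard: true
    alert:
    - "elastalert.alerters.feishu.FeishuAlert"
    # Matches inside this time range do not alert; useful to avoid false alarms during low-traffic hours
    #feishualert_skip:
    #  start: "01:00:00"
    #  end: "08:00:00"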

feishu_webhook_url: "https://open.feishu.cn/open-apis/bot/v2/hook/e62596c6-e043-4a64-975d-xxxxxxxx"

feishu_alert_type: "card" # 默认就是 card

alert_text_args:

- "@timestamp" # 告警触发时间

- "message" # 错误日志

- "num_hits" # 错误数量

- "num_matches" # 规则命中数

- "kubernetes_host" # 主机名

- "kubernetes_namespace_name" # Kubernetes 命名空间

- "kubernetes_pod_name" # Kubernetes Pod 名称

- "kubernetes_container_image" # 容器镜像

- "kubernetes_container_name" # 容器名称

- "stream" # 日志流

- "_index" # 索引名称

kibana_base_url: "http://k8s-kibana.linuxtian.com/app/kibana"

feishu_card_template:

msg_type: interactive

card:

header:

title:

tag: plain_text

content: "🚨 k8s业务error异常告警"

template: red

elements:

- tag: div

text:

tag: lark_md

content: "**🕒 触发时间:**{{@timestamp_local}}" # 本地时间 UTC+8

- tag: div

text:

tag: lark_md

content: "**📦 命名空间:**{{kubernetes_namespace_name}}"

- tag: div

text:

tag: lark_md

content: "**🐳 Pod 名称:**{{kubernetes_pod_name}}"

- tag: div

text:

tag: lark_md

content: "**🧪 容器镜像:**{{kubernetes_container_image}}"

- tag: div

text:

tag: lark_md

content: "**📛 容器名称:**{{kubernetes_container_name}}"

- tag: div

text:

tag: lark_md

content: "**📈 日志流:**{{stream}}"

- tag: div

text:

tag: lark_md

content: "**🗂 索引名称:**{{_index}}"

- tag: div

text:

tag: lark_md

content: "**📊 错误数量:**{{num_hits}}"

- tag: div

text:

tag: lark_md

content: "**🎯 规则命中数:**{{num_matches}}"

- tag: div

text:

tag: lark_md

content: "**📝 错误日志:**{{message}}"

- tag: hr

- tag: action

actions:

- tag: button

text:

tag: plain_text

content: "🔍 查看 Kibana 日志"

type: default

url: "{{ kibana_url }}"

config.json: |

{

"appName": "elastalert-server",

"port": 3030,

"wsport": 3333,

"elastalertPath": "/opt/elastalert",

"verbose": false,

"es_debug": false,

"debug": false,

"rulesPath": {

"relative": true,

"path": "/rules"

},

"templatesPath": {

"relative": true,

"path": "/rule_templates"

},

"es_host": "elasticsearch",

"es_port": 9200,

"writeback_index": "elastalert_status"

}

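  # Main ElastAlert configuration file: global settings such as the rules folder and the run interval
  elastalert.yaml: |
    rules_folder: rules # rules folder
    run_every:
      seconds: 60 # check the rules every 60 seconds
    buffer_time:
      minutes: 5 # buffer time of 5 minutes
    max_running_instances: 5 # at most 5 rules run concurrently
    es_host: elasticsearch # Elasticsearch host
    es_port: 9200 # Elasticsearch port
    use_ssl: False # whether to use SSL encryption
    verify_certs: False # whether to verify SSL certificates
    writeback_index: elastalert_status_helloworld # index that the alert status is written back to
    writeback_alias: elastalert_alerts_helloworld # alias for the written-back alerts
    alert_time_limit:
      days: 2 # maximum time range for recorded alerts; alerts older than 2 days are cleared

6.2 Email alert configuration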

To use email alert notifications, configure the rule as shown below.

Remember to create a smtp_auth.yaml file that stores the mailbox login credentials.

[root@k8s-master EFK]# cat elastalert/elastalert-configmap.yaml-bak

apiVersion: v1

kind: ConfigMap

metadata:

name: elastalert-config

namespace: kube-logging

data:

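  k8s_app_error.yaml: |
    # ========== Basic settings ==========
    es_host: elasticsearch # Elasticsearch address (Kubernetes Service name or IP)
    es_port: 9200 # Elasticsearch port
    # ========== Core rule settings ==========
    name: k8s_error_log_helloworld # unique rule name (used to tell rules apart in logs and alerts)
    type: frequency # rule type:
    # - frequency: fires when a threshold is reached within a fixed time window
    # - any: fires on every match
    # - spike: sudden rise or drop in volume
    # - flatline: volume below a threshold
    # - change: fires when a field value changes
    # ========== Event deduplication ==========
    query_key: # fields used for deduplication (logs with the same values count as duplicates)
    - "@timestamp" # timestamp field (in effect this means no deduplication, because timestamps do not repeat)
    - "message" # log message field (the same message counts as a duplicate event)
    realert: # minimum interval between identical alerts (avoids alert spam)
      minutes: 0 # 0 means alert on every match (suited to critical errors)
      # with 5, the same error alerts only once within 5 minutes
    # ========== Trigger conditions ==========
    index: k8s-app-* # index pattern to watch (wildcards and date patterns are supported)
    num_events: 1 # threshold (1 means a single matching event triggers an alert)
    timeframe: # time window (used together with num_events)
      minutes: 1 # fires when num_events events occur within 1 minute
    # ========== Advanced settings (examples) ==========
    # aggregation: # alert aggregation (merge several logs into one alert)
    #   minutes: 5 # matches within 5 minutes are sent together
    # summary_table_fields: # fields shown in the aggregated alert
    #   - "message"
    # exponential_realert: # exponential alert interval (gradually reduces notifications for frequent errors)
    #   hours: 1 # the interval doubles with every repeated alert (1h→2h→4h...)
    filter:
    - query:
        bool:
          must:
          - bool:
              should: # match any one of the labels (OR)
              - term: { kubernetes_labels_k8s-app.keyword: "nginx" }
              - term: { kubernetes_labels_k8s-app.keyword: "log-simulator" }
              - term: { kubernetes_labels_k8s-app.keyword: "your-app-1" } # replace with your own label
              - term: { kubernetes_labels_k8s-app.keyword: "your-app-2" } # replace with your own label
              minimum_should_match: 1 # at least one label must match
          - query_string: # must be an ERROR-level log
              query: 'message: "*ERROR*" OR message: "*Exception*" OR message: "*stacktrace*"'
              analyze_wildcard: true
          must_not:
          - query_string:
              query: 'message: "*INFO*"' # exclude logs that contain INFO
              #query: 'uri:\/monitor\/getExceptionStatusList' # excludes this endpoint; \ is used for escaping
              analyze_wildcard: true
    alert:
    - "email" # use the email alerter
    - "elastalert.alerters.feishu.FeishuAlert" # use the Feishu alerter
    # Matches inside this time range do not alert; useful to avoid false alarms during low-traffic hours
    #feishualert_skip:
    #  start: "01:00:00"
    #  end: "08:00:00"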

email:

- "2099637909@qq.com"

smtp_host: smtp.qq.com

smtp_port: 25

smtp_auth_file: /opt/elastalert/smtp_auth.yaml

from_addr: 2099637909@qq.com

email_format: html

alert_text_type: alert_text_only

# 标题

alert_subject: "生产环境日志告警通知"

# 丰富的邮件模板,包含了更多详细的字段

alert_text: "<br><a href='http://k8s-kibana.localhost.com/app/kibana' target='_blank' style='padding: 8px 16px;background-color: #46bc99;text-decoration:none;color:white;border-radius: 5px;'>立刻前往Kibana查看</a><br>

<table>

<tr><td style='padding:5px;text-align: right;font-weight: bold;border-radius: 5px;background-color: #eef;'>告警时间</td>

<td style='padding:5px;border-radius: 5px;background-color: #eef;'>{@timestamp}</td></tr>

<tr><td style='padding:5px;text-align: right;font-weight: bold;border-radius: 5px;background-color: #eef;'>服务名称</td>

<td style='padding:5px;border-radius: 5px;background-color: #eef;'>{module}</td></tr>

<tr><td style='padding:5px;text-align: right;font-weight: bold;border-radius: 5px;background-color: #eef;'>日志级别</td>

<td style='padding:5px;border-radius: 5px;background-color: #eef;'>{level}</td></tr>

<tr><td style='padding:5px;text-align: right;font-weight: bold;border-radius: 5px;background-color: #eef;'>错误日志</td>

<td style='padding:10px 5px;border-radius: 5px;background-color: #F8F9FA;'>{msg}</td></tr>

<tr><td style='padding:5px;text-align: right;font-weight: bold;border-radius: 5px;background-color: #eef;'>错误数量</td>

<td style='padding:5px;border-radius: 5px;background-color: #eef;'>{num_hits}</td></tr>

<tr><td style='padding:5px;text-align: right;font-weight: bold;border-radius: 5px;background-color: #eef;'>匹配日志数量</td>

<td style='padding:5px;border-radius: 5px;background-color: #eef;'>{num_matches}</td></tr>

<tr><td style='padding:5px;text-align: right;font-weight: bold;border-radius: 5px;background-color: #eef;'>主机名</td>

<td style='padding:5px;border-radius: 5px;background-color: #eef;'>{host}</td></tr>

<tr><td style='padding:5px;text-align: right;font-weight: bold;border-radius: 5px;background-color: #eef;'>Pod 名称</td>

<td style='padding:5px;border-radius: 5px;background-color: #eef;'>{kubernetes.pod_name}</td></tr>

<tr><td style='padding:5px;text-align: right;font-weight: bold;border-radius: 5px;background-color: #eef;'>容器名称</td>

<td style='padding:5px;border-radius: 5px;background-color: #eef;'>{kubernetes.container_name}</td></tr>

<tr><td style='padding:5px;text-align: right;font-weight: bold;border-radius: 5px;background-color: #eef;'>容器ID</td>

<td style='padding:5px;border-radius: 5px;background-color: #eef;'>{kubernetes.container_id}</td></tr>

<tr><td style='padding:5px;text-align: right;font-weight: bold;border-radius: 5px;background-color: #eef;'>日志流</td>

<td style='padding:5px;border-radius: 5px;background-color: #eef;'>{stream}</td></tr>

<tr><td style='padding:5px;text-align: right;font-weight: bold;border-radius: 5px;background-color: #eef;'>索引名称</td>

<td style='padding:5px;border-radius: 5px;background-color: #eef;'>{_index}</td></tr>

</table>"

feishu_webhook_url: "https://open.feishu.cn/open-apis/bot/v2/hook/xxxxxxxx-e043-4a64-975d-xxxxxxxx"

feishu_alert_type: "card" # 默认就是 card

alert_text_args:

- "@timestamp" # 告警触发时间

- "message" # 错误日志

- "num_hits" # 错误数量

- "num_matches" # 规则命中数

- "kubernetes_host" # 主机名

- "kubernetes_namespace_name" # Kubernetes 命名空间

- "kubernetes_pod_name" # Kubernetes Pod 名称

- "kubernetes_container_image" # 容器镜像

- "kubernetes_container_name" # 容器名称

- "stream" # 日志流

- "_index" # 索引名称

kibana_base_url: "http://k8s-kibana.linuxtian.com/app/kibana"

feishu_card_template:

msg_type: interactive

card:

header:

title:

tag: plain_text

content: "🚨 k8s业务error异常告警"

template: red

elements:

- tag: div

text:

tag: lark_md

content: "**🕒 触发时间:**{{@timestamp_local}}" # 本地时间 UTC+8

- tag: div

text:

tag: lark_md

content: "**📦 命名空间:**{{kubernetes_namespace_name}}"

- tag: div

text:

tag: lark_md

content: "**🐳 Pod 名称:**{{kubernetes_pod_name}}"

- tag: div

text:

tag: lark_md

content: "**🧪 容器镜像:**{{kubernetes_container_image}}"

- tag: div

text:

tag: lark_md

content: "**📛 容器名称:**{{kubernetes_container_name}}"

- tag: div

text:

tag: lark_md

content: "**📈 日志流:**{{stream}}"

- tag: div

text:

tag: lark_md

content: "**🗂 索引名称:**{{_index}}"

- tag: div

text:

tag: lark_md

content: "**📊 错误数量:**{{num_hits}}"

- tag: div

text:

tag: lark_md

content: "**🎯 规则命中数:**{{num_matches}}"

- tag: div

text:

tag: lark_md

content: "**📝 错误日志:**{{message}}"

- tag: hr

- tag: action

actions:

- tag: button

text:

tag: plain_text

content: "🔍 查看 Kibana 日志"

type: default

url: "{{ kibana_url }}"

config.json: |

{

"appName": "elastalert-server",

"port": 3030,

"wsport": 3333,

"elastalertPath": "/opt/elastalert",

"verbose": false,

"es_debug": false,

"debug": false,

"rulesPath": {

"relative": true,

"path": "/rules"

},

"templatesPath": {

"relative": true,

"path": "/rule_templates"

},

"es_host": "elasticsearch",

"es_port": 9200,

"writeback_index": "elastalert_status"

}

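# Main ElastAlert configuration file: rules directory, run frequency, and other global settings

elastalert.yaml: |

rules_folder: rules # rules directory

run_every:

seconds: 60 # evaluate the rules every 60 seconds

buffer_time:

minutes: 5 # query buffer window of 5 minutes

max_running_instances: 5 # run at most 5 rules concurrently

es_host: elasticsearch # Elasticsearch host

es_port: 9200 # Elasticsearch port

use_ssl: False # whether to connect over SSL

verify_certs: False # whether to verify the SSL certificate

writeback_index: elastalert_status_k8s # index that alert state is written back to

writeback_alias: elastalert_alerts_k8s # alias used for written-back alerts

alert_time_limit:

days: 2 # retry window for failed alerts: delivery is retried for up to 2 days

[root@k8s-master EFK]# cat elastalert/smtp_auth.yaml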

user: 2099637909@qq.com

password: odvodhilxmcqcfhf

[root@k8s-master EFK]# kubectl create secret generic smtp-auth --from-file=elastalert/smtp_auth.yaml -n kube-logging

# Second way to create it:

[root@k8s-master EFK]# cat elastalert/smtp_auth.yaml | base64 -w0

[root@k8s-master EFK]# cat elastalert/smtp_auth.yaml

apiVersion: v1

kind: Secret

metadata:

name: smtp-auth

namespace: kube-logging

data:

# paste the base64 output of the command above

smtp_auth.yaml: dXNlcjogMjA5OTYzNzkwOUBxcS5jb20KcGFzc3dvcmQ6IG9kdm9kaGlseG1jcWNmaGYK

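[root@k8s-master EFK]# kubectl apply -f elastalert/smtp_auth.yaml

6.3 Start the service

Note: do not mount the host's local timezone file into the container, otherwise the alert timestamps will be wrong.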
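Elasticsearch stores `@timestamp` in UTC, while the alert card is meant to show local time (UTC+8). The sketch below illustrates that conversion in isolation, which may help when checking whether a timestamp in an alert is off by the timezone offset. It is an illustration only, not ElastAlert's own code; `to_local` and `UTC_PLUS_8` are hypothetical names.

```python
from datetime import datetime, timedelta, timezone

# Fixed UTC+8 offset, used here instead of a tz database lookup.
UTC_PLUS_8 = timezone(timedelta(hours=8))


def to_local(timestamp_utc):
    """Convert an ISO 8601 UTC timestamp with a trailing 'Z' to UTC+8."""
    parsed = datetime.strptime(timestamp_utc, "%Y-%m-%dT%H:%M:%S.%fZ")
    return parsed.replace(tzinfo=timezone.utc).astimezone(UTC_PLUS_8)


if __name__ == "__main__":
    local = to_local("2025-03-08T13:54:40.231Z")
    print(local.strftime("%Y-%m-%d %H:%M:%S"))  # prints 2025-03-08 21:54:40
```

If an alert shows a time that is 8 hours early or late, the offset has most likely been applied zero times or twice somewhere along the path.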

[root@k8s-master EFK]# cat elastalert/deployment.yaml

apiVersion: apps/v1

kind: Deployment

metadata:

labels:

app: elastalert

name: elastalert

namespace: kube-logging

spec:

replicas: 1

selector:

matchLabels:

app: elastalert

template:

metadata:

labels:

app: elastalert

name: elastalert

spec:

containers:

- name: elastalert

#image: harbor.linuxtian.com/kube-logging/elastalert2-feishu:2.21.0

image: registry.cn-hangzhou.aliyuncs.com/tianxiang_app/elastalert2-feishu:v7

imagePullPolicy: Always

env:

- name: TZ

value: Asia/Shanghai

- name: ELASTALERT_LOG_LEVEL

value: INFO

ports:

- containerPort: 3030

name: tcp-3030

protocol: TCP

- containerPort: 3333

name: tcp-3333

protocol: TCP

resources:

limits:

cpu: '1'

memory: "1024Mi"

requests:

cpu: '0.5'

memory: "512Mi"

volumeMounts:

- name: elastalert-config

mountPath: /opt/elastalert/config.yaml

subPath: elastalert.yaml

#- name: smtp-auth-volume

# mountPath: /opt/elastalert/smtp_auth.yaml

# subPath: smtp_auth.yaml

- name: elastalert-config

mountPath: /opt/elastalert-server/config/config.json

subPath: config.json

- name: elastalert-config

mountPath: /opt/elastalert/rules/k8s_app_error.yaml

subPath: k8s_app_error.yaml

dnsPolicy: ClusterFirst

restartPolicy: Always

volumes:

- name: elastalert-config

configMap:

defaultMode: 420

name: elastalert-config

#- name: smtp-auth-volume

# secret:

# secretName: smtp-auth

---

apiVersion: v1

kind: Service

metadata:

name: elastalert

namespace: kube-logging

spec:

ports:

- name: serverport

port: 3030

protocol: TCP

targetPort: 3030

- name: transport

port: 3333

protocol: TCP

targetPort: 3333

selector:

app: elastalert

7. View the ElastAlert indices

Once the service is running, it creates 5 indices in Elasticsearch:

[root@k8s-master1 k8s-app]# curl -X GET "http://10.96.18.27:9200/_cat/indices/elastalert_status*?v"

health status index uuid pri rep docs.count docs.deleted store.size pri.store.size

green open elastalert_status_status 8MmzXf2eQrSU3Qare6MkAw 1 1 43 0 64.1kb 32.1kb

green open elastalert_status_error KFTTY0ugS8KmKc6iWaOcww 1 1 0 0 566b 283b

green open elastalert_status 0_tLdZKwTb6ZfUYgAH5pvw 1 1 188 0 178.5kb 89.2kb

green open elastalert_status_silence xB0Gt6-4Tiib--CWcuBB7A 1 1 190 0 138.4kb 69.2kb

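green open elastalert_status_past M9NKxpJLTA2fIWcuvAfjZA 1 1 0 0 566b 283b

1. Purpose:

If you run two or more alerting services, each handling business logs from a different index and alerting a different group, the names of these writeback indices must also differ between the services. Otherwise alerts will be misreported or mixed up.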
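The naming constraint above can be checked mechanically before deploying. A minimal sketch, assuming each instance creates the five index names seen in the listing above (the `writeback_index` value itself plus the `_status`, `_error`, `_silence`, and `_past` suffixes); `derived_indices` and `find_collisions` are hypothetical helpers, not part of ElastAlert:

```python
from collections import defaultdict

# Suffixes observed in the index listing above; "" is the base writeback index.
SUFFIXES = ("", "_status", "_error", "_silence", "_past")


def derived_indices(writeback_index):
    """Return the index names one ElastAlert instance is expected to create."""
    return [writeback_index + suffix for suffix in SUFFIXES]


def find_collisions(instances):
    """Map each index name used by more than one instance to those instances.

    `instances` maps an instance label to its writeback_index setting.
    """
    owners = defaultdict(list)
    for label, writeback_index in instances.items():
        for index in derived_indices(writeback_index):
            owners[index].append(label)
    return {index: labels for index, labels in owners.items() if len(labels) > 1}


if __name__ == "__main__":
    shared = {"alarm-1": "elastalert_status", "alarm-2": "elastalert_status"}
    unique = {
        "alarm-1": "elastalert_status_test-alarm-1",
        "alarm-2": "elastalert_status_test-alarm-2",
    }
    print(sorted(find_collisions(shared)))  # all five names collide
    print(find_collisions(unique))          # prints {}
```

Note that a partial overlap is also caught: a `writeback_index` of `x` in one instance and `x_status` in another collide on the name `x_status`.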

2. Example with two instances:

The Deployment below defines two containers, each mounting its own configuration files.

[root@k8s-app-1 elastalert]# cat elastalert-deplployment.yaml

apiVersion: apps/v1

kind: Deployment

metadata:

labels:

app: elastalert

name: elastalert

namespace: kube-logging

spec:

replicas: 1

selector:

matchLabels:

app: elastalert

template:

metadata:

labels:

app: elastalert

name: elastalert

spec:

containers:

- name: elastalert-test-alarm-1

image: registry.cn-hangzhou.aliyuncs.com/tianxiang_app/elastalert2-feishu:v7

imagePullPolicy: IfNotPresent

env:

- name: TZ

value: Asia/Shanghai

- name: ELASTALERT_LOG_LEVEL

value: INFO

ports:

- containerPort: 3030

name: tcp-3030

protocol: TCP

- containerPort: 3333

name: tcp-3333

protocol: TCP

resources:

limits:

cpu: '1'

memory: "1024Mi"

requests:

cpu: '0.5'

memory: "512Mi"

volumeMounts:

- name: elastalert-config-test-alarm-1

mountPath: /opt/elastalert/config.yaml

subPath: elastalert.yaml

#- name: smtp-auth-volume

# mountPath: /opt/elastalert/smtp_auth.yaml

# subPath: smtp_auth.yaml

- name: elastalert-config-test-alarm-1

mountPath: /opt/elastalert-server/config/config.json

subPath: config.json

- name: elastalert-config-test-alarm-1

mountPath: /opt/elastalert/rules/k8s_app_error.yaml

subPath: k8s_app_error.yaml

- name: elastalert-test-alarm-2

image: registry.cn-hangzhou.aliyuncs.com/tianxiang_app/elastalert2-feishu:v7

imagePullPolicy: IfNotPresent

env:

- name: TZ

value: Asia/Shanghai

- name: ELASTALERT_LOG_LEVEL

value: INFO

ports:

- containerPort: 3030

name: tcp-3030

protocol: TCP

- containerPort: 3333

name: tcp-3333

protocol: TCP

resources:

limits:

cpu: '1'

memory: "1024Mi"

requests:

cpu: '0.5'

memory: "512Mi"

volumeMounts:

- name: elastalert-config-test-alarm-2

mountPath: /opt/elastalert/config.yaml

subPath: elastalert.yaml

#- name: smtp-auth-volume

# mountPath: /opt/elastalert/smtp_auth.yaml

# subPath: smtp_auth.yaml

- name: elastalert-config-test-alarm-2

mountPath: /opt/elastalert-server/config/config.json

subPath: config.json

- name: elastalert-config-test-alarm-2

mountPath: /opt/elastalert/rules/k8s_app_error.yaml

subPath: k8s_app_error.yaml

volumes:

- name: elastalert-config-test-alarm-1

configMap:

defaultMode: 420

name: elastalert-config-test-alarm-1

- name: elastalert-config-test-alarm-2

configMap:

defaultMode: 420

name: elastalert-config-test-alarm-2

#- name: smtp-auth-volume

# secret:

# secretName: smtp-auth

The ConfigMaps are as follows:

# First instance

[root@k8s-app-1 elastalert]# cat elastalert-configmap-test-alarm-1.yaml

metadata:

name: elastalert-config-test-alarm-1 # must be unique

k8s_app_error.yaml: |

name: k8s_error_log_test-alarm-1 # must be unique

index: k8s-app-test-alarm-1* # must be unique

filter:

- query:

bool:

must:

- bool:

should: # match any one of the labels (OR)

- term: { kubernetes_labels_app.keyword: "test-app" } # matches logs in the k8s-app-test-alarm-1* indices

- term: { kubernetes_labels_app.keyword: "your-app-1" } # replace with your own label

- term: { kubernetes_labels_app.keyword: "your-app-2" } # replace with your own label

minimum_should_match: 1 # at least one label must match

- query_string: # keep the original error-log match

query: "message:(*error* OR *Exception* OR *stacktrace*)"

analyze_wildcard: true

elastalert.yaml: |

writeback_index: elastalert_status_test-alarm-1 # must be unique

writeback_alias: elastalert_alerts_test-alarm-1 # must be unique

# Second instance

[root@k8s-app-1 elastalert]# cat elastalert-configmap-test-alarm-2.yaml

metadata:

name: elastalert-config-test-alarm-2 # must be unique

k8s_app_error.yaml: |

name: k8s_error_log_test-alarm-2 # must be unique

index: k8s-app-test-alarm-2* # must be unique

filter:

- query:

bool:

must:

- bool:

should: # match any one of the labels (OR)

- term: { kubernetes_labels_app.keyword: "prod-app" } # matches logs in the k8s-app-test-alarm-2* indices

- term: { kubernetes_labels_app.keyword: "your-app-1" } # replace with your own label

- term: { kubernetes_labels_app.keyword: "your-app-2" } # replace with your own label

minimum_should_match: 1 # at least one label must match

- query_string: # keep the original error-log match

query: "message:(*error* OR *Exception* OR *stacktrace*)"

analyze_wildcard: true

elastalert.yaml: |

writeback_index: elastalert_status_test-alarm-2 # must be unique

writeback_alias: elastalert_alerts_test-alarm-2 # must be unique

Check the indices again; if all goes well you will see 8 indices:

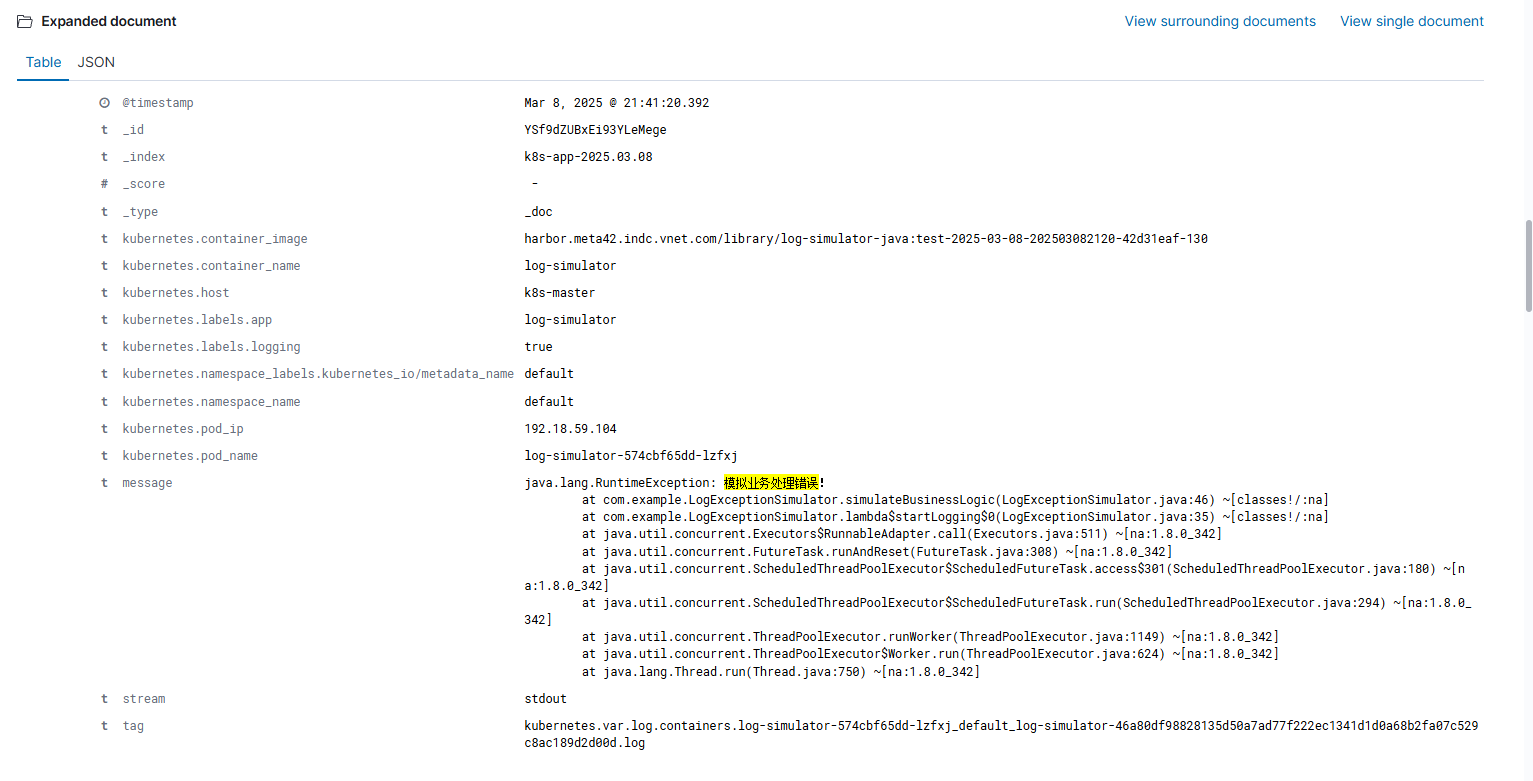

III. Using Kibana

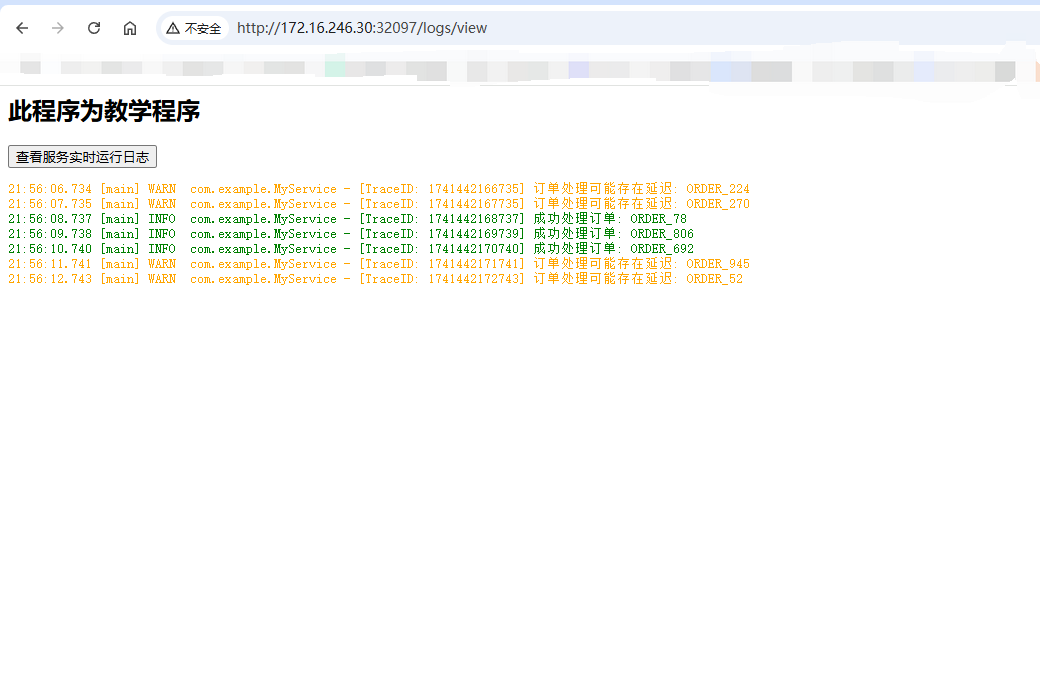

1. Build the business service image

Write a small Java program that simulates business logs.

[root@k8s-master log-demo]# mkdir log-simulator

[root@k8s-master log-demo]# mkdir -pv log-simulator/src/main/java/com/example

mkdir: created directory 'log-simulator/src'

mkdir: created directory 'log-simulator/src/main'

mkdir: created directory 'log-simulator/src/main/java'

mkdir: created directory 'log-simulator/src/main/java/com'

mkdir: created directory 'log-simulator/src/main/java/com/example'

[root@k8s-master log-demo]# mkdir -pv log-simulator/src/main/resources

mkdir: created directory 'log-simulator/src/main/resources'

[root@k8s-master log-demo]# mkdir -pv log-simulator/src/resources

mkdir: created directory 'log-simulator/src/resources'

[root@k8s-master log-demo]# tree

.

└── log-simulator

├── Dockerfile

├── pom.xml

└── src

├── main

│ ├── java

│ │ └── com

│ │ └── example

│ │ └── LogExceptionSimulator.java

│ └── resources

└── resources

└── logback.xml

8 directories, 4 files

[root@k8s-master log-demo]# cat log-simulator/pom.xml

<?xml version="1.0" encoding="UTF-8"?>

<project xmlns="http://maven.apache.org/POM/4.0.0"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">

<modelVersion>4.0.0</modelVersion>

<groupId>com.example</groupId>

<artifactId>log-simulator</artifactId>

<version>1.0.0</version>

<packaging>jar</packaging>

<properties>

<project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>

<maven.compiler.source>1.8</maven.compiler.source>

<maven.compiler.target>1.8</maven.compiler.target>

</properties>

<dependencies>

<!-- Spring Boot Web dependency -->

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-web</artifactId>

<version>2.7.17</version> <!-- a Spring Boot version compatible with Java 1.8 -->

</dependency>

<!-- Spring Boot Actuator (health checks and monitoring) -->

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-actuator</artifactId>

<version>2.7.17</version>

</dependency>

<!-- Spring Boot Starter Logging (SLF4J + Logback) -->

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-logging</artifactId>

<version>2.7.17</version>

</dependency>

</dependencies>

<build>

<plugins>

<!-- Spring Boot Maven plugin (builds the executable JAR) -->

<plugin>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-maven-plugin</artifactId>

<version>2.7.17</version>

<executions>

<execution>

<goals>

<goal>repackage</goal>

</goals>

</execution>

</executions>

</plugin>

<!-- Maven compiler plugin -->

<plugin>

<groupId>org.apache.maven.plugins</groupId>

<artifactId>maven-compiler-plugin</artifactId>

<version>3.8.1</version>

<configuration>

<source>1.8</source>

<target>1.8</target>

<encoding>UTF-8</encoding>

</configuration>

</plugin>

</plugins>

</build>

</project>

[root@k8s-master log-demo]# cat log-simulator/src/resources/logback.xml

<configuration>

<appender name="CONSOLE" class="ch.qos.logback.core.ConsoleAppender">

<encoder>

<charset>UTF-8</charset>

<pattern>%d{yyyy-MM-dd HH:mm:ss.SSS} [%thread] %-5level %logger{36} - [TraceID: %X{traceId}] %msg%n</pattern>

</encoder>

</appender>

<root level="INFO">

<appender-ref ref="CONSOLE" />

</root>

</configuration>

[root@k8s-master log-demo]# cat log-simulator/src/main/java/com/example/LogExceptionSimulator.java

package com.example;

import org.slf4j.Logger;

import org.slf4j.LoggerFactory;

import org.springframework.boot.SpringApplication;

import org.springframework.boot.autoconfigure.SpringBootApplication;

import org.springframework.web.bind.annotation.GetMapping;

import org.springframework.web.bind.annotation.RequestMapping;

import org.springframework.web.bind.annotation.RestController;

import org.springframework.web.servlet.mvc.method.annotation.SseEmitter;

import java.io.IOException;

import java.time.LocalTime;

import java.time.format.DateTimeFormatter;

import java.util.Random;

import java.util.concurrent.Executors;

import java.util.concurrent.ScheduledExecutorService;

import java.util.concurrent.TimeUnit;

import java.util.UUID;

@SpringBootApplication

public class LogExceptionSimulator {

private static final Logger logger = LoggerFactory.getLogger(LogExceptionSimulator.class);

private static final ScheduledExecutorService scheduler = Executors.newScheduledThreadPool(1);

private static final Random random = new Random();

public static void main(String[] args) {

SpringApplication.run(LogExceptionSimulator.class, args);

startLogging();

}

private static void startLogging() {

scheduler.scheduleAtFixedRate(() -> {

try {

simulateBusinessLogic();

} catch (Exception e) {

String traceId = UUID.randomUUID().toString();

logger.error("处理订单时发生异常 - [TraceID: {}]", traceId, e);

}

}, 0, 1, TimeUnit.SECONDS);

}

private static void simulateBusinessLogic() {

// Throw an exception with 10% probability

if (random.nextInt(10) < 1) {

throw new RuntimeException("模拟业务处理错误!");

}

// Log a success message

logger.info("成功处理订单: ORDER_{} - [TraceID: {}]", (int) (Math.random() * 1000), System.currentTimeMillis());

// Log a warning with 30% probability

if (random.nextInt(10) < 3) {

logger.warn("订单处理可能存在延迟: ORDER_{} - [TraceID: {}]", (int) (Math.random() * 1000), System.currentTimeMillis());

}

}

}

@RestController

@RequestMapping("/logs")

class LogController {

private final Random random = new Random();

@GetMapping("/view")

public String viewLogsPage() {

return "<html><head><title>日志查看</title></head><body>"

+ "<h2>此程序为教学程序</h2>"

+ "<button onclick=\"startListening()\">查看服务实时运行日志</button>"

+ "<pre id='logOutput'></pre>"

+ "<script>"

+ "function startListening() {"

+ " var eventSource = new EventSource('/logs/stream');"

+ " eventSource.onmessage = function(event) {"

+ " var logMessage = event.data;"

+ " var logOutput = document.getElementById('logOutput');"

+ " if (logMessage.includes('ERROR')) {"

+ " logOutput.innerHTML += '<span style=\"color:red\">' + logMessage + '</span><br>';"

+ " } else if (logMessage.includes('WARN')) {"

+ " logOutput.innerHTML += '<span style=\"color:orange\">' + logMessage + '</span><br>';"

+ " } else {"

+ " logOutput.innerHTML += '<span style=\"color:green\">' + logMessage + '</span><br>';"

+ " }"

+ " };"

+ "}"

+ "</script>"

+ "</body></html>";

}

@GetMapping("/stream")

public SseEmitter streamLogs() {

SseEmitter emitter = new SseEmitter();

Executors.newSingleThreadExecutor().execute(() -> {

try {

while (true) {

String logMessage = generateLogMessage();

emitter.send(logMessage);

Thread.sleep(1000);

}

} catch (IOException | InterruptedException e) {

emitter.complete();

}

});

return emitter;

}

private String generateLogMessage() {

String timestamp = LocalTime.now().format(DateTimeFormatter.ofPattern("HH:mm:ss.SSS"));

long traceId = System.currentTimeMillis();

int orderId = random.nextInt(1000);

// Pick a log level at random

int level = random.nextInt(10);

String logMessage = "";

if (level < 1) {

logMessage = String.format("%s [main] ERROR com.example.MyService - [TraceID: %d] 处理订单时发生异常: 模拟业务处理错误!", timestamp, traceId);

} else if (level < 3) {

logMessage = String.format("%s [main] WARN com.example.MyService - [TraceID: %d] 订单处理可能存在延迟: ORDER_%d", timestamp, traceId, orderId);

} else {

logMessage = String.format("%s [main] INFO com.example.MyService - [TraceID: %d] 成功处理订单: ORDER_%d", timestamp, traceId, orderId);

}

return logMessage;

}

}

[root@k8s-master log-demo]# cat log-simulator/Dockerfile

# Stage 1: build the JAR with Maven (JDK 1.8)

FROM maven:3.8.7-eclipse-temurin-8 AS build

# Configure the Aliyun Maven mirror for faster downloads

RUN mkdir -p /usr/share/maven/ref/ && \

echo '<settings xmlns="http://maven.apache.org/SETTINGS/1.0.0"' > /usr/share/maven/ref/settings.xml && \

echo ' xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"' >> /usr/share/maven/ref/settings.xml && \

echo ' xsi:schemaLocation="http://maven.apache.org/SETTINGS/1.0.0 https://maven.apache.org/xsd/settings-1.0.0.xsd">' >> /usr/share/maven/ref/settings.xml && \

echo ' <mirrors>' >> /usr/share/maven/ref/settings.xml && \

echo ' <mirror>' >> /usr/share/maven/ref/settings.xml && \

echo ' <id>aliyun</id>' >> /usr/share/maven/ref/settings.xml && \

echo ' <name>Aliyun Maven Mirror</name>' >> /usr/share/maven/ref/settings.xml && \

echo ' <url>https://maven.aliyun.com/repository/public</url>' >> /usr/share/maven/ref/settings.xml && \

echo ' <mirrorOf>central</mirrorOf>' >> /usr/share/maven/ref/settings.xml && \

echo ' </mirror>' >> /usr/share/maven/ref/settings.xml && \

echo ' </mirrors>' >> /usr/share/maven/ref/settings.xml && \

echo '</settings>' >> /usr/share/maven/ref/settings.xml

WORKDIR /app

COPY pom.xml .

# Pre-download dependencies to shorten later builds

RUN mvn -s /usr/share/maven/ref/settings.xml dependency:go-offline

COPY src ./src

# Package with Maven, skipping tests

RUN mvn -s /usr/share/maven/ref/settings.xml package -DskipTests

# Stage 2: run the JAR on JDK 8

FROM harbor.meta42.indc.vnet.com/library/jdk8:v4

ENV JAVA_OPT="-Xms512m -Xmx1024m"

ENV LANG="zh_CN.UTF-8"

ENV TZ=Asia/Shanghai

WORKDIR /app

COPY --from=build /app/target/log-simulator-1.0.0.jar ./app.jar

ENTRYPOINT java -Dfile.encoding=UTF-8 $JAVA_OPT -jar app.jar

[root@k8s-master log-demo]# docker build log-simulator/ -t log-simulator:latest

[INFO] Replacing main artifact with repackaged archive

[INFO] ------------------------------------------------------------------------

[INFO] BUILD SUCCESS

[INFO] ------------------------------------------------------------------------

[INFO] Total time: 27.673 s

[INFO] Finished at: 2025-03-08T11:50:22Z

[INFO] ------------------------------------------------------------------------

Removing intermediate container 809d0019f395

---> 6bb15acfbc81

Step 8/14 : FROM harbor.meta42.indc.vnet.com/library/jdk8:v4

---> 796692a67328

Step 9/14 : ENV LANG="zh_CN.UTF-8"

---> Using cache

---> 03fddc0d22ae

Step 10/14 : ENV LC_ALL="zh_CN.UTF-8"

---> Using cache

---> c8dfdc30ad0c

Step 11/14 : ENV TZ=Asia/Shanghai

---> Using cache

---> 6baccbda106b

Step 12/14 : WORKDIR /app

---> Using cache

---> 54a1362556d8

Step 13/14 : COPY --from=build /app/target/log-simulator-1.0.0.jar ./app.jar

---> 432e9b2bf3fd

Step 14/14 : ENTRYPOINT ["java", "-Dfile.encoding=UTF-8", "-jar", "app.jar"]

---> Running in 9aed26d19e8a

Removing intermediate container 9aed26d19e8a

---> 3d3ce4e8a6db

Successfully built 3d3ce4e8a6db

Successfully tagged log-simulator:latest

2. Start the service

[root@k8s-master log-demo]# vim deployment.yaml

apiVersion: apps/v1

kind: Deployment

metadata:

name: log-simulator

namespace: default

labels:

k8s-app: log-simulator

spec:

replicas: 1

selector:

matchLabels:

k8s-app: log-simulator

template:

metadata:

labels:

k8s-app: log-simulator

logging: "true"

spec:

containers:

- name: log-simulator

image: log-simulator:latest

imagePullPolicy: IfNotPresent

env:

- name: LANG

value: "zh_CN.UTF-8"

- name: TZ

value: "Asia/Shanghai" # set the timezone so that log timestamps are not skewed

- name: JAVA_OPT

value: '-Xms512m -Xmx2048m -Xmn256m -XX:+UseG1GC'

---

apiVersion: v1

kind: Service

metadata:

name: log-simulator

namespace: default

spec:

selector:

k8s-app: log-simulator

ports:

- protocol: TCP

port: 8080

targetPort: 8080

type: NodePort

[root@k8s-master log-demo]# kubectl apply -f deployment.yaml

[root@k8s-master log-demo]# kubectl get pod -l k8s-app=log-simulator

NAME READY STATUS RESTARTS AGE

log-simulator-fcf59c5d-txwl5 1/1 Running 0 2m11s

[root@k8s-master log-demo]# kubectl logs --tail=10 -f -l k8s-app=log-simulator

2025-03-08 21:54:35.230 INFO 8 --- [pool-1-thread-1] com.example.LogExceptionSimulator : 成功处理订单: ORDER_686 - [TraceID: 1741442075230]

2025-03-08 21:54:36.230 INFO 8 --- [pool-1-thread-1] com.example.LogExceptionSimulator : 成功处理订单: ORDER_178 - [TraceID: 1741442076230]

2025-03-08 21:54:36.230 WARN 8 --- [pool-1-thread-1] com.example.LogExceptionSimulator : 订单处理可能存在延迟: ORDER_263 - [TraceID: 1741442076230]

2025-03-08 21:54:37.230 INFO 8 --- [pool-1-thread-1] com.example.LogExceptionSimulator : 成功处理订单: ORDER_70 - [TraceID: 1741442077230]

2025-03-08 21:54:38.230 INFO 8 --- [pool-1-thread-1] com.example.LogExceptionSimulator : 成功处理订单: ORDER_558 - [TraceID: 1741442078230]

2025-03-08 21:54:38.230 WARN 8 --- [pool-1-thread-1] com.example.LogExceptionSimulator : 订单处理可能存在延迟: ORDER_271 - [TraceID: 1741442078230]

2025-03-08 21:54:39.230 INFO 8 --- [pool-1-thread-1] com.example.LogExceptionSimulator : 成功处理订单: ORDER_781 - [TraceID: 1741442079230]

2025-03-08 21:54:40.231 ERROR 8 --- [pool-1-thread-1] com.example.LogExceptionSimulator : 处理订单时发生异常 - [TraceID: 78549e24-024d-4c66-a245-ecfe6f2bddf2]

java.lang.RuntimeException: 模拟业务处理错误!

at com.example.LogExceptionSimulator.simulateBusinessLogic(LogExceptionSimulator.java:46) ~[classes!/:na]

at com.example.LogExceptionSimulator.lambda$startLogging$0(LogExceptionSimulator.java:35) ~[classes!/:na]

at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511) ~[na:1.8.0_342]

at java.util.concurrent.FutureTask.runAndReset(FutureTask.java:308) ~[na:1.8.0_342]

at java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.access$301(ScheduledThreadPoolExecutor.java:180) ~[na:1.8.0_342]

at java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.run(ScheduledThreadPoolExecutor.java:294) ~[na:1.8.0_342]

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149) ~[na:1.8.0_342]

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624) ~[na:1.8.0_342]

at java.lang.Thread.run(Thread.java:750) ~[na:1.8.0_342]

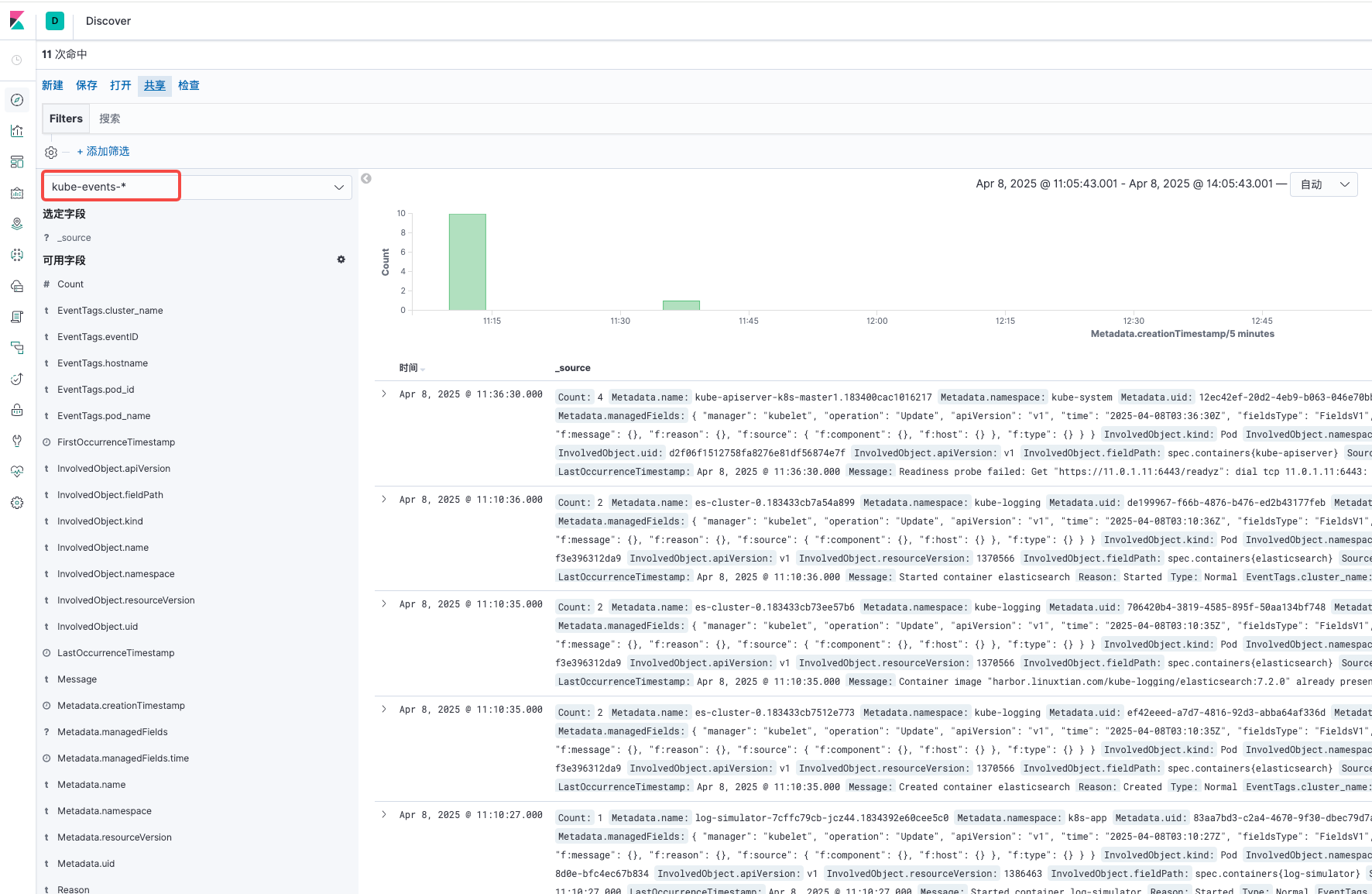

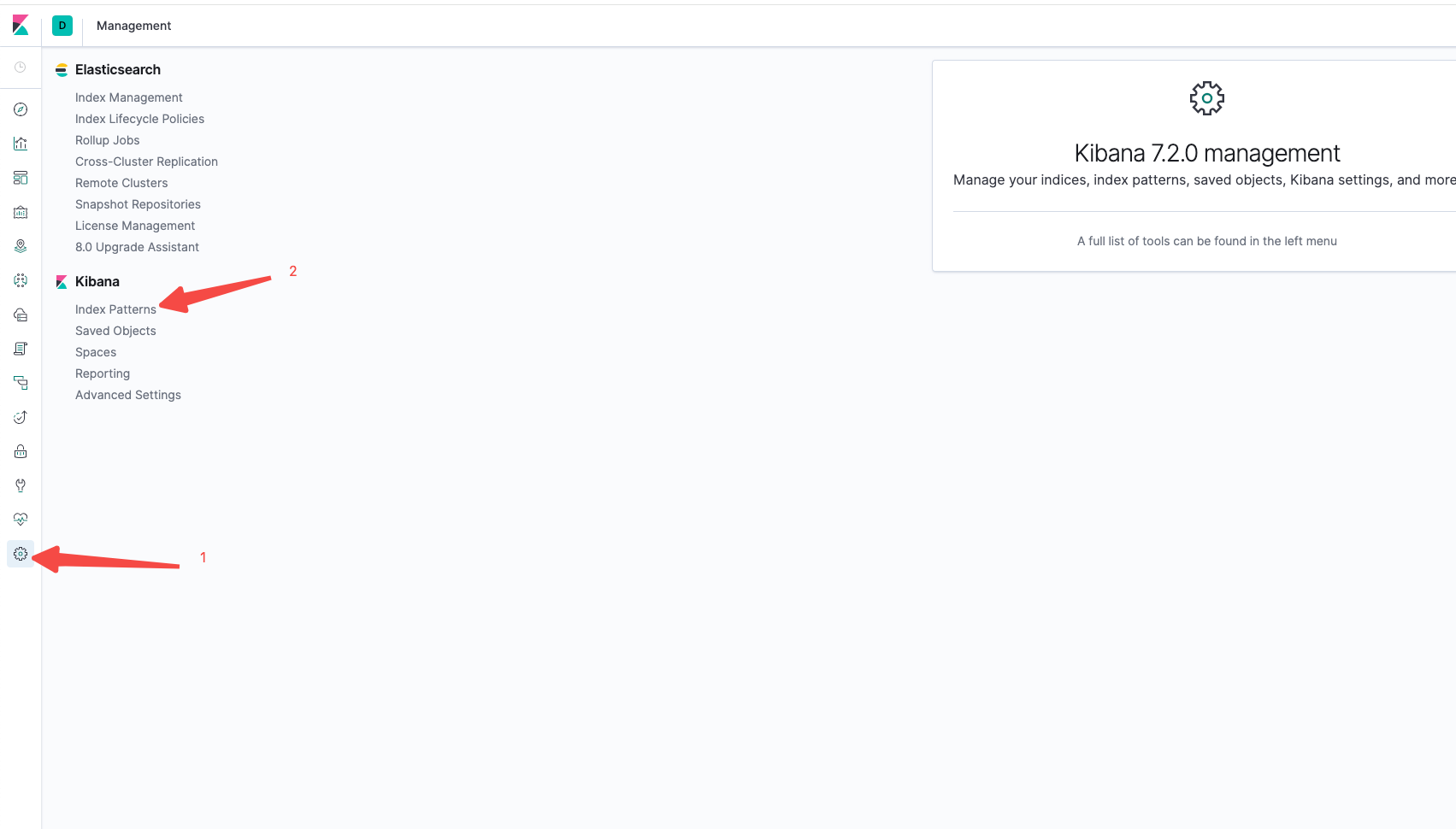

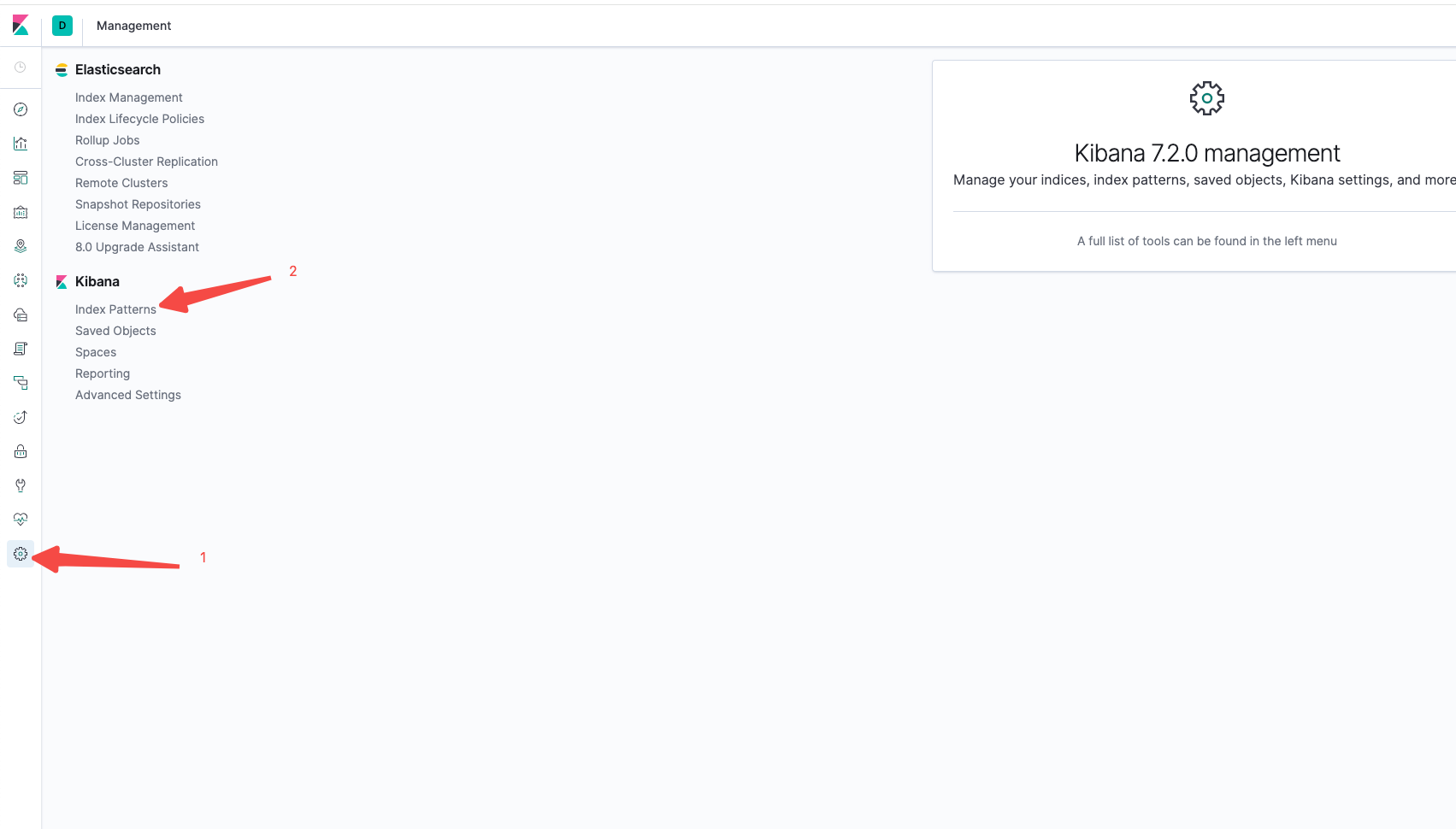

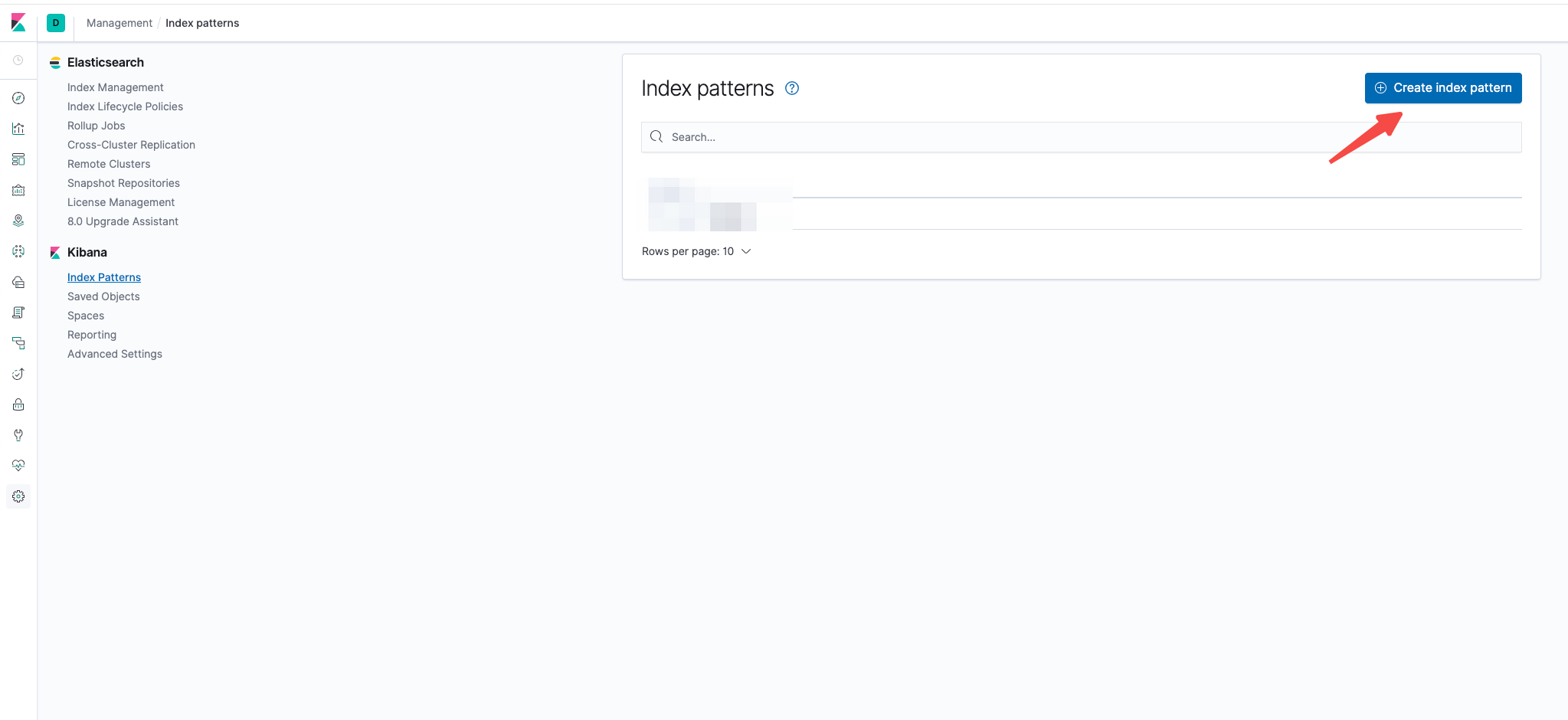

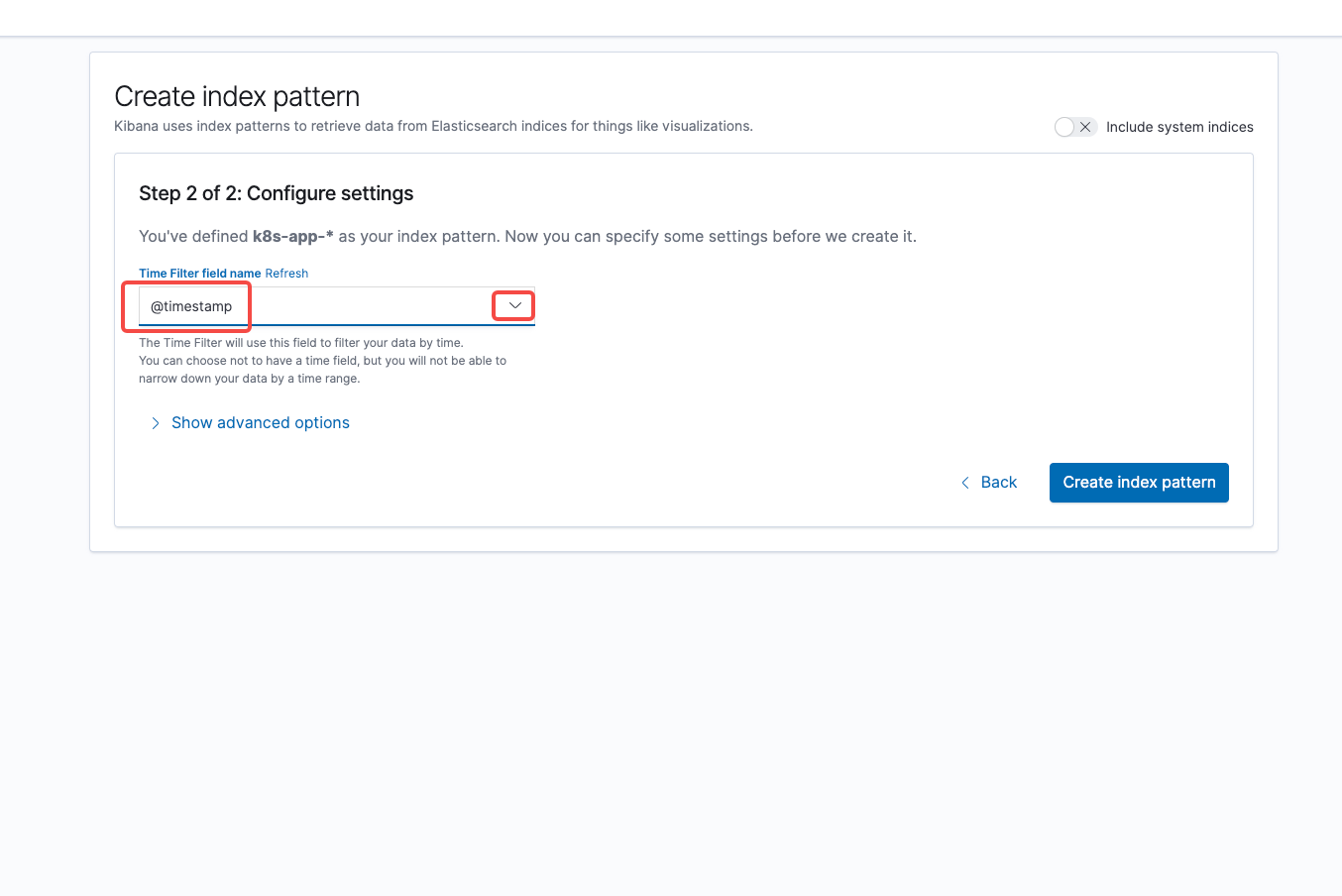

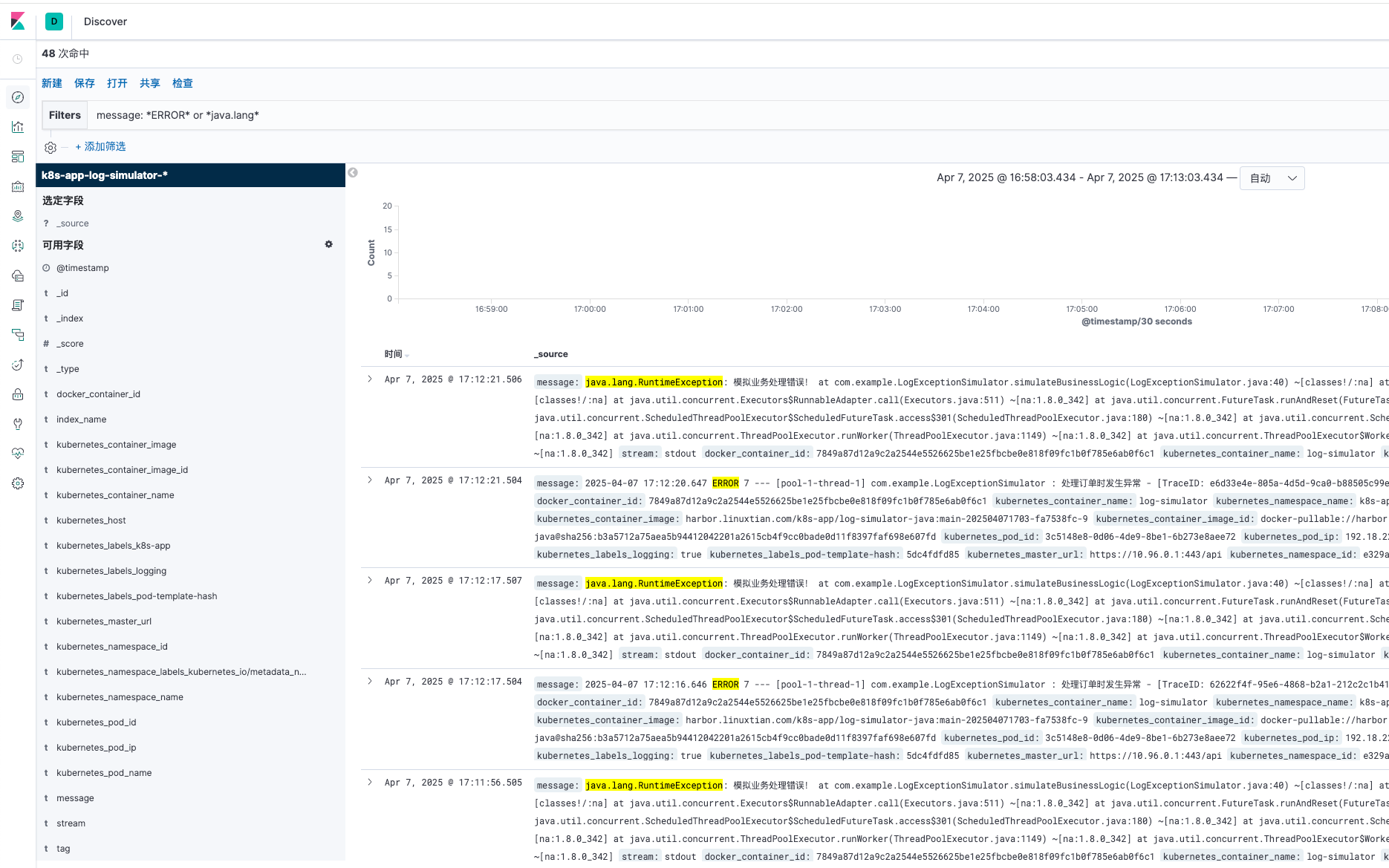

3. Add an index pattern

Open the Kibana console and add the index pattern by following the steps in the screenshot below.

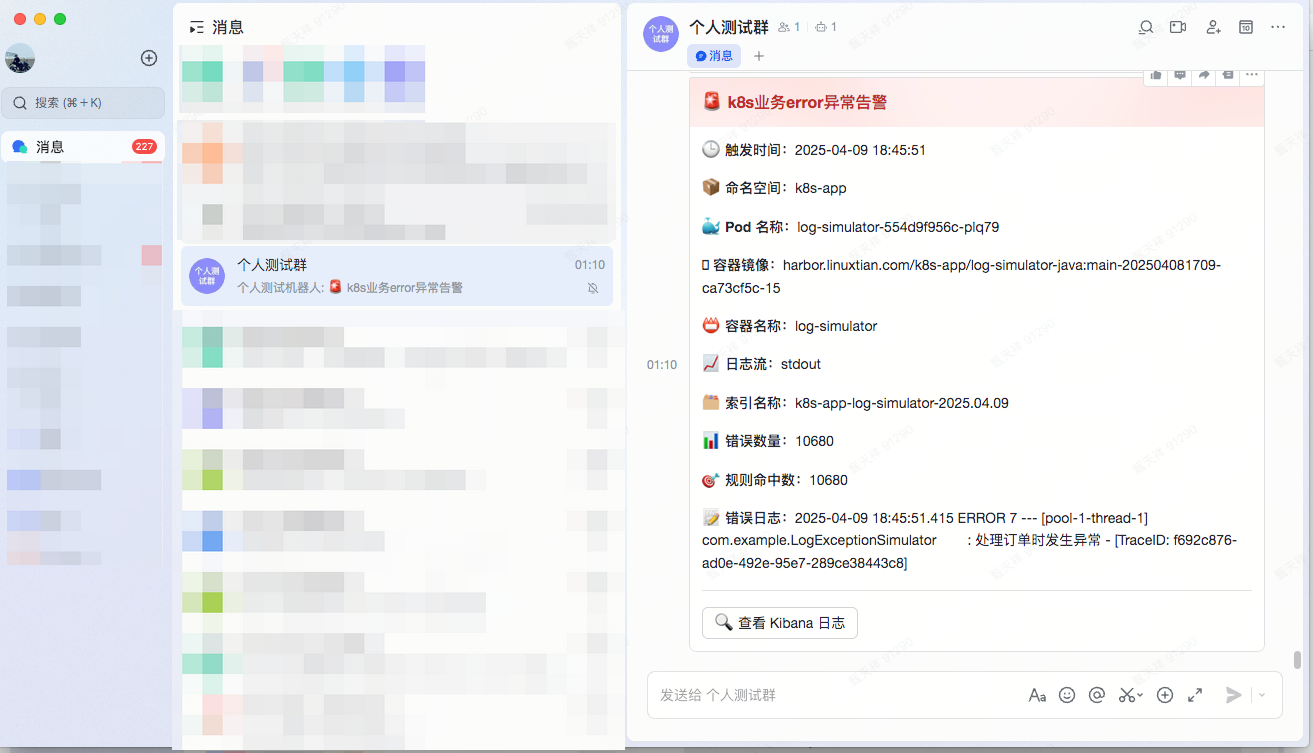

IV. Feishu alerts

1. Result

Clicking the Kibana button in the alert card jumps straight to Kibana.


V. Common Elasticsearch request commands

1. Check shard counts

[root@k8s-master EFK]# kubectl -n kube-logging exec -it es-cluster-0 -c elasticsearch -- curl -X GET "http://127.0.0.1:9200/_cat/indices/k8s-app-*?v&s=index"

2. Test creating a new index

[root@k8s-master EFK]# kubectl -n kube-logging exec -it es-cluster-0 -c elasticsearch -- curl -X PUT "http://127.0.0.1:9200/k8s-app-test-2025.08.07?pretty"

3. View index settings and related information

[root@k8s-master EFK]# kubectl -n kube-logging exec -it es-cluster-0 -c elasticsearch -- curl -X GET "http://127.0.0.1:9200/_settings?pretty"|grep k8s-app

4. Apply the ILM policy to existing indices

This adds an ILM policy (30-day retention) to all k8s-app-* indices. If the index lifecycle policy and index template were already in place when the indices were first created, you do not need to run the command below. It is a remedial step for existing indices that have no policy applied.

[root@k8s-master EFK]# # Add an ILM policy (30-day retention) to all k8s-app-* indices

[root@k8s-master EFK]# kubectl -n kube-logging exec -it es-cluster-0 -c elasticsearch -- curl -X PUT "http://127.0.0.1:9200/k8s-app-*/_settings" -H 'Content-Type: application/json' -d'

{

"settings": {

"index.lifecycle.name": "watch-history-ilm-policy",

"index.lifecycle.rollover_alias": "logs"

}

}

'

5. Check the ILM status of the indices to confirm the policy was applied

[root@k8s-master EFK]# kubectl -n kube-logging exec -it es-cluster-0 -c elasticsearch -- curl -X GET "http://127.0.0.1:9200/k8s-app-*/_ilm/explain?pretty"

{

"indices" : {

"k8s-app-2025.02.17" : {

"index" : "k8s-app-2025.02.17",

"managed" : true,

"policy" : "watch-history-ilm-policy",

"lifecycle_date_millis" : 1739781319447,

"phase" : "new",

"phase_time_millis" : 1739784554675,

"action" : "complete",

"action_time_millis" : 1739784554675,

"step" : "complete",

"step_time_millis" : 1739784554675

}

}

}

6. Check cluster health

[root@k8s-master EFK]# kubectl -n kube-logging exec -it es-cluster-0 -c elasticsearch -- curl -X GET "http://127.0.0.1:9200/_cluster/health?pretty"

7. Check index write pressure

[root@master1 fluentd]# kubectl -n kube-logging exec -it es-cluster-0 -c elasticsearch -- curl -X GET "http://127.0.0.1:9200/_cat/thread_pool/write?v"

node_name name active queue rejected

es-cluster-2 write 0 0 45

es-cluster-0 write 0 0 0

es-cluster-1 write 2 194 4002

8. Check disk pressure

[root@master1 fluentd]# kubectl -n kube-logging exec -it es-cluster-0 -c elasticsearch -- curl -X GET "http://127.0.0.1:9200/_cat/allocation?v"

shards disk.indices disk.used disk.avail disk.total disk.percent host ip node

36 999.4mb 3.1tb 40.3tb 43.4tb 7 10.233.64.46 10.233.64.46 es-cluster-2

37 1.1gb 2.5tb 40.9tb 43.4tb 5 10.233.123.178 10.233.123.178 es-cluster-1

37 1.1gb 2.2tb 33.9tb 36.2tb 6 10.233.81.123 10.233.81.123 es-cluster-0

9. View all thread pools (including search, bulk, index, etc.)

[root@k8s-app-1 ~]# kubectl -n kube-logging exec -it es-cluster-0 -c elasticsearch -- curl -X GET "http://localhost:9200/_cat/thread_pool?v"

node_name name active queue rejected

es-cluster-0 analyze 0 0 0

es-cluster-0 ccr 0 0 0

es-cluster-0 data_frame_indexing 0 0 0

es-cluster-0 fetch_shard_started 0 0 0

10. View index details

1. View log content with the _search API

# List all indices

[root@master1 fluentd]# kubectl -n kube-logging exec -it es-cluster-0 -c elasticsearch -- curl -X GET "127.0.0.1:9200/_cat/indices?v"

# View the mapping of a group of indices

[root@master1 fluentd]# kubectl -n kube-logging exec -it es-cluster-0 -c elasticsearch -- curl -X GET "http://127.0.0.1:9200/k8s-app-log-simulator-*/_mapping?pretty"

# View the logs in a specific index (first 10 documents)

kubectl -n kube-logging exec -it es-cluster-0 -c elasticsearch -- curl -X GET "http://127.0.0.1:9200/k8s-app-data-sync-job-2025.12.10/_search?pretty" -H 'Content-Type: application/json'

# Return more logs (for example, 100 documents)

kubectl -n kube-logging exec -it es-cluster-0 -c elasticsearch -- curl -X GET "http://127.0.0.1:9200/k8s-app-data-sync-job-2025.12.10/_search?size=100&pretty" -H 'Content-Type: application/json'

2. Use a more precise query

# Send an explicit query body (match_all here; swap in field-specific clauses as needed)

kubectl -n kube-logging exec -it es-cluster-0 -c elasticsearch -- curl -X GET "http://127.0.0.1:9200/k8s-app-data-sync-job-2025.12.10/_search?pretty" -H 'Content-Type: application/json' -d '

{

"query": {

"match_all": {}

},

"size": 20

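}'

3. View the index mapping (to learn the field structure)

# Check the mapping first to see which fields exist

kubectl -n kube-logging exec -it es-cluster-0 -c elasticsearch -- curl -X GET "http://127.0.0.1:9200/k8s-app-data-sync-job-2025.12.10/_mapping?pretty"

4. Filter the returned fields

# Return only specific fields (for example, the log message and the timestamp)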

kubectl -n kube-logging exec -it es-cluster-0 -c elasticsearch -- curl -X GET "http://127.0.0.1:9200/k8s-app-data-sync-job-2025.12.10/_search?pretty" -H 'Content-Type: application/json' -d '

{

"_source": ["message", "@timestamp", "level"],

"query": {

"match_all": {}

},

"size": 10,

"sort": [

{

"@timestamp": {

"order": "desc"

}

}

]

}'

5. Query by time range

# Query the logs from the last hour

kubectl -n kube-logging exec -it es-cluster-0 -c elasticsearch -- curl -X GET "http://127.0.0.1:9200/k8s-app-data-sync-job-2025.12.10/_search?pretty" -H 'Content-Type: application/json' -d '

{

"query": {

"range": {

"@timestamp": {

"gte": "now-1h"

}

}

},

"sort": [

{

"@timestamp": "desc"

}

],

"size": 50

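}'

11. Query logs by specific fields

"size": 100: return at most 100 matching log documents.

"must": every condition listed must match. Use it for exact filtering where all conditions have to hold.

"should": optional conditions. A match is preferred but not required on its own; use it to match any of several values.

"term": exact match, intended for keyword fields.

"match": full-text search on text fields such as message. The text is analyzed, so with the default analyzer matching is case-insensitive.

"minimum_should_match": 1: at least one should condition must match.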

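The way these clauses nest can be easier to see when the request body is assembled programmatically. The sketch below only builds the JSON body and sends nothing; `build_label_query` is a hypothetical helper, and the field name in the usage example is the one used in the queries in this section.

```python
import json


def build_label_query(label_field, label_values, message_text, size=100):
    """Build a bool query: (label is any of label_values) AND message matches."""
    # One term clause per accepted label value; they are OR-ed through should.
    should = [{"term": {label_field: value}} for value in label_values]
    return {
        "size": size,
        "query": {
            "bool": {
                "must": [
                    {"bool": {"should": should, "minimum_should_match": 1}},
                    {"match": {"message": message_text}},
                ]
            }
        },
    }


if __name__ == "__main__":
    body = build_label_query(
        "kubernetes_labels_k8s-app.keyword", ["log-simulator", "nginx"], "error"
    )
    print(json.dumps(body, indent=2))
```

The printed JSON can be passed to curl with `-d` in the same way as the hand-written bodies in this section.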
Query

Logs whose k8s-app label is log-simulator or nginx and whose level is ERROR:

[root@master1 fluentd]# kubectl -n kube-logging exec -it es-cluster-0 -c elasticsearch -- curl -X GET "http://127.0.0.1:9200/k8s-app-log-simulator-*/_search?pretty" -H 'Content-Type: application/json' -d'

{

"size": 100,

"query": {

"bool": {

"must": [

{

"bool": {

"should": [

{ "term": { "kubernetes_labels_k8s-app.keyword": "log-simulator" }},

{ "term": { "kubernetes_labels_k8s-app.keyword": "nginx" }}

],

"minimum_should_match": 1

}

},

{ "match": { "message": "error" }}

]

}

}

}'

Query

Logs whose k8s-app label is log-simulator, whose namespace is k8s-app, and whose level is INFO:

[root@master1 fluentd]# kubectl -n kube-logging exec -it es-cluster-0 -c elasticsearch -- curl -X GET "http://127.0.0.1:9200/k8s-app-log-simulator-*/_search?pretty" -H 'Content-Type: application/json' -d'

{

"size": 100,

"query": {

"bool": {

"must": [

{ "term": { "kubernetes_labels_k8s-app.keyword": "log-simulator" }},

{ "term": { "kubernetes_namespace_name.keyword": "k8s-app" }},

{ "match": { "message": "INFO" }}

]

}

}

}'

The same kind of query using query_string:

[root@master1 fluentd]# kubectl -n kube-logging exec -it es-cluster-0 -c elasticsearch -- curl -X GET "http://127.0.0.1:9200/k8s-app-log-simulator-*/_search?pretty" -H 'Content-Type: application/json' -d'

{

"query": {

"bool": {

"must": [

{

"bool": {

"should": [

{ "term": { "kubernetes_labels_k8s-app.keyword": "nginx" }},

{ "term": { "kubernetes_labels_k8s-app.keyword": "log-simulator" }}

],

"minimum_should_match": 1

}

},

{

"query_string": {

"query": "message:(*error* OR *Exception* OR *stacktrace*)",

"analyze_wildcard": true

}

}

]

}

}

}'

12. Delete indices

List the indices:

[root@master1 fluentd]# kubectl -n kube-logging exec -it es-cluster-0 -c elasticsearch -- curl -X GET "http://127.0.0.1:9200/_cat/indices/k8s-app-*?v"

health status index uuid pri rep docs.count docs.deleted store.size pri.store.size

green open k8s-app-2025.03.23 fsMZv42oTz-RidASGIme8w 1 1 26564178 0 36.8gb 18.4gb

green open k8s-app-2025.03.24 Yq2GtGhBQcWzJOsRKCPWlQ 1 1 31224752 0 43.4gb 21.6gb

Delete the indices:

[root@master1 fluentd]# kubectl -n kube-logging exec -it es-cluster-0 -c elasticsearch -- curl -X DELETE "http://127.0.0.1:9200/k8s-app-*"

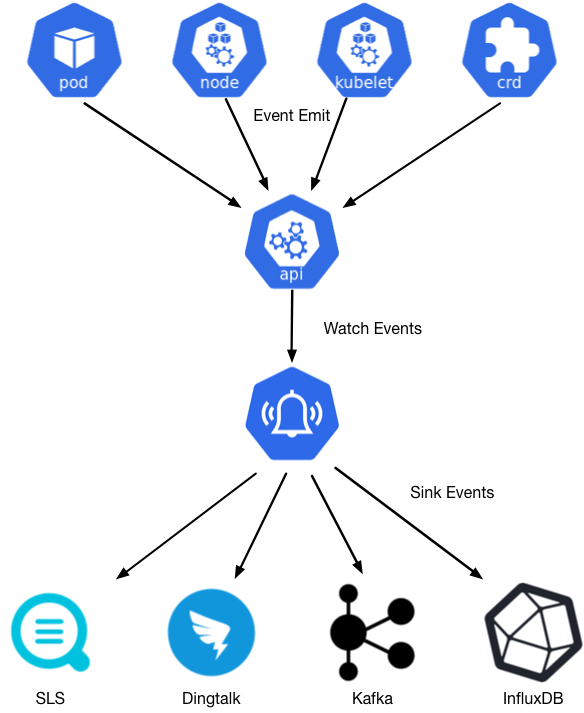

{"acknowledged":true}

VI. Deploy the kube-eventer event collector

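1. Introduction

kube-eventer is an open-source Kubernetes event monitoring and alerting tool from Alibaba Cloud. It collects, filters, and forwards the events generated in a Kubernetes cluster, so that you notice state changes (Pod crashes, scheduling failures, and so on) as they happen, and it can deliver them to a range of alerting channels.

2. Concept

Kubernetes is designed around a state machine. A Normal event is emitted when a resource transitions to its desired state, and a Warning event when it transitions to an unexpected state. kube-eventer helps you diagnose, analyze, and alert on these events.

3. Core features

Event collection

Automatically captures the events generated in the cluster, such as Warning-level events, for example:

Pod startup failure, OOMKilled

Insufficient node resources (InsufficientCPU)

Image pull failure (ErrImagePull)

Event filtering and aggregation

Filters by event type, namespace, resource object, and other conditions.

Aggregates repeated events to avoid alert storms.

Multiple output channels

Forwards events to the following targets:

Alerting systems: Slack, DingTalk, WeCom (企业微信), Webhook

Logging systems: Elasticsearch, Kafka, InfluxDB

Monitoring platforms: Prometheus, Alibaba Cloud SLS

Lightweight and easy to deploy

Runs as a DaemonSet or a Deployment with a low resource footprint.

Configured through a ConfigMap or command-line arguments.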
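To make the filtering and aggregation idea concrete, here is a small standalone sketch. It is not kube-eventer's implementation (kube-eventer is a separate tool configured through sink parameters); the dictionary shape of an event and the helper names `filter_events` and `aggregate_events` are assumptions made for illustration.

```python
from collections import Counter


def filter_events(events, event_type="Warning", namespace=None):
    """Keep events of the given type, optionally restricted to one namespace."""
    return [
        e for e in events
        if e["type"] == event_type
        and (namespace is None or e["namespace"] == namespace)
    ]


def aggregate_events(events):
    """Collapse repeated events into (namespace, name, reason) -> count."""
    return Counter((e["namespace"], e["name"], e["reason"]) for e in events)


if __name__ == "__main__":
    sample = [
        {"type": "Warning", "namespace": "default", "name": "web-1", "reason": "ErrImagePull"},
        {"type": "Warning", "namespace": "default", "name": "web-1", "reason": "ErrImagePull"},
        {"type": "Normal", "namespace": "default", "name": "web-1", "reason": "Scheduled"},
        {"type": "Warning", "namespace": "kube-system", "name": "dns-0", "reason": "OOMKilled"},
    ]
    for key, count in aggregate_events(filter_events(sample)).items():
        print(key, count)
```

Sending one alert per aggregated key, with the count attached, is what keeps a Pod stuck in a pull loop from producing one message per retry.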

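4. Use cases

Real-time troubleshooting: quickly spot events such as Pods restarting repeatedly or node failures.

Alert integration: feed Kubernetes events into your existing operations alerting.

Historical analysis: store events in a logging system for later auditing or analysis.

5. Deployment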

[root@k8s-master1 kube-event]# cat deployment.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    name: kube-eventer
  name: kube-eventer
  namespace: kube-system
spec:
  replicas: 1
  selector:
    matchLabels:
      app: kube-eventer
  template:
    metadata:
      labels:
        app: kube-eventer
      annotations:
        scheduler.alpha.kubernetes.io/critical-pod: ''
    spec:
      dnsPolicy: ClusterFirstWithHostNet
      serviceAccount: kube-eventer
      containers:
        - image: registry.aliyuncs.com/acs/kube-eventer:v1.2.7-ca03be0-aliyun
          name: kube-eventer
          command:
            - "/kube-eventer"
            - "--source=kubernetes:https://kubernetes.default"
            - "--sink=webhook:https://open.feishu.cn/open-apis/bot/v2/hook/xxxxxxx-e043-4a64-975d-xxxxxxxx?level=Warning&method=POST&header=Content-Type=application/json&custom_body_configmap=custom-body&custom_body_configmap_namespace=kube-system"
            - "--sink=elasticsearch:http://elasticsearch.kube-logging:9200?sniff=false&index=kube-events&ver=7"
          env:
            # If TZ is assigned, set the TZ value as the time zone
            - name: TZ
              value: "Asia/Shanghai"
          volumeMounts:
            - name: localtime
              mountPath: /etc/localtime
              readOnly: true
            - name: zoneinfo
              mountPath: /usr/share/zoneinfo
              readOnly: true
          resources:
            requests:
              cpu: 100m
              memory: 100Mi
            limits:
              cpu: 500m
              memory: 250Mi
      volumes:
        - name: localtime
          hostPath:
            path: /etc/localtime
        - name: zoneinfo
          hostPath:
            path: /usr/share/zoneinfo

---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: kube-eventer
rules:
  - apiGroups:
      - ""
    resources:
      - configmaps
      - events
    verbs:
      - get
      - list
      - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: kube-eventer
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: kube-eventer
subjects:
  - kind: ServiceAccount
    name: kube-eventer
    namespace: kube-system
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: kube-eventer
  namespace: kube-system

---
apiVersion: v1
kind: ConfigMap
metadata:
  name: custom-body
  namespace: kube-system
data:
  content: '{
    "msg_type": "post",
    "content": {
      "post": {
        "zh_cn": {
          "title": "🚨 Kubernetes event alert",
          "content": [
            [{
              "tag": "text",
              "text": "📌 Event type: {{.Type}}"
            }],
            [{
              "tag": "text",
              "text": "🖥 Resource name: {{.InvolvedObject.Name}}"
            }],
            [{
              "tag": "text",
              "text": "🏷 Namespace: {{.InvolvedObject.Namespace}}"
            }],
            [{
              "tag": "text",
              "text": "🔧 Resource kind: {{.InvolvedObject.Kind}}"
            }],
            [{
              "tag": "text",
              "text": "⚠️ Reason: {{.Reason}}"
            }],
            [{
              "tag": "text",
              "text": "⏰ Event time: {{.LastTimestamp}}"
            }],
            [{
              "tag": "text",
              "text": "💬 Details: {{.Message}}"
            }],
            [{
              "tag": "text",
              "text": "----------"
            }],
            [{
              "tag": "text",
              "text": "🔔 Reminder: please handle this alert promptly to keep the Kubernetes cluster running stably."
            }],
            [{
              "tag": "text",
              "text": "Monitoring alert from kube-eventer."
            }]
          ]
        }
      }
    }
    }'

6. Verifying the result

[root@k8s-master1 kube-event]# kubectl get pods -n kube-system -l app=kube-eventer
NAME                           READY   STATUS    RESTARTS   AGE
kube-eventer-9c4ff86cc-gvr67   1/1     Running   0          14m

[root@k8s-master1 kube-event]# kubectl -n kube-system logs -f -l app=kube-eventer
I0408 13:59:00.000188 1 manager.go:102] Exporting 0 events
I0408 13:59:30.000034 1 manager.go:102] Exporting 0 events
I0408 14:00:00.000140 1 manager.go:102] Exporting 0 events
I0408 14:00:30.000456 1 manager.go:102] Exporting 0 events
I0408 14:01:00.000192 1 manager.go:102] Exporting 0 events
I0408 14:01:30.000158 1 manager.go:102] Exporting 0 events
I0408 14:02:00.000124 1 manager.go:102] Exporting 0 events
I0408 14:02:30.000341 1 manager.go:102] Exporting 0 events
I0408 14:03:00.000141 1 manager.go:102] Exporting 0 events
I0408 14:03:30.001138 1 manager.go:102] Exporting 0 events
I0408 14:04:00.000124 1 manager.go:102] Exporting 0 events
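The log above only shows empty export cycles, so the message body in the custom-body ConfigMap has not actually been exercised yet. As a rough offline check, the following Python sketch fills the `{{.Field}}` placeholders of a shortened copy of that template with a made-up event and confirms that the result is still valid JSON. This is only an approximation under stated assumptions: kube-eventer is written in Go and renders the template itself, the regex substitution here merely imitates the simple `{{.A.B}}` lookups used in the ConfigMap, the `sample_event` values are invented, and the JSON escaping of inserted values is this sketch's own choice.

```python
import json
import re

# Shortened copy of the custom-body template, using the same placeholder syntax.
TEMPLATE = '''{
  "msg_type": "post",
  "content": {"post": {"zh_cn": {
    "title": "Kubernetes event alert",
    "content": [
      [{"tag": "text", "text": "Type: {{.Type}}"}],
      [{"tag": "text", "text": "Resource: {{.InvolvedObject.Name}}"}],
      [{"tag": "text", "text": "Reason: {{.Reason}}"}],
      [{"tag": "text", "text": "Message: {{.Message}}"}]
    ]
  }}}
}'''

PLACEHOLDER = re.compile(r"\{\{\s*\.([A-Za-z.]+)\s*\}\}")


def lookup(event, dotted):
    """Resolve a dotted path such as 'InvolvedObject.Name' in a nested dict."""
    value = event
    for part in dotted.split("."):
        value = value[part]
    return value


def render(template, event):
    """Substitute each {{.Path}} placeholder, JSON-escaping the inserted text."""
    def replace(match):
        text = str(lookup(event, match.group(1)))
        return json.dumps(text, ensure_ascii=False)[1:-1]  # escape, drop outer quotes
    return PLACEHOLDER.sub(replace, template)


sample_event = {  # hypothetical event, shaped like a Kubernetes Event object
    "Type": "Warning",
    "Reason": "BackOff",
    "Message": 'Back-off restarting failed container "app"',
    "InvolvedObject": {"Name": "web-0", "Namespace": "default", "Kind": "Pod"},
}

body = json.loads(render(TEMPLATE, sample_event))  # raises if the JSON is malformed
for row in body["content"]["post"]["zh_cn"]["content"]:
    print(row[0]["text"])
```

If `json.loads` raises here, the template has a structural problem (for example a missing bracket) that would also produce an invalid webhook payload. The sample message deliberately contains double quotes, since event messages with quotes are a typical way for a hand-written JSON body to break.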