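Deploying GitLab Runner on Kubernetes

I. Overview

GitLab CI

GitLab CI/CD is part of GitLab and covers the workflow from planning through deployment. It ships with both the open-source GitLab Community Edition and the proprietary GitLab Enterprise Edition. You can add as many compute nodes as you need; each build can be split into multiple jobs, and those jobs can run in parallel on multiple machines.

GitLab CI is lightweight and needs no complicated installation. It is simple to configure and integrates with GitLab directly. Build logs are shown in real time and are easy to read, and the UI is pleasant to use. Pipelines are configured in YAML, so anyone can pick it up quickly. GitLab CI is particularly convenient for DevOps work, for example in agile teams where the same person develops and operates the service.

In most cases building a project consumes a lot of system resources, and if GitLab itself ran the builds its performance would drop sharply. GitLab CI's main job is to manage the build state of each project, so handing the resource-hungry builds to a separate GitLab Runner works much better. More importantly, GitLab Runner can be installed on different machines, even your own workstation, so builds do not affect GitLab itself.

Since GitLab 8.0, GitLab CI has been integrated into GitLab. We only need to add a .gitlab-ci.yml file to the project and run a Runner to get continuous integration.

GitLab Runner

GitLab Runner is an open-source project that runs your jobs and sends the results back to GitLab. It is used together with GitLab CI, the open-source continuous integration service bundled with GitLab that coordinates the jobs.

GitLab Runner is written in Go and can run as a single binary with no language-specific requirements.

It is designed to run on GNU/Linux, macOS, and Windows. Note: if you want to use Docker, GitLab Runner requires Docker v1.13.0 or later.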
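The Docker version requirement above can be checked before installing a Runner. The following is a minimal sketch, not part of the original setup: `version_ge` is a hypothetical helper, it assumes `sort -V` (version sort) is available, and the installed version is a literal so the snippet runs without Docker.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeeds when version $1 is greater than or equal to $2.
# Assumes `sort -V` (version sort, GNU coreutils) is available.
version_ge() {
  [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n1)" = "$2" ]
}

# On a real host this value could come from:
#   docker version --format '{{.Server.Version}}'
# A literal is used here so the sketch runs without Docker.
installed="20.10.9"

if version_ge "$installed" "1.13.0"; then
  echo "Docker ${installed} satisfies the 1.13.0 minimum"
else
  echo "Docker ${installed} is too old for GitLab Runner"
fi
```

The comparison sorts the two version strings and checks that the required minimum sorts first, which avoids hand-written parsing of the dotted components.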

II. Deploying with Helm

1. Deploy MinIO storage

[root@k8s-app-1 minio]# cat deployment.yaml

apiVersion: v1

kind: PersistentVolumeClaim

metadata:

name: minio-runner-pvc

namespace: dev-ops

spec:

storageClassName: "openebs-hostpath"

accessModes:

- ReadWriteOnce

resources:

requests:

storage: 50Gi

---

apiVersion: v1

kind: Service

metadata:

name: minio-runner

namespace: dev-ops

spec:

type: NodePort

ports:

- name: 9090-tcp

protocol: TCP

port: 9090

targetPort: 9090

nodePort: 32307

- name: 9000-tcp

protocol: TCP

port: 9000

targetPort: 9000

nodePort: 32308

selector:

app: minio

---

apiVersion: networking.k8s.io/v1

kind: Ingress

metadata:

name: minio-runner

namespace: dev-ops

annotations:

nginx.ingress.kubernetes.io/proxy-body-size: "0"

labels:

name: minio-runner

spec:

ingressClassName: nginx

rules:

- host: k8s-minio.localhost.com

http:

paths:

- pathType: Prefix

path: "/"

backend:

service:

name: minio-runner

port:

number: 9090

---

apiVersion: apps/v1

kind: Deployment

metadata:

labels:

name: minio

name: minio-runner

namespace: dev-ops

spec:

replicas: 1

selector:

matchLabels:

name: minio

template:

metadata:

labels:

app: minio

name: minio

spec:

containers:

- name: minio

image: minio/minio:RELEASE.2022-12-07T00-56-37Z

imagePullPolicy: IfNotPresent

command:

- "/bin/bash"

- "-c"

- |

minio server /data --console-address :9090 --address :9000

ports:

- containerPort: 9090

name: console-address

- containerPort: 9000

name: address

env:

- name: MINIO_ACCESS_KEY

value: "admin"

- name: MINIO_SECRET_KEY

value: "admin@123456"

readinessProbe:

failureThreshold: 3

httpGet:

path: /minio/health/ready

port: 9000

scheme: HTTP

initialDelaySeconds: 10

periodSeconds: 10

successThreshold: 1

timeoutSeconds: 5

volumeMounts:

- mountPath: /data

name: data

volumes:

- name: data

persistentVolumeClaim:

claimName: minio-runner-pvc

1. Pull the Helm chart

$ helm repo add gitlab https://charts.gitlab.io

$ helm repo list

NAME URL

gitlab https://charts.gitlab.io/

$ helm pull gitlab/gitlab-runner

$ tar xvf gitlab-runner-0.71.0.tgz

2. Adjust the values

$ vim values.yaml

image:

registry: registry.gitlab.com

image: gitlab-org/gitlab-runner

useTini: false

imagePullPolicy: IfNotPresent

livenessProbe: {}

readinessProbe: {}

replicas: 1

# URL of the GitLab instance

gitlabUrl: https://k8s-gitlab.linuxtian.com

# Runner token

runnerRegistrationToken: "glrt-7QQvoewUEWxxS9CXKymR"

unregisterRunners: true

terminationGracePeriodSeconds: 3600

concurrent: 10

shutdown_timeout: 0

checkInterval: 30

logLevel: info

sessionServer:

enabled: false

serviceType: LoadBalancer

rbac:

create: true

generatedServiceAccountName: ""

rules:

- resources: ["events"]

verbs: ["list", "watch"]

- resources: ["namespaces"]

verbs: ["create", "delete"]

- resources: ["pods"]

verbs: ["create","delete","get"]

- apiGroups: [""]

resources: ["pods/attach","pods/exec"]

verbs: ["get","create","patch","delete"]

- apiGroups: [""]

resources: ["pods/log"]

verbs: ["get","list"]

- resources: ["secrets"]

verbs: ["create","delete","get","update"]

- resources: ["serviceaccounts"]

verbs: ["get"]

- resources: ["services"]

verbs: ["create","get"]

clusterWideAccess: true

serviceAccountAnnotations: {}

podSecurityPolicy:

enabled: false

resourceNames:

- gitlab-runner

imagePullSecrets: []

serviceAccount:

create: true

name: ""

annotations: {}

imagePullSecrets: []

metrics:

enabled: false

portName: metrics

port: 9252

serviceMonitor:

enabled: false

service:

enabled: false

type: ClusterIP

runners:

config: |

[[runners]]

output_limit = 512000

[runners.kubernetes]

namespace = ""

image= "ubuntu:22.04"

privileged = true

cpu_limit = "3"

memory_limit = "5Gi"

image_pull_secrets = ["harbor-registry-secret"]

[runners.cache]

Type = "s3"

Path = "k8s-gitlab"

Shared = true

[runners.cache.s3]

ServerAddress = "minio-runner:9000"

AccessKey = "QZsDibwKHtf73Ql2"

SecretKey = "dr6KufmZ1bZ8JC06JYFOotA5gYdXI3Gh"

BucketName = "gitlab-runners"

BucketLocation = "k8s-gitlab"

Insecure = true

[[runners.kubernetes.volumes.host_path]]

name = "docker"

mount_path = "/var/run/docker.sock"

host_path = "/var/run/docker.sock"

[[runners.kubernetes.volumes.host_path]]

name = "host-time"

mount_path = "/etc/localtime"

host_path = "/etc/localtime"

configPath: ""

# Runner tags

tags: "k8s-gitlab"

name: "kubernetes-runner"

cache: {}

securityContext:

allowPrivilegeEscalation: false

readOnlyRootFilesystem: false

runAsNonRoot: true

privileged: false

capabilities:

drop: ["ALL"]

strategy: {}

podSecurityContext:

runAsUser: 100

fsGroup: 65533

resources:

limits:

memory: 8Gi

cpu: 4

ephemeral-storage: 4Gi

requests:

memory: 1Gi

cpu: 500m

ephemeral-storage: 800Mi

affinity: {}

topologySpreadConstraints: {}

runtimeClassName: ""

nodeSelector: {}

tolerations: []

extraEnv: {}

extraEnvFrom: {}

hostAliases: []

deploymentAnnotations: {}

deploymentLabels: {}

deploymentLifecycle: {}

podAnnotations: {}

podLabels: {}

priorityClassName: ""

secrets: []

configMaps: {}

volumeMounts: []

volumes: []

extraObjects: []

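3. Configuration reference

| Setting | Description |
| --- | --- |
| [[runners]] | Basic Runner configuration section. |
| output_limit | Maximum log size of a single job, in kilobytes. Set to 512000 here (about 500 MB). |
| [runners.kubernetes] | Settings for the Kubernetes executor. |
| namespace | Kubernetes namespace for the Runner; Helm normally substitutes the actual namespace. |
| image | Default Docker image for job containers, here ubuntu:22.04. |
| privileged | Whether job containers may run in privileged mode; true allows it. Typically needed for Docker-in-Docker. |
| cpu_limit | Maximum CPU for the build container, here 3 cores. |
| memory_limit | Maximum memory for the build container, here 5Gi. |
| image_pull_secrets | Secrets used to pull images, here the Secret named harbor-registry-secret. |
| [runners.cache] | Cache backend used by the Runner, usually to speed up builds. |
| Type | Cache storage type. s3 means an S3-compatible service (such as MinIO or AWS S3). |
| Path | Path prefix under which the Runner stores cache data, here k8s-gitlab. |
| Shared | Whether the cache is shared. When true, multiple Runners can share it. |
| [runners.cache.s3] | Settings specific to S3 storage. |
| ServerAddress | Address of the S3 service, here minio-runner:9000, which points at the MinIO instance. |
| AccessKey | Access key for the S3 service. The value shown is a sample key. |
| SecretKey | Secret key for the S3 service. |
| BucketName | Name of the bucket, here gitlab-runners. |
| BucketLocation | Region of the S3 bucket, here k8s-gitlab. |
| Insecure | Whether to use an insecure connection. true means plain HTTP, false means HTTPS. |
| [[runners.kubernetes.volumes.host_path]] | Host path volumes mounted into job pods. |
| name | Volume name, used to tell the mounted volumes apart. |
| mount_path | Mount point inside the container, here /var/run/docker.sock and /etc/localtime. |
| host_path | Path on the host that is mounted into the container. |
| tags | Runner tags, here k8s-gitlab. Jobs in the GitLab CI/CD configuration use them to select this Runner. |
| name | Runner name, used to identify the Runner. |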
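Since `output_limit` is expressed in kilobytes, a quick conversion makes the configured values easier to judge. This is only an illustrative sketch; `kb_to_mib` is a hypothetical helper name, and the 4096 figure is GitLab Runner's documented default.

```shell
#!/usr/bin/env bash
# Hypothetical helper: convert a kilobyte count to whole mebibytes.
kb_to_mib() {
  echo $(( $1 / 1024 ))
}

# Value used in the Helm values.yaml above.
echo "output_limit=512000  -> $(kb_to_mib 512000) MiB"
# Value used later by RUNNER_OUTPUT_LIMIT in the YAML-based deployment.
echo "output_limit=5120000 -> $(kb_to_mib 5120000) MiB"
# GitLab Runner's default when the key is omitted.
echo "output_limit=4096    -> $(kb_to_mib 4096) MiB"
```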

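4. Deploy

$ helm upgrade --install k8s-runner -n dev-ops ./ -f values.yaml

III. Deploying with YAML manifests

Both the manifests below and the Helm deployment above use the Kubernetes executor: when a pipeline starts, Kubernetes launches a pod for each job to run it, which is very convenient.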

1. Using the Kubernetes executor

1.1 Configure the token

[root@k8s-master1 runner]# cat gitlab-runner-secrets.yaml

apiVersion: v1
kind: Secret
metadata:
  name: gitlab-runner-secrets
  namespace: dev-ops
  labels:
    app: gitlab-ci-runner
data:
  # echo -n "glrt-7QQvoewUEWxxS9CXKymR" | base64
  GITLAB_CI_TOKEN: Z2xydC1CRHd1X2ppaUZjWVJIMmd0MnZmNQ==
  CACHE_S3_ACCESS_KEY: V1lFVE1kcTdNaWt2RlRqQw==
  CACHE_S3_SECRET_KEY: OW13VXFjWVVKMWtIRXlBN1RNWjBXQk13RFRLVmQ2NHM=

1.2 Add the ca.crt certificate

My GitLab instance is served over HTTPS, so I mount the domain's root CA certificate into the container.

[root@k8s-master1 runner]# kubectl -n dev-ops create secret generic gitlab-ca-cert \
  --from-file=ca.crt=./ca.crt

1.3 Create the Harbor image pull secret

[root@k8s-master1 runner]# kubectl create secret docker-registry harbor-registry-secret \
  --namespace=dev-ops \
  --docker-server=https://harbor.tianxiang.love:30443 \
  --docker-username=admin \
  --docker-password='Harbor12345' \
  --docker-email=harbor@linuxtian.com

1.4 Create the time zone ConfigMap

[root@k8s-master1 runner]# kubectl -n dev-ops create configmap tz-config --from-literal=TZ=Asia/Shanghai

1.5 Configure the Runner variables

[root@k8s-master1 runner]# cat gitlab-runner-configmap.yaml

kind: ConfigMap
metadata:
  labels:
    app: gitlab-ci-runner
  name: gitlab-ci-runner-env
  namespace: dev-ops
apiVersion: v1
data:
  CACHE_TYPE: "s3"
  CACHE_SHARED: "true"
  CACHE_S3_SERVER_ADDRESS: "minio-runner:9000"
  CACHE_S3_BUCKET_NAME: "gitlab-runners"
  CACHE_S3_INSECURE: "true"
  CACHE_PATH: "k8s-gitlab"
  CACHE_S3_BUCKET_LOCATION: "k8s-gitlab"
  REGISTER_NON_INTERACTIVE: "true"
  REGISTER_LOCKED: "false"
  METRICS_SERVER: "0.0.0.0:9100"
  CI_SERVER_URL: "https://k8s-gitlab.linuxtian.com/ci"
  # GitLab is served over HTTPS, so the domain's root CA certificate is mounted into the container
  CI_SERVER_TLS_CA_FILE: "/etc/gitlab-runner/certs/ca.crt"
  RUNNER_REQUEST_CONCURRENCY: "4"
  # Use the Kubernetes executor
  RUNNER_EXECUTOR: "kubernetes"
  KUBERNETES_NAMESPACE: "dev-ops"
  KUBERNETES_PRIVILEGED: "true"
  # Pod resource limits
  KUBERNETES_CPU_LIMIT: "0"
  KUBERNETES_MEMORY_LIMIT: "0"
  KUBERNETES_SERVICE_CPU_LIMIT: "0"
  KUBERNETES_SERVICE_MEMORY_LIMIT: "0"
  KUBERNETES_HELPER_CPU_LIMIT: "0"
  KUBERNETES_HELPER_MEMORY_LIMIT: "0"
  KUBERNETES_PULL_POLICY: "if-not-present"
  KUBERNETES_IMAGE_PULL_SECRETS: "harbor-registry-secret"
  # Helper container image; an empty value means the default image
  HELPER_IMAGE: "docker.io/gitlab/gitlab-runner-helper:x86_64-v16.10.0"
  KUBERNETES_TERMINATIONGRACEPERIODSECONDS: "10"
  KUBERNETES_POLL_INTERVAL: "5"
  KUBERNETES_POLL_TIMEOUT: "360"
  RUNNER_TAG_LIST: "k8s-runner"
  RUNNER_NAME: "k8s-runner"
  RUNNER_OUTPUT_LIMIT: "5120000"

For more environment variables, run gitlab-runner register --help inside the container.
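The run.sh script in section 1.7 reads these variables with `${VAR:-default}` expansions, so it helps to know when the default actually applies. The sketch below is illustrative only; `with_default` is a hypothetical helper that takes the value as an argument so the example does not depend on the environment.

```shell
#!/usr/bin/env bash
# Hypothetical helper mirroring the "${VAR:-default}" pattern used by run.sh.
with_default() {
  local value="$1" default="$2"
  echo "${value:-$default}"
}

with_default ""  "3"   # unset or empty   -> the default 3 is used
with_default "0" "3"   # "0" is non-empty -> the value stays 0
with_default "2" "3"   # explicit value   -> 2
```

In other words, the "0" values set for the resource limits in this ConfigMap are passed through to config.toml as written; the script's fallback values only take effect when a variable is missing or empty.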

1.6 Configure RBAC

[root@k8s-master1 runner]# cat gitlab-runner-rbac.yaml

apiVersion: v1
kind: ServiceAccount
metadata:
  name: gitlab-ci
  namespace: dev-ops
---
kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: gitlab-ci
  namespace: dev-ops
rules:
  - apiGroups: [""]
    resources: ["*"]
    verbs: ["*"]
---
kind: RoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: gitlab-ci
  namespace: dev-ops
subjects:
  - kind: ServiceAccount
    name: gitlab-ci
    namespace: dev-ops
roleRef:
  kind: Role
  name: gitlab-ci
  apiGroup: rbac.authorization.k8s.io

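1.7 Configure the Runner startup and registration script

By default, the Runner unregisters itself only when the Pod is terminated normally by Kubernetes (TERM signal). If the Pod is force-killed (SIGKILL), the Runner does not unregister itself, and the orphaned Runner has to be cleaned up manually.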
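The difference between the two signals can be reproduced with a small sketch. Everything here is illustrative: `start_child` and `stop_and_report` are hypothetical helpers, and writing a marker file stands in for the real `gitlab-runner unregister` call.

```shell
#!/usr/bin/env bash
# Start a child shell whose TERM trap writes a marker file (the "cleanup").
# The child's PID is stored in the global CHILD_PID.
start_child() {
  bash -c 'trap "echo unregistered > \"$0\"; exit 0" TERM
           while :; do sleep 0.1; done' "$1" >/dev/null 2>&1 &
  CHILD_PID=$!
}

# Send a signal, wait for the child to exit, and report whether cleanup ran.
stop_and_report() {
  local signal="$1" marker="$2"
  sleep 0.5                              # give the child time to install its trap
  kill "-$signal" "$CHILD_PID"
  wait "$CHILD_PID" 2>/dev/null || true  # a killed child returns non-zero
  if [ -f "$marker" ]; then echo "cleanup ran"; else echo "cleanup skipped"; fi
}

workdir=$(mktemp -d)
start_child "$workdir/term"; stop_and_report TERM "$workdir/term"
start_child "$workdir/kill"; stop_and_report KILL "$workdir/kill"
rm -rf "$workdir"
```

SIGKILL cannot be trapped, so the second child never gets a chance to run its cleanup; this is the case in which a stale Runner entry remains in GitLab.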

[root@k8s-master1 runner]# cat gitlab-runner-scripts-configmap.yaml

kind: ConfigMap

metadata:

labels:

app: gitlab-ci-runner

name: gitlab-ci-runner-scripts

namespace: dev-ops

apiVersion: v1

data:

run.sh: |

#!/bin/bash

CONFIG_FILE="/etc/gitlab-runner/config.toml"

RUNNER_BIN="/usr/bin/gitlab-runner"

# 注销 runner

unregister() {

kill %1

echo "注销 runner ${RUNNER_NAME} ..."

gitlab-runner unregister -t "$(gitlab-runner list 2>&1 | tail -n1 | awk '{print $4}' | cut -d'=' -f2)" -n ${RUNNER_NAME}

exit $?

}

# 设置信号捕获

trap 'unregister' EXIT HUP INT QUIT PIPE TERM

echo "注册 runner ${RUNNER_NAME} ..."

if [[ -n "${CI_SERVER_TLS_CA_FILE}" ]]; then

echo "使用 Gitlab CA 根证书注册 ..."

${RUNNER_BIN} register \

--non-interactive \

--url "${CI_SERVER_URL}" \

--registration-token "${GITLAB_CI_TOKEN}" \

--executor "${RUNNER_EXECUTOR:-shell}" \

--tls-ca-file "${CI_SERVER_TLS_CA_FILE}" \

--description "${RUNNER_NAME}"

cp "${CI_SERVER_TLS_CA_FILE}" /etc/pki/ca-trust/source/anchors/

update-ca-trust extract

else

echo "使用默认方式注册 ..."

${RUNNER_BIN} register \

--non-interactive \

--url "${CI_SERVER_URL}" \

--registration-token "${GITLAB_CI_TOKEN}" \

--executor "${RUNNER_EXECUTOR:-shell}" \

--description "${RUNNER_NAME}"

fi

# 配置文件

cat > "$CONFIG_FILE" <<EOF

concurrent = ${RUNNER_REQUEST_CONCURRENCY:-4}

check_interval = 0

shutdown_timeout = 0

[session_server]

session_timeout = 1800

[[runners]]

name = "${RUNNER_NAME}"

output_limit = ${RUNNER_OUTPUT_LIMIT:-5120000}

request_concurrency = ${RUNNER_REQUEST_CONCURRENCY:-4}

url = "${CI_SERVER_URL}"

token = "${GITLAB_CI_TOKEN}"

# 使用 kubernetes 为执行器

executor = "${RUNNER_EXECUTOR}"

# 由于我 gitlab 使用了 https 协议,所以我把域名根证书挂载到容器中了

tls-ca-file = "${CI_SERVER_TLS_CA_FILE}"

[runners.cache]

Type = "${CACHE_TYPE:-s3}"

Path = "${CACHE_PATH:-k8s-gitlab}"

Shared = ${CACHE_SHARED:-true}

[runners.cache.s3]

ServerAddress = "${CACHE_S3_SERVER_ADDRESS}"

AccessKey = "${CACHE_S3_ACCESS_KEY}"

SecretKey = "${CACHE_S3_SECRET_KEY}"

BucketName = "${CACHE_S3_BUCKET_NAME}"

BucketLocation = "${CACHE_S3_BUCKET_LOCATION}"

Insecure = ${CACHE_S3_INSECURE:-true}

[runners.kubernetes]

host = ""

bearer_token_overwrite_allowed = false

image = ""

namespace = "${KUBERNETES_NAMESPACE:-dev-ops}"

privileged = ${KUBERNETES_PRIVILEGED:-true}

cpu_limit = "${KUBERNETES_CPU_LIMIT:-3}"

memory_limit = "${KUBERNETES_MEMORY_LIMIT:-4Gi}"

service_cpu_limit = "${KUBERNETES_SERVICE_CPU_LIMIT:-3}"

service_memory_limit = "${KUBERNETES_SERVICE_MEMORY_LIMIT:-4Gi}"

helper_cpu_limit = "${KUBERNETES_HELPER_CPU_LIMIT:-500m}"

helper_memory_limit = "${KUBERNETES_HELPER_MEMORY_LIMIT:-500Mi}"

pull_policy = "${KUBERNETES_PULL_POLICY:-if-not-present}"

image_pull_secrets = ["${KUBERNETES_IMAGE_PULL_SECRETS:-}"]

helper_image = "${HELPER_IMAGE:-registry.gitlab.com/gitlab-org/gitlab-runner/gitlab-runner-helper:x86_64-v16.10.0}"

terminationGracePeriodSeconds = ${KUBERNETES_TERMINATIONGRACEPERIODSECONDS:-10}

poll_interval = ${KUBERNETES_POLL_INTERVAL:-5}

poll_timeout = ${KUBERNETES_POLL_TIMEOUT:-360}

pod_labels_overwrite_allowed = ""

service_account_overwrite_allowed = ""

pod_annotations_overwrite_allowed = ""

[runners.kubernetes.container_env]

"TZ" = "Asia/Shanghai"

[runners.kubernetes.pod_security_context]

[runners.kubernetes.init_permissions_container_security_context]

[runners.kubernetes.build_container_security_context]

[runners.kubernetes.helper_container_security_context]

[runners.kubernetes.service_container_security_context]

[runners.kubernetes.volumes]

[runners.kubernetes.dns_config]

[[runners.kubernetes.volumes.host_path]]

name = "docker"

mount_path = "/var/run/docker.sock"

read_only = true

host_path = "/var/run/docker.sock"

[[runners.kubernetes.volumes.host_path]]

name = "host-time"

mount_path = "/etc/localtime"

read_only = true

host_path = "/etc/localtime"

[[runners.kubernetes.volumes.config_map]]

name = "tz-config"

mount_path = "/etc/timezone"

sub_path = "TZ"

EOF

# 启动 runner 并让脚本等待其退出

echo "Starting runner ${RUNNER_NAME} ..."

${RUNNER_BIN} run --user=gitlab-runner --working-directory=/home/gitlab-runner &

wait

1.8 Runner service

[root@k8s-master1 runner]# cat gitlab-runner-statefulset.yaml

apiVersion: apps/v1

kind: StatefulSet

metadata:

name: gitlab-ci-runner

namespace: dev-ops

labels:

app: gitlab-ci-runner

spec:

selector:

matchLabels:

app: gitlab-ci-runner

updateStrategy:

type: RollingUpdate

replicas: 2

serviceName: gitlab-ci-runner

template:

metadata:

labels:

app: gitlab-ci-runner

spec:

serviceAccountName: gitlab-ci

containers:

- image: gitlab/gitlab-runner:v16.10.0

name: gitlab-ci-runner

command: ["/bin/bash", "-c", "/scripts/run.sh"]

#command: ["/bin/bash", "-c", "sleep 9999"]

envFrom:

- configMapRef:

name: gitlab-ci-runner-env

- secretRef:

name: gitlab-runner-secrets

ports:

- containerPort: 9100

name: http-metrics

protocol: TCP

volumeMounts:

- name: gitlab-ci-runner-scripts

mountPath: "/scripts/run.sh"

subPath: "run.sh"

# 由于我 gitlab 使用了 https 协议,所以我把域名根证书挂载到容器中了

- name: ca-cert

mountPath: /etc/gitlab-runner/certs

- name: host-time

mountPath: /etc/localtime

volumes:

- name: gitlab-ci-runner-scripts

configMap:

name: gitlab-ci-runner-scripts

defaultMode: 0777

- name: ca-cert

secret:

secretName: gitlab-ca-cert

- name: host-time

hostPath:

path: /etc/localtime

restartPolicy: Always

2. Using the shell executor

The base configuration is the same as above; only the key settings change.

2.1 Configure the Runner variables

[root@k8s-master1 runner]# cat gitlab-runner-configmap.yaml

kind: ConfigMap

metadata:

labels:

app: gitlab-ci-runner

name: gitlab-ci-runner-env

namespace: dev-ops

apiVersion: v1

data:

CACHE_TYPE: "s3"

CACHE_SHARED: "true"

CACHE_S3_SERVER_ADDRESS: "minio-runner:9000"

CACHE_S3_BUCKET_NAME: "gitlab-runners"

CACHE_S3_INSECURE: "true"

CACHE_PATH: "k8s-gitlab"

CACHE_S3_BUCKET_LOCATION: "k8s-gitlab"

REGISTER_NON_INTERACTIVE: "true"

REGISTER_LOCKED: "false"

METRICS_SERVER: "0.0.0.0:9100"

CI_SERVER_URL: "https://k8s-gitlab.linuxtian.com/ci"

# 由于我 gitlab 使用了 https 协议,所以我把域名根证书挂载到容器中了

CI_SERVER_TLS_CA_FILE: "/etc/gitlab-runner/certs/ca.crt"

RUNNER_REQUEST_CONCURRENCY: "1"

# 使用 shell 为执行器

RUNNER_EXECUTOR: "shell"

RUNNER_TAG_LIST: "k8s-runner"

RUNNER_NAME: "k8s-runner"

RUNNER_OUTPUT_LIMIT: "5120000"

2.2 Configure the Runner startup and registration script

[root@k8s-master1 runner-shell]# cat gitlab-runner-scripts-configmap.yaml

apiVersion: v1

kind: ConfigMap

metadata:

name: gitlab-ci-runner-scripts

namespace: dev-ops

labels:

app: gitlab-ci-runner

data:

run.sh: |

#!/bin/bash

set -e

CONFIG_FILE="/etc/gitlab-runner/config.toml"

RUNNER_BIN="/usr/bin/gitlab-runner"

# 注销 runner

unregister() {

kill %1

echo "注销 runner ${RUNNER_NAME} ..."

gitlab-runner unregister -t "$(gitlab-runner list 2>&1 | tail -n1 | awk '{print $4}' | cut -d'=' -f2)" -n ${RUNNER_NAME}

exit $?

}

# 设置信号捕获

trap 'unregister' EXIT HUP INT QUIT PIPE TERM

echo "注册 runner ${RUNNER_NAME} ..."

if [[ -n "${CI_SERVER_TLS_CA_FILE}" ]]; then

echo "使用 Gitlab CA 根证书注册 ..."

${RUNNER_BIN} register \

--non-interactive \

--url "${CI_SERVER_URL}" \

--registration-token "${GITLAB_CI_TOKEN}" \

--executor "${RUNNER_EXECUTOR:-shell}" \

--tls-ca-file "${CI_SERVER_TLS_CA_FILE}" \

--description "${RUNNER_NAME}"

cp "${CI_SERVER_TLS_CA_FILE}" /etc/pki/ca-trust/source/anchors/

update-ca-trust extract

else

echo "使用默认方式注册 ..."

${RUNNER_BIN} register \

--non-interactive \

--url "${CI_SERVER_URL}" \

--registration-token "${GITLAB_CI_TOKEN}" \

--executor "${RUNNER_EXECUTOR:-shell}" \

--description "${RUNNER_NAME}"

fi

# 添加配置文件

cat > "$CONFIG_FILE" <<EOF

concurrent = ${RUNNER_REQUEST_CONCURRENCY:-1}

check_interval = 0

shutdown_timeout = 0

[session_server]

session_timeout = 1800

[[runners]]

name = "${RUNNER_NAME}"

output_limit = ${RUNNER_OUTPUT_LIMIT:-5120000}

request_concurrency = ${RUNNER_REQUEST_CONCURRENCY:-4}

url = "${CI_SERVER_URL}"

token = "${GITLAB_CI_TOKEN}"

executor = "${RUNNER_EXECUTOR:-shell}"

tls-ca-file = "${CI_SERVER_TLS_CA_FILE:-""}"

[runners.cache]

Type = "${CACHE_TYPE:-s3}"

Path = "${CACHE_PATH:-k8s-gitlab}"

Shared = ${CACHE_SHARED:-true}

[runners.cache.s3]

ServerAddress = "${CACHE_S3_SERVER_ADDRESS}"

AccessKey = "${CACHE_S3_ACCESS_KEY}"

SecretKey = "${CACHE_S3_SECRET_KEY}"

BucketName = "${CACHE_S3_BUCKET_NAME}"

BucketLocation = "${CACHE_S3_BUCKET_LOCATION}"

Insecure = ${CACHE_S3_INSECURE:-true}

EOF

echo "启动 GitLab Runner..."

${RUNNER_BIN} run -n ${RUNNER_NAME} --user=root --working-directory=/etc/gitlab-runner &

wait

2.3 Build the Runner image

With the shell executor the Runner itself runs the pipeline, so the image still needs the basic build tooling installed, much like a Jenkins agent.
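Before pointing pipelines at a shell-executor image, it can be worth confirming that the expected tools are on the PATH. The following is a minimal sketch; `missing_tools` is a hypothetical helper, and the tool list is an assumption based on the commands used elsewhere in this article.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print every command from the argument list that is not on PATH.
missing_tools() {
  local tool
  for tool in "$@"; do
    command -v "$tool" >/dev/null 2>&1 || echo "$tool"
  done
}

# Tools that the pipelines in this article call (assumed list; adjust as needed).
missing=$(missing_tools git java mvn node docker kubectl)

if [ -z "$missing" ]; then
  echo "all build tools found"
else
  echo "missing tools:" $missing
fi
```

Running this inside the built image (for example as the first step of a test job) gives a quick signal that the image needs another layer before real builds fail halfway through.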

[root@k8s-master1 build]# wget https://fileserver.tianxiang.love/chfs/shared/jdk-8u441-linux-x64.tar.gz

[root@k8s-master1 build]# wget https://archive.apache.org/dist/maven/maven-3/3.6.3/binaries/apache-maven-3.6.3-bin.tar.gz

[root@k8s-master1 build]# wget https://gitlab-runner-downloads.s3.amazonaws.com/v16.10.0/binaries/gitlab-runner-linux-amd64

[root@k8s-master1 build]# ls

apache-maven-3.6.3-bin.tar.gz Dockerfile gitlab-runner-linux-amd64 jdk-8u441-linux-x64.tar.gz settings.xml simkai.ttf simsun.ttc

# simkai.ttf and simsun.ttc are font files copied from a Windows system (KaiTi and SimSun)

[root@k8s-master1 build]# cat Dockerfile

FROM harbor.tianxiang.love:30443/library/centos:7
LABEL maintainer="2099637909@qq.com"
LABEL description="GitLab Runner with Maven, Node.js, Java, Docker based on CentOS 7"

# Configure the package repositories
RUN rm -rf /etc/yum.repos.d/* && \
    curl -o /etc/yum.repos.d/CentOS-Base.repo http://mirrors.aliyun.com/repo/Centos-7.repo && \
    curl -o /etc/yum.repos.d/docker-ce.repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

# Install base tools
RUN yum install -y \
    epel-release \
    curl \
    wget \
    unzip \
    tar \
    git \
    ca-certificates \
    sudo \
    which \
    bash-completion \
    yum-utils \
    device-mapper-persistent-data \
    lvm2 \
    kde-l10n-Chinese \
    fontconfig mkfontscale

# Set the time zone
RUN ln -sf /usr/share/zoneinfo/Asia/Shanghai /etc/localtime

# Configure the Chinese locale
RUN localedef -c -f UTF-8 -i zh_CN zh_CN.UTF-8 && \
    echo 'LANG="zh_CN.GB18030"' > /etc/locale.conf && \
    source /etc/locale.conf
ENV LANG=zh_CN.UTF-8 \
    LC_ALL=zh_CN.UTF-8

# Install fonts
RUN mkdir -p /usr/share/fonts/chinese && \
    chmod -R 755 /usr/share/fonts/chinese
COPY simkai.ttf /usr/share/fonts/chinese
COPY simsun.ttc /usr/share/fonts/chinese
RUN cd /usr/share/fonts/chinese && \
    mkfontscale && \
    fc-list :lang=zh

# Install Docker
RUN yum -y install docker-ce-20.10.9-3.el7

# Install Node.js 16.x
RUN curl -fsSL https://rpm.nodesource.com/setup_16.x | bash - && \
    yum install -y nodejs && yum clean all

# Install Maven
ADD apache-maven-3.6.3-bin.tar.gz /usr/local/
COPY settings.xml /usr/local/apache-maven-3.6.3/conf/settings.xml
ENV MAVEN_HOME=/usr/local/apache-maven-3.6.3
ENV PATH=$MAVEN_HOME/bin:$PATH

# Install the JDK
ADD jdk-8u441-linux-x64.tar.gz /usr/local/
ENV JAVA_HOME=/usr/local/jdk1.8.0_441
ENV PATH=$JAVA_HOME/bin:$PATH
ENV CLASSPATH=$CLASSPATH:$JAVA_HOME/lib:$JAVA_HOME/lib/tools.jar

# Install the kubectl binary
#COPY kubectl /usr/bin/kubectl

# Add the gitlab-runner binary (v16.10.0)
COPY gitlab-runner-linux-amd64 /usr/bin/gitlab-runner
RUN chmod +x /usr/bin/gitlab-runner

# Create the working directory
WORKDIR /etc/gitlab-runner

# Set the default user
USER root

2.4 Runner service

Because the Runner now runs pipelines itself instead of in per-job pods, the Docker socket and the kubectl binary have to be mounted into it.

[root@k8s-master1 runner]# cat gitlab-runner-deployment.yaml

apiVersion: apps/v1

kind: Deployment

metadata:

name: gitlab-ci-runner

namespace: dev-ops

labels:

app: gitlab-ci-runner

spec:

selector:

matchLabels:

app: gitlab-ci-runner

replicas: 10

template:

metadata:

labels:

app: gitlab-ci-runner

spec:

serviceAccountName: gitlab-ci

containers:

- image: harbor.tianxiang.love:30443/dev-ops/gitlab-runner:centos7-v16.10.0 # 使用自己制作的镜像

imagePullPolicy: Always

name: gitlab-ci-runner

command: ["/bin/bash", "-c", "/scripts/run.sh"]

#command: ["/bin/bash", "-c", "sleep 9999"]

envFrom:

- configMapRef:

name: gitlab-ci-runner-env

- secretRef:

name: gitlab-runner-secrets

ports:

- containerPort: 9100

name: http-metrics

protocol: TCP

volumeMounts:

- name: gitlab-ci-runner-scripts

mountPath: "/scripts/run.sh"

subPath: "run.sh"

# https 自签名根证书

- name: ca-cert

mountPath: /etc/gitlab-runner/certs

- name: host-time

mountPath: /etc/localtime

- name: kubectl

mountPath: /usr/bin/kubectl

- name: docker-sock

mountPath: /var/run/docker.sock

readOnly: true

volumes:

- name: gitlab-ci-runner-scripts

configMap:

name: gitlab-ci-runner-scripts

defaultMode: 0777

- name: ca-cert

secret:

secretName: gitlab-ca-cert

- name: host-time

hostPath:

path: /etc/localtime

- name: kubectl

hostPath:

path: /usr/bin/kubectl

type: File

- name: docker-sock

hostPath:

path: /var/run/docker.sock

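restartPolicy: Always

IV. The gitlab-ci file

1. Maven project usage

Companion Java demo project: java-demo-project.zip

The .gitlab-ci.yml file

Different projects need different parameters, so adjust the following to fit your own project.

Line 42:

# Determine the JAR file name dynamically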

- JAR_NAME=$(find ./target -maxdepth 1 -name "*.jar" ! -name "*-sources.jar" ! -name "*-javadoc.jar" ! -name "original-*" | head -n 1)
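To see what this `find` filter selects, it can be tried against a throwaway directory. The sketch below is illustrative: `pick_jar` is a hypothetical wrapper around the same expression, and the file names are made up.

```shell
#!/usr/bin/env bash
# Same filter as the pipeline line, wrapped in a hypothetical function
# that takes the directory to search as its argument.
pick_jar() {
  find "$1" -maxdepth 1 -name "*.jar" \
    ! -name "*-sources.jar" ! -name "*-javadoc.jar" ! -name "original-*" | head -n 1
}

# Throwaway directory with made-up file names, standing in for ./target.
target=$(mktemp -d)
touch "$target/demo-1.0.jar" "$target/demo-1.0-sources.jar" \
      "$target/demo-1.0-javadoc.jar" "$target/original-demo-1.0.jar"

basename "$(pick_jar "$target")"   # prints demo-1.0.jar
rm -rf "$target"
```

If more than one runnable JAR ends up in target, `head -n 1` keeps whichever one `find` lists first, so projects with several modules may need a stricter `-name` pattern.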

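Lines 80-83:

# Name of the project as deployed in k8s

- PROJECT_NAME="k8s-app"

# Image name

- IMAGE_NAME="java-demon-project"

In lines 144-328, change the k8s environment addresses to match your own.

The complete configuration file follows: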

stages:

- package

- docker_build

- deploy_k8s

variables:

GIT_DEPTH: 0 # 完整克隆代码

MAVEN_OPTS: "-Dmaven.repo.local=${CI_PROJECT_DIR}/.cache/maven" # Maven 缓存

# 全局缓存配置(所有 job 继承)

cache:

key: ${CI_COMMIT_REF_SLUG}

paths:

- .cache/maven # 全局缓存 .cache 目录

policy: pull-push

before_script:

- mkdir -p .cache/maven/jar/

- export MAVEN_OPTS="-Dmaven.repo.local=${CI_PROJECT_DIR}/.cache/maven"

- export PATH=/usr/local/apache-maven-3.6.3/bin:$PATH

mvn_build_job:

image: harbor.tianxiang.love:30443/library/maven-3.6.3-jdk-21:latest

stage: package

tags:

- k8s-runner

script:

# 动态构建模式

- |

if [[ "$CI_COMMIT_BRANCH" =~ ^pre.* ]]; then

PROFILE=pre

elif [[ "$CI_COMMIT_BRANCH" =~ ^dev.* ]]; then

PROFILE=dev

elif [[ "$CI_COMMIT_BRANCH" == "main" || "$CI_COMMIT_BRANCH" =~ ^prod.* ]]; then

PROFILE=prod

fi

# maven 构建并指定缓存目录

- mvn -B clean install -P${PROFILE} -Dmaven.test.skip=true #-Dmaven.repo.local=.cache/maven

# 动态获取 JAR 二进制文件名

- JAR_NAME=$(find target -maxdepth 1 -name "*.jar" ! -name "*-sources.jar" ! -name "*-javadoc.jar" ! -name "original-*" | head -n 1)

- ls $JAR_NAME

# 创建缓存 JAR 二进制文件目录

- cp $JAR_NAME .cache/maven/jar/

# Verify that the JAR was copied into the cache directory

- ls -lah .cache/maven/jar/

rules:

- if: '$CI_COMMIT_BRANCH =~ /^pre.*/' # 匹配 pre 开头的分支,如 pre-2025-05-15

when: on_success

- if: '$CI_COMMIT_BRANCH =~ /^dev.*/' # 匹配 dev 开头的分支

when: on_success

- if: '$CI_COMMIT_BRANCH == "main"' # 只匹配 main 分支

when: on_success

- if: '$CI_COMMIT_BRANCH =~ /^prod.*/' # 匹配 prod 开头的分支,如 prod-2025-05-15

when: on_success

docker_build_job:

image: harbor.tianxiang.love:30443/library/docker:latest

stage: docker_build

tags:

- k8s-runner

script:

# 验证上个阶段的构建产物是否在本阶段存在

- ls -lah .cache/maven/jar/

# 从缓存中动态获取 JAR 文件名

- JAR_NAME=$(ls $(pwd)/.cache/maven/jar/*.jar)

# 复制 JAR 文件到项目根目录 target 目录中以备 Dockerfile 使用

- mkdir -p target

- cp $JAR_NAME target/

# 定义 k8s 部署项目名称

- PROJECT_NAME="k8s-app"

# 定义镜像名称

- IMAGE_NAME="java-demon-project"

# 生成镜像标签

- TAG_NAME="${CI_COMMIT_REF_NAME}-$(date +%Y-%m-%d-%H-%M)-${CI_COMMIT_SHORT_SHA}-${CI_PIPELINE_ID}"

# 动态构建模式

- |

if [[ "$CI_COMMIT_BRANCH" =~ ^pre.* ]]; then

PROFILE=pre

HARBOR_ADDRESS=harbor.meta42-uat.com

# 预生产环境 Docker 认证

mkdir -pv ~/.docker/

echo $PRE_DOCKER_AUTH_CONFIG | base64 -d > ~/.docker/config.json

# 配置 docker ca 证书

mkdir -pv /etc/docker/certs.d/harbor.tianxiang.love:30443

echo $PRE_DOCKER_CA | base64 -d > /etc/docker/certs.d/harbor.tianxiang.love:30443/ca.crt

cat /etc/docker/certs.d/harbor.tianxiang.love:30443/ca.crt

ENV_DESC="预生产环境"

elif [[ "$CI_COMMIT_BRANCH" =~ ^dev.* ]]; then

PROFILE=dev

HARBOR_ADDRESS=harbor.tianxiang.love:30443

# 开发环境 Docker 认证

mkdir -pv ~/.docker/

echo $DEV_DOCKER_AUTH_CONFIG | base64 -d > ~/.docker/config.json

# 配置 docker ca 证书

mkdir -pv /etc/docker/certs.d/harbor.tianxiang.love:30443

echo $DEV_DOCKER_CA | base64 -d > /etc/docker/certs.d/harbor.tianxiang.love:30443/ca.crt

cat /etc/docker/certs.d/harbor.tianxiang.love:30443/ca.crt

ENV_DESC="开发环境"

elif [[ "$CI_COMMIT_BRANCH" == "main" || "$CI_COMMIT_BRANCH" =~ ^prod.* ]]; then

PROFILE=prod

HARBOR_ADDRESS=harbor.tianxiang.love:30443

# 生产环境 Docker 认证

mkdir -pv ~/.docker/

echo $PROD_DOCKER_AUTH_CONFIG | base64 -d > ~/.docker/config.json

# 配置 docker ca 证书

mkdir -pv /etc/docker/certs.d/harbor.tianxiang.love:30443

echo $PROD_DOCKER_CA | base64 -d > /etc/docker/certs.d/harbor.tianxiang.love:30443/ca.crt

cat /etc/docker/certs.d/harbor.tianxiang.love:30443/ca.crt

ENV_DESC="生产环境"

else

echo "❌ 未识别的分支,构建终止"

exit 1

fi

- |

cat <<EOF > .cache/maven/.ENV_FILE.env

PROJECT_NAME=$PROJECT_NAME

IMAGE_NAME=$IMAGE_NAME

TAG_NAME=$TAG_NAME

HARBOR_ADDRESS=$HARBOR_ADDRESS

EOF

- cat .cache/maven/.ENV_FILE.env

- echo "制作镜像 $IMAGE_NAME:$TAG_NAME 到$ENV_DESC"

- ls -la /etc/docker/certs.d/

- ls -la /etc/docker/certs.d/*/

- docker login $HARBOR_ADDRESS

- docker pull harbor.tianxiang.love:30443/library/jdk-8u421:v1.8.0_421

- docker build --build-arg PROFILE=$PROFILE -t $HARBOR_ADDRESS/$PROJECT_NAME/$IMAGE_NAME:$TAG_NAME .

- docker push $HARBOR_ADDRESS/$PROJECT_NAME/$IMAGE_NAME:$TAG_NAME

- MSG="✅ 已推送镜像 \"$HARBOR_ADDRESS/$PROJECT_NAME/$IMAGE_NAME:$TAG_NAME\" 到$ENV_DESC"

- echo "$MSG"

- docker rmi $HARBOR_ADDRESS/$PROJECT_NAME/$IMAGE_NAME:$TAG_NAME

artifacts:

paths:

- .cache/maven/.ENV_FILE.env

expire_in: 1 week

rules:

- if: '$CI_COMMIT_BRANCH =~ /^pre.*/' # 匹配 pre 开头的分支,如 pre-2025-05-15

when: on_success

- if: '$CI_COMMIT_BRANCH =~ /^dev.*/' # 匹配 dev 开头的分支

when: on_success

- if: '$CI_COMMIT_BRANCH == "main"' # 只匹配 main 分支

when: on_success

- if: '$CI_COMMIT_BRANCH =~ /^prod.*/' # 匹配 prod 开头的分支,如 prod-2025-05-15

when: on_success

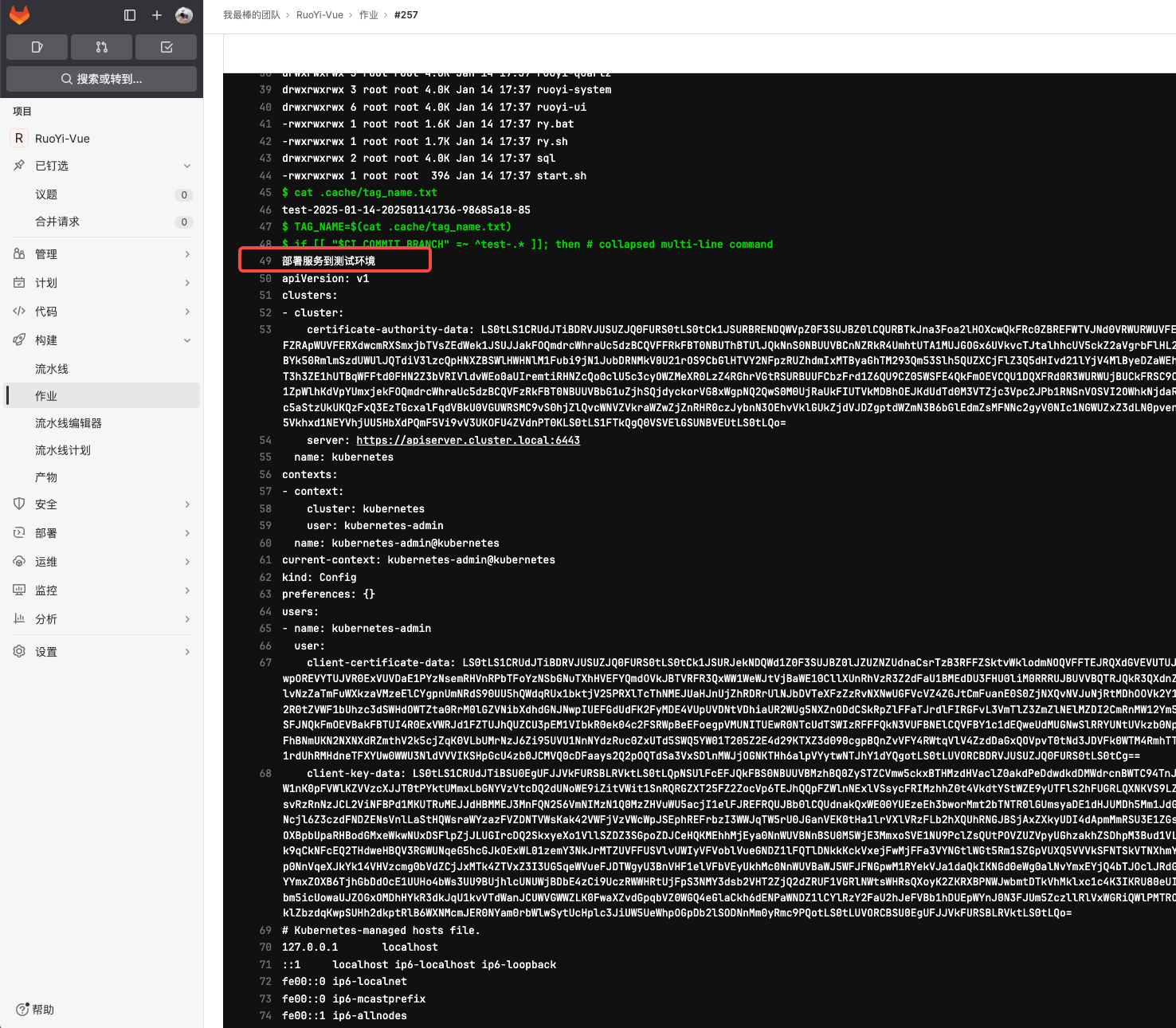

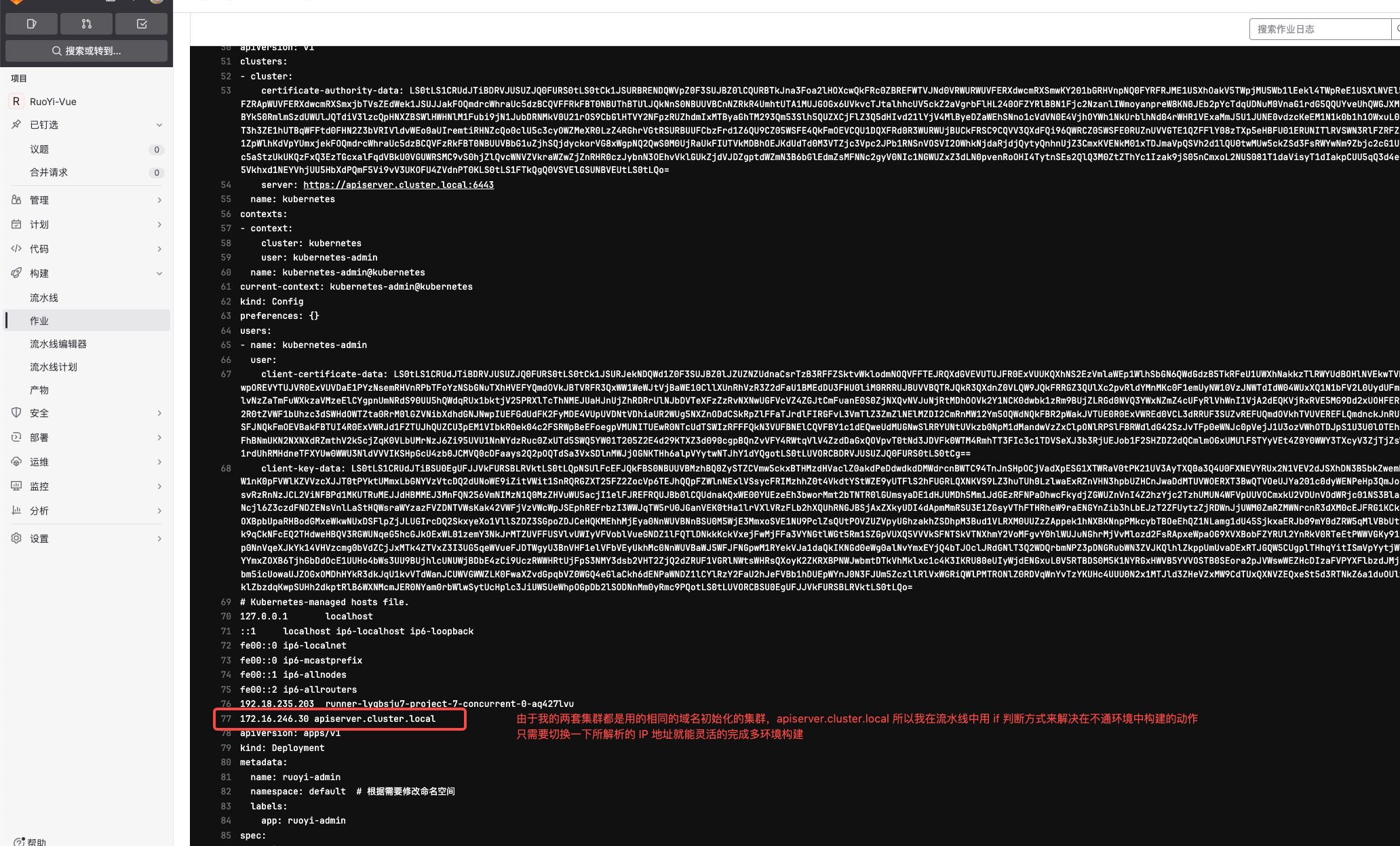

deploy_k8s_job:

image: harbor.tianxiang.love:30443/library/kubectl:v1.23.0

stage: deploy_k8s

tags:

- k8s-runner

script:

- mkdir -pv ~/.kube/

- ls -lh

# 加载缓存中的镜像信息变量

- source .cache/maven/.ENV_FILE.env

- |

if [[ "$CI_COMMIT_BRANCH" =~ ^pre.* ]]; then

echo '部署服务到预生产环境'

PROFILE=pre

echo "$PRE_KUBE_CONFIG" | base64 -d > ~/.kube/config

echo '192.168.233.246 apiserver.cluster.local' | tee -a /etc/hosts

sed -e "s/{{.IMAGE_NAME}}/$IMAGE_NAME/g" \

-e "s/{{.PROJECT_NAME}}/$PROJECT_NAME/g" \

-e "s/{{.TAG_NAME}}/$TAG_NAME/g" \

-e "s/{{.HARBOR_ADDRESS}}/$HARBOR_ADDRESS/g" \

-e "s/{{.PROFILE}}/$PROFILE/g" \

k8s/deployment-pre.tml > k8s/deployment-pre.yaml

cat k8s/deployment-pre.yaml

# 记录部署开始时间

DEPLOY_START_TIME=$(date +%s)

echo "DEPLOY_START_TIME=$DEPLOY_START_TIME" > /tmp/deploy_time.env

kubectl apply -f k8s/deployment-pre.yaml

echo "🚀 开始部署..."

# 检查 pod 启动情况

MAX_RETRIES=120

SLEEP_SECONDS=3

TIMEOUT=$((MAX_RETRIES * SLEEP_SECONDS))

# 从文件读取部署开始时间

if [[ -f /tmp/deploy_time.env ]]; then

source /tmp/deploy_time.env

else

echo "❌ 未找到部署时间记录"

exit 1

fi

echo "⏳ 等待新创建的 Pod 启动并全部就绪最长 $TIMEOUT 秒..."

echo "📅 部署开始时间: $(date -d @$DEPLOY_START_TIME)"

for ((i = 1; i <= MAX_RETRIES; i++)); do

# 获取当前 Pod 信息

PODS_JSON=$(kubectl get pods -n "$PROJECT_NAME" -l k8s-app="$IMAGE_NAME" -o json 2>/dev/null)

# 获取在部署开始后创建的、就绪的 Pod 数

READY_COUNT=$(echo "$PODS_JSON" | jq --argjson start_time "$DEPLOY_START_TIME" '

[.items[]

| select(.status.phase == "Running")

| select(.status.containerStatuses != null)

| select(.status.containerStatuses[]?.ready == true)

| select((.metadata.creationTimestamp | sub("Z$"; "") | sub("T"; " ") | strptime("%Y-%m-%d %H:%M:%S") | mktime) >= $start_time)

] | length

')

# 获取预期副本数

EXPECTED_REPLICAS=$(kubectl get deploy -n "$PROJECT_NAME" "$IMAGE_NAME" -o jsonpath="{.spec.replicas}" 2>/dev/null || echo "1")

echo "第 $i 次检测:新就绪 Pod 数量: $READY_COUNT / 期望副本数: $EXPECTED_REPLICAS"

if [[ "$READY_COUNT" -eq "$EXPECTED_REPLICAS" ]]; then

echo "✅ 最新部署的 Pod 均已就绪"

# 显示新创建的Pod

echo "📋 新创建的 Pod 列表:"

kubectl get pods -n "$PROJECT_NAME" -l k8s-app="$IMAGE_NAME" --sort-by=.metadata.creationTimestamp -o wide

echo "🌐 创建的 Service 列表:"

kubectl get svc -n "$PROJECT_NAME" -l k8s-app="$IMAGE_NAME"

# 清理临时文件

rm -f /tmp/deploy_time.env

exit 0

fi

if [[ $i -eq $MAX_RETRIES ]]; then

echo "❌ 超时(${TIMEOUT} 秒):新创建的 Pod 未全部就绪"

echo "📋 当前 Pod 状态:"

kubectl get pods -n "$PROJECT_NAME" -l k8s-app="$IMAGE_NAME" -o wide

echo "🔍 部署详情:"

kubectl describe deploy -n "$PROJECT_NAME" "$IMAGE_NAME"

# 清理临时文件

rm -f /tmp/deploy_time.env

exit 1

fi

sleep "$SLEEP_SECONDS"

done

grep -v '192.168.233.246 apiserver.cluster.local' /etc/hosts > /tmp/hosts && cat /tmp/hosts > /etc/hosts

elif [[ "$CI_COMMIT_BRANCH" =~ ^dev.* ]]; then

echo '部署服务到开发环境'

PROFILE=dev

echo "$DEV_KUBE_CONFIG" | base64 -d > ~/.kube/config

echo '192.168.198.51 dev-apiserver.cluster.local' | tee -a /etc/hosts

sed -e "s/{{.IMAGE_NAME}}/$IMAGE_NAME/g" \

-e "s/{{.PROJECT_NAME}}/$PROJECT_NAME/g" \

-e "s/{{.TAG_NAME}}/$TAG_NAME/g" \

-e "s/{{.HARBOR_ADDRESS}}/$HARBOR_ADDRESS/g" \

-e "s/{{.PROFILE}}/$PROFILE/g" \

k8s/deployment-dev.tml > k8s/deployment-dev.yaml

cat k8s/deployment-dev.yaml

# 记录部署开始时间

DEPLOY_START_TIME=$(date +%s)

echo "DEPLOY_START_TIME=$DEPLOY_START_TIME" > /tmp/deploy_time.env

kubectl apply -f k8s/deployment-dev.yaml

echo "🚀 开始部署..."

# 检查 pod 启动情况

MAX_RETRIES=120

SLEEP_SECONDS=3

TIMEOUT=$((MAX_RETRIES * SLEEP_SECONDS))

# 从文件读取部署开始时间

if [[ -f /tmp/deploy_time.env ]]; then

source /tmp/deploy_time.env

else

echo "❌ 未找到部署时间记录"

exit 1

fi

echo "⏳ 等待新创建的 Pod 启动并全部就绪最长 $TIMEOUT 秒..."

echo "📅 部署开始时间: $(date -d @$DEPLOY_START_TIME)"

for ((i = 1; i <= MAX_RETRIES; i++)); do

# 获取当前 Pod 信息

PODS_JSON=$(kubectl get pods -n "$PROJECT_NAME" -l k8s-app="$IMAGE_NAME" -o json 2>/dev/null)

# 获取在部署开始后创建的、就绪的 Pod 数

READY_COUNT=$(echo "$PODS_JSON" | jq --argjson start_time "$DEPLOY_START_TIME" '

[.items[]

| select(.status.phase == "Running")

| select(.status.containerStatuses != null)

| select(all(.status.containerStatuses[]; .ready))

| select((.metadata.creationTimestamp | sub("Z$"; "") | sub("T"; " ") | strptime("%Y-%m-%d %H:%M:%S") | mktime) >= $start_time)

] | length

')

# 获取预期副本数

EXPECTED_REPLICAS=$(kubectl get deploy -n "$PROJECT_NAME" "$IMAGE_NAME" -o jsonpath="{.spec.replicas}" 2>/dev/null || echo "1")

echo "第 $i 次检测:新就绪 Pod 数量: $READY_COUNT / 期望副本数: $EXPECTED_REPLICAS"

if [[ "$READY_COUNT" -eq "$EXPECTED_REPLICAS" ]]; then

echo "✅ 最新部署的 Pod 均已就绪"

# 显示新创建的Pod

echo "📋 新创建的 Pod 列表:"

kubectl get pods -n "$PROJECT_NAME" -l k8s-app="$IMAGE_NAME" --sort-by=.metadata.creationTimestamp -o wide

echo "🌐 创建的 Service 列表:"

kubectl get svc -n "$PROJECT_NAME" -l k8s-app="$IMAGE_NAME"

# 清理临时文件

rm -f /tmp/deploy_time.env

exit 0

fi

if [[ $i -eq $MAX_RETRIES ]]; then

echo "❌ 超时(${TIMEOUT} 秒):新创建的 Pod 未全部就绪"

echo "📋 当前 Pod 状态:"

kubectl get pods -n "$PROJECT_NAME" -l k8s-app="$IMAGE_NAME" -o wide

echo "🔍 部署详情:"

kubectl describe deploy -n "$PROJECT_NAME" "$IMAGE_NAME"

# 清理临时文件

rm -f /tmp/deploy_time.env

exit 1

fi

sleep "$SLEEP_SECONDS"

done

grep -v '192.168.198.51 dev-apiserver.cluster.local' /etc/hosts > /tmp/hosts && cat /tmp/hosts > /etc/hosts

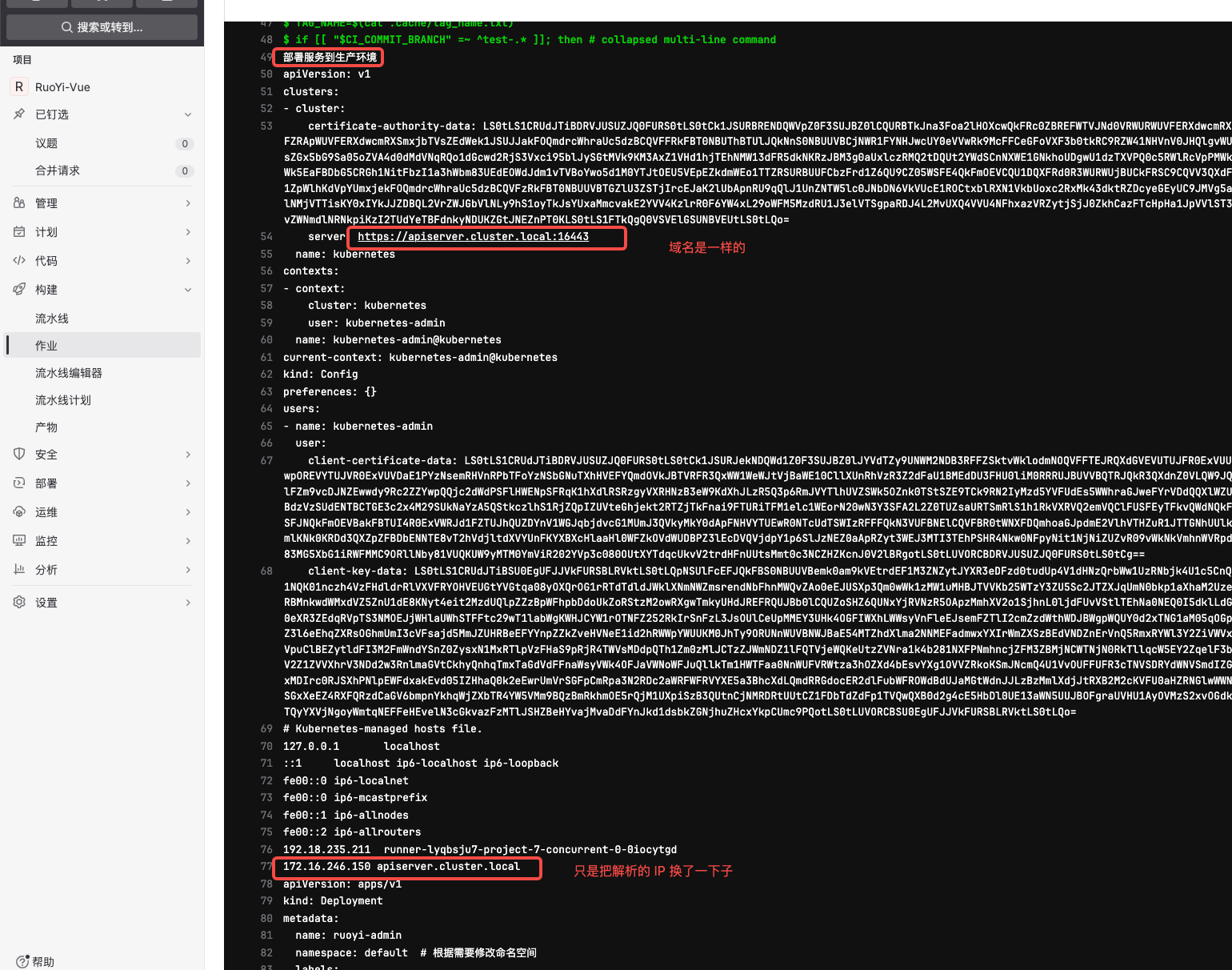

elif [[ "$CI_COMMIT_BRANCH" == "main" || "$CI_COMMIT_BRANCH" =~ ^prod.* ]]; then

echo '部署服务到生产环境'

PROFILE=prod

echo "$PROD_KUBE_CONFIG" | base64 -d > ~/.kube/config

echo '192.168.233.246 apiserver.cluster.local ' | tee -a /etc/hosts

sed -e "s/{{.IMAGE_NAME}}/$IMAGE_NAME/g" \

-e "s/{{.PROJECT_NAME}}/$PROJECT_NAME/g" \

-e "s/{{.TAG_NAME}}/$TAG_NAME/g" \

-e "s/{{.HARBOR_ADDRESS}}/$HARBOR_ADDRESS/g" \

-e "s/{{.PROFILE}}/$PROFILE/g" \

k8s/deployment-prod.tml > k8s/deployment-prod.yaml

cat k8s/deployment-prod.yaml

# 记录部署开始时间

DEPLOY_START_TIME=$(date +%s)

echo "DEPLOY_START_TIME=$DEPLOY_START_TIME" > /tmp/deploy_time.env

kubectl apply -f k8s/deployment-prod.yaml

echo "🚀 开始部署..."

# 检查 pod 启动情况

MAX_RETRIES=120

SLEEP_SECONDS=3

TIMEOUT=$((MAX_RETRIES * SLEEP_SECONDS))

# 从文件读取部署开始时间

if [[ -f /tmp/deploy_time.env ]]; then

source /tmp/deploy_time.env

else

echo "❌ 未找到部署时间记录"

exit 1

fi

echo "⏳ 等待新创建的 Pod 启动并全部就绪最长 $TIMEOUT 秒..."

echo "📅 部署开始时间: $(date -d @$DEPLOY_START_TIME)"

for ((i = 1; i <= MAX_RETRIES; i++)); do

# 获取当前 Pod 信息

PODS_JSON=$(kubectl get pods -n "$PROJECT_NAME" -l k8s-app="$IMAGE_NAME" -o json 2>/dev/null)

# 获取在部署开始后创建的、就绪的 Pod 数

READY_COUNT=$(echo "$PODS_JSON" | jq --argjson start_time "$DEPLOY_START_TIME" '

[.items[]

| select(.status.phase == "Running")

| select(.status.containerStatuses != null)

| select(all(.status.containerStatuses[]; .ready))

| select((.metadata.creationTimestamp | sub("Z$"; "") | sub("T"; " ") | strptime("%Y-%m-%d %H:%M:%S") | mktime) >= $start_time)

] | length

')

# 获取预期副本数

EXPECTED_REPLICAS=$(kubectl get deploy -n "$PROJECT_NAME" "$IMAGE_NAME" -o jsonpath="{.spec.replicas}" 2>/dev/null || echo "1")

echo "第 $i 次检测:新就绪 Pod 数量: $READY_COUNT / 期望副本数: $EXPECTED_REPLICAS"

if [[ "$READY_COUNT" -eq "$EXPECTED_REPLICAS" ]]; then

echo "✅ 最新部署的 Pod 均已就绪"

# 显示新创建的Pod

echo "📋 新创建的 Pod 列表:"

kubectl get pods -n "$PROJECT_NAME" -l k8s-app="$IMAGE_NAME" --sort-by=.metadata.creationTimestamp -o wide

echo "🌐 创建的 Service 列表:"

kubectl get svc -n "$PROJECT_NAME" -l k8s-app="$IMAGE_NAME"

# 清理临时文件

rm -f /tmp/deploy_time.env

exit 0

fi

if [[ $i -eq $MAX_RETRIES ]]; then

echo "❌ 超时(${TIMEOUT} 秒):新创建的 Pod 未全部就绪"

echo "📋 当前 Pod 状态:"

kubectl get pods -n "$PROJECT_NAME" -l k8s-app="$IMAGE_NAME" -o wide

echo "🔍 部署详情:"

kubectl describe deploy -n "$PROJECT_NAME" "$IMAGE_NAME"

# 清理临时文件

rm -f /tmp/deploy_time.env

exit 1

fi

sleep "$SLEEP_SECONDS"

done

grep -v '192.168.233.246 apiserver.cluster.local ' /etc/hosts > /tmp/hosts && cat /tmp/hosts > /etc/hosts

fi

rules:

- if: '$CI_COMMIT_BRANCH =~ /^pre.*/' # 匹配 pre 开头的分支,如 pre-2025-05-15

when: on_success

- if: '$CI_COMMIT_BRANCH =~ /^dev.*/' # 匹配 dev 开头的分支

when: on_success

- if: '$CI_COMMIT_BRANCH == "main"' # 只匹配 main 分支

when: manual # 手动触发 main 分支部署

- if: '$CI_COMMIT_BRANCH =~ /^prod.*/' # 匹配 prod 开头的分支,如 prod-2025-05-15

when: manual # deployments from prod-prefixed branches are triggered manually

Dockerfile

FROM harbor.tianxiang.love:30443/library/maven-3.6.3-jdk-21:latest

LABEL maintainer="zhentianxiang"

ARG PORT=8080

ARG LANG=zh_CN.UTF-8

ARG TZ=Asia/Shanghai

ARG PROFILE=dev

ENV JAVA_OPTS="-Dspring.profiles.active=${PROFILE}"

ENV PORT=${PORT}

ENV USER=root

ENV APP_HOME=/home/${USER}/apps

ENV JAR_FILE=app.jar

ENV LANG=${LANG}

ENV TZ=${TZ}

COPY target/*.jar ${APP_HOME}/${JAR_FILE}

COPY start.sh ${APP_HOME}/start.sh

WORKDIR ${APP_HOME}

CMD ["sh", "-c", "/bin/sh $APP_HOME/start.sh"]

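Companion templates: k8s/deployment-pre.tml, k8s/deployment-prod.tml, and k8s/deployment-dev.tml

The prod file is shown here as an example.

All three files have the same content; only the file names differ.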

kind: Deployment

apiVersion: apps/v1

metadata:

name: {{.IMAGE_NAME}}

namespace: {{.PROJECT_NAME}}

labels:

k8s-app: {{.IMAGE_NAME}}

app: {{.IMAGE_NAME}}

spec:

replicas: 1

selector:

matchLabels:

k8s-app: {{.IMAGE_NAME}}

app: {{.IMAGE_NAME}}

template:

metadata:

labels:

k8s-app: {{.IMAGE_NAME}}

app: {{.IMAGE_NAME}}

spec:

containers:

- name: {{.IMAGE_NAME}}

image: "{{.HARBOR_ADDRESS}}/{{.PROJECT_NAME}}/{{.IMAGE_NAME}}:{{.TAG_NAME}}"

imagePullPolicy: Always

ports:

- name: http-0

containerPort: 8080

protocol: TCP

env:

- name: JAVA_OPTS

value: "-Xms512m -Xmx2048m -Xmn256m -XX:+UseG1GC -Dspring.profiles.active={{.PROFILE}}"

- name: LANG

value: "zh_CN.UTF-8"

- name: TZ

value: "Asia/Shanghai" # 设置时区,避免日志时间错乱

resources:

limits:

cpu: '0.5'

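memory: 1Gi # Note: lower than the -Xmx2048m heap set in JAVA_OPTS above; raise this limit or lower -Xmx to avoid OOM kills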

requests:

cpu: '0.5'

memory: 512Mi

livenessProbe:

httpGet:

path: /health

port: 8080

scheme: HTTP

initialDelaySeconds: 60

periodSeconds: 10

successThreshold: 1

failureThreshold: 3 # 3次失败后重启

timeoutSeconds: 10

readinessProbe:

httpGet:

path: /health

port: 8080

scheme: HTTP

initialDelaySeconds: 60

periodSeconds: 10

failureThreshold: 3 # 3次失败后将容器标记为不健康

timeoutSeconds: 10

restartPolicy: Always

---

apiVersion: v1

kind: Service

metadata:

name: {{.IMAGE_NAME}}

namespace: {{.PROJECT_NAME}}

labels:

k8s-app: {{.IMAGE_NAME}}

app: {{.IMAGE_NAME}}

spec:

ports:

- name: http

port: 8080

protocol: TCP

targetPort: 8080

selector:

k8s-app: {{.IMAGE_NAME}}

type: NodePort

2. Usage for a Vue frontend

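Companion Vue frontend project: helloworld-vue-main.zip

.gitlab-ci.yml file

Different projects need different parameters, so adjust the following to suit your own project.

# Change lines 85-88

# Name of the k8s project to deploy into

- PROJECT_NAME="k8s-app"

# Image name

- IMAGE_NAME="helloworld-vue"

Also update the k8s environment addresses in lines 146-330.

stages: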

- package

- docker_build

- deploy_k8s

variables:

GIT_DEPTH: 0 # 完整克隆代码

NPM_CONFIG_CACHE: "${CI_PROJECT_DIR}/.cache/npm" # NodeJS 缓存

# 全局缓存配置(所有job继承)

cache:

key: ${CI_COMMIT_REF_SLUG}

paths:

- .cache/npm # 全局缓存 .cache 目录

policy: pull-push

before_script:

- mkdir -p .cache/npm/static/ ~/.docker/

- export NPM_CONFIG_CACHE="${CI_PROJECT_DIR}/.cache/npm"

node_build_job:

image: harbor.meta42.indc.vnet.com/tools/node:20-slim

#harbor.meta42.indc.vnet.com/tools/node:18-slim

#harbor.meta42.indc.vnet.com/tools/node:16-slim

stage: package

tags:

- K8S-Runner

script:

# 配置 npm 并指定缓存目录

- npm config set registry https://registry.npmmirror.com

#- npm config set cache $CI_PROJECT_DIR/.cache/npm

#- npm ci --prefer-offline --cache .cache/npm # 如果项目根目录中有 package-lock.json 则不用注释,否则使用下面的 npm install

- npm install --prefer-offline #--cache .cache/npm

# 动态构建模式

- |

if [[ "$CI_COMMIT_BRANCH" =~ ^pre.* ]]; then

PROFILE=pre

elif [[ "$CI_COMMIT_BRANCH" =~ ^dev.* ]]; then

PROFILE=dev

elif [[ "$CI_COMMIT_BRANCH" == "main" || "$CI_COMMIT_BRANCH" =~ ^prod.* ]]; then

PROFILE=prod

fi

echo "Current build mode: $PROFILE"

# Build the project (the mode is the PROFILE chosen above)

- npm run build -- --mode $PROFILE

# 准备构建产物

- cp -ra dist .cache/npm/static/

# 验证缓存是否完成

- ls -lah .cache/npm/static/

#artifacts: # 这种方式缓存不太友好,因为文件太大就会报错413

# paths:

# - dist

# expire_in: 1 week

rules:

- if: '$CI_COMMIT_BRANCH =~ /^pre.*/' # 匹配 pre 开头的分支,如 pre-2025-05-15

when: on_success

- if: '$CI_COMMIT_BRANCH =~ /^dev.*/' # 匹配 dev 开头的分支

when: on_success

- if: '$CI_COMMIT_BRANCH == "main"' # 只匹配 main 分支

when: on_success

- if: '$CI_COMMIT_BRANCH =~ /^prod.*/' # 匹配 prod 开头的分支,如 prod-2025-05-15

when: on_success

docker_build_job:

image: harbor.meta42.indc.vnet.com/tools/docker:latest

stage: docker_build

tags:

- K8S-Runner

script:

# 验证上个阶段的构建产物是否在本阶段存在

- ls -la .cache/npm/static/

# 复制到项目根目录中以备 Dockerfile 使用

- cp -ra .cache/npm/static/dist ./dist

- ls -alh dist

# 定义 k8s 部署项目名称

- PROJECT_NAME="k8s-app"

# 定义镜像名称

- IMAGE_NAME="helloworld-vue"

# 生成镜像标签

- TAG_NAME="${CI_COMMIT_REF_NAME}-$(date +%Y-%m-%d-%H-%M)-${CI_COMMIT_SHORT_SHA}-${CI_PIPELINE_ID}"

# 动态构建模式

- |

if [[ "$CI_COMMIT_BRANCH" =~ ^pre.* ]]; then

PROFILE=pre

HARBOR_ADDRESS=harbor.meta42-uat.com

# 预生产环境 Docker 认证

echo $PRE_DOCKER_AUTH_CONFIG | base64 -d > ~/.docker/config.json

ENV_DESC="预生产环境"

elif [[ "$CI_COMMIT_BRANCH" =~ ^dev.* ]]; then

PROFILE=dev

HARBOR_ADDRESS=harbor.meta42.indc.vnet.com

# 开发环境 Docker 认证

echo $PROD_DOCKER_AUTH_CONFIG | base64 -d > ~/.docker/config.json

ENV_DESC="开发环境"

elif [[ "$CI_COMMIT_BRANCH" == "main" || "$CI_COMMIT_BRANCH" =~ ^prod.* ]]; then

PROFILE=prod

HARBOR_ADDRESS=harbor.meta42.indc.vnet.com

# 生产环境 Docker 认证

echo $PROD_DOCKER_AUTH_CONFIG | base64 -d > ~/.docker/config.json

ENV_DESC="生产环境"

else

echo "❌ 未识别的分支,构建终止"

exit 1

fi

- |

cat <<EOF > .cache/npm/.ENV_FILE.env

PROJECT_NAME=$PROJECT_NAME

IMAGE_NAME=$IMAGE_NAME

TAG_NAME=$TAG_NAME

HARBOR_ADDRESS=$HARBOR_ADDRESS

EOF

- cat .cache/npm/.ENV_FILE.env

- docker build --build-arg PROFILE=$PROFILE -t $HARBOR_ADDRESS/$PROJECT_NAME/$IMAGE_NAME:$TAG_NAME .

- docker push $HARBOR_ADDRESS/$PROJECT_NAME/$IMAGE_NAME:$TAG_NAME

- MSG="✅ 已推送镜像 \"$HARBOR_ADDRESS/$PROJECT_NAME/$IMAGE_NAME:$TAG_NAME\" 到$ENV_DESC"

- echo "$MSG"

- docker rmi $HARBOR_ADDRESS/$PROJECT_NAME/$IMAGE_NAME:$TAG_NAME

artifacts:

paths:

- .cache/npm/.ENV_FILE.env

expire_in: 1 week

rules:

- if: '$CI_COMMIT_BRANCH =~ /^pre.*/' # 匹配 pre 开头的分支,如 pre-2025-05-15

when: on_success

- if: '$CI_COMMIT_BRANCH =~ /^dev.*/' # 匹配 dev 开头的分支

when: on_success

- if: '$CI_COMMIT_BRANCH == "main"' # 只匹配 main 分支

when: on_success

- if: '$CI_COMMIT_BRANCH =~ /^prod.*/' # 匹配 prod 开头的分支,如 prod-2025-05-15

when: on_success

deploy_k8s_job:

image: harbor.meta42.indc.vnet.com/tools/kubectl:1.23.0

stage: deploy_k8s

tags:

- K8S-Runner

script:

- mkdir -pv ~/.kube/

- ls -lh

# Load the image variables written by docker_build_job

- source .cache/npm/.ENV_FILE.env

- |

if [[ "$CI_COMMIT_BRANCH" =~ ^pre.* ]]; then

echo '部署服务到预生产环境'

PROFILE=pre

echo "$PRE_KUBE_CONFIG" | base64 -d > ~/.kube/config

cat ~/.kube/config

echo '192.168.248.30 apiserver.cluster.local' | tee -a /etc/hosts

cat /etc/hosts

sed -e "s/{{.IMAGE_NAME}}/$IMAGE_NAME/g" \

-e "s/{{.PROJECT_NAME}}/$PROJECT_NAME/g" \

-e "s/{{.TAG_NAME}}/$TAG_NAME/g" \

-e "s/{{.HARBOR_ADDRESS}}/$HARBOR_ADDRESS/g" \

-e "s/{{.PROFILE}}/$PROFILE/g" \

k8s/deployment-pre.tml > k8s/deployment-pre.yaml

cat k8s/deployment-pre.yaml

kubectl apply -f k8s/deployment-pre.yaml

# 检查 pod 启动情况

MAX_RETRIES=60

SLEEP_SECONDS=1

TIMEOUT=$((MAX_RETRIES * SLEEP_SECONDS))

echo "⏳ 等待新创建的 Pod 启动并全部就绪最长 $TIMEOUT 秒..."

NOW=$(date +%s)

for ((i = 1; i <= MAX_RETRIES; i++)); do

# 获取当前 Pod 信息

PODS_JSON=$(kubectl get pods -n "$PROJECT_NAME" -l k8s-app="$IMAGE_NAME" -o json)

# 获取就绪、运行状态且在最近 10 秒内创建的 Pod 数

READY_COUNT=$(echo "$PODS_JSON" | jq --argjson now "$NOW" '

[.items[]

| select(.status.phase == "Running")

| select((.status.containerStatuses // []) | (length > 0 and all(.[]; .ready)))

| select((($now - (.metadata.creationTimestamp | sub("Z$"; "") | sub("T"; " ") | strptime("%Y-%m-%d %H:%M:%S") | mktime)) < 10))

] | length

')

# 获取预期副本数

EXPECTED_REPLICAS=$(kubectl get deploy -n "$PROJECT_NAME" "$IMAGE_NAME" -o jsonpath="{.spec.replicas}")

echo "第 $i 次检测:新就绪 Pod 数量: $READY_COUNT / 期望副本数: $EXPECTED_REPLICAS"

if [[ "$READY_COUNT" -eq "$EXPECTED_REPLICAS" ]]; then

echo "✅ 最新部署的 Pod 均已就绪"

kubectl get pods -n "$PROJECT_NAME" -l k8s-app="$IMAGE_NAME" -o wide

exit 0

fi

if [[ $i -eq $MAX_RETRIES ]]; then

echo "❌ 超时(${TIMEOUT} 秒):新创建的 Pod 未全部就绪"

kubectl get pods -n "$PROJECT_NAME" -l k8s-app="$IMAGE_NAME" -o wide

kubectl describe deploy -n "$PROJECT_NAME" "$IMAGE_NAME"

exit 1

fi

sleep "$SLEEP_SECONDS"

done

grep -v '192.168.248.30 apiserver.cluster.local' /etc/hosts > /tmp/hosts && cat /tmp/hosts > /etc/hosts

elif [[ "$CI_COMMIT_BRANCH" =~ ^dev.* ]]; then

echo '部署服务到开发环境'

PROFILE=dev

echo "$DEV_KUBE_CONFIG" | base64 -d > ~/.kube/config

cat ~/.kube/config

#echo '192.168.100.30 dev-apiserver.cluster.local' | tee -a /etc/hosts

cat /etc/hosts

sed -e "s/{{.IMAGE_NAME}}/$IMAGE_NAME/g" \

-e "s/{{.PROJECT_NAME}}/$PROJECT_NAME/g" \

-e "s/{{.TAG_NAME}}/$TAG_NAME/g" \

-e "s/{{.HARBOR_ADDRESS}}/$HARBOR_ADDRESS/g" \

-e "s/{{.PROFILE}}/$PROFILE/g" \

k8s/deployment-dev.tml > k8s/deployment-dev.yaml

cat k8s/deployment-dev.yaml

kubectl apply -f k8s/deployment-dev.yaml

# 检查 pod 启动情况

MAX_RETRIES=60 # 开发环境可以减少等待时间

SLEEP_SECONDS=1

TIMEOUT=$((MAX_RETRIES * SLEEP_SECONDS))

echo "⏳ 等待新创建的 Pod 启动并全部就绪最长 $TIMEOUT 秒..."

NOW=$(date +%s)

for ((i = 1; i <= MAX_RETRIES; i++)); do

# 获取当前 Pod 信息

PODS_JSON=$(kubectl get pods -n "$PROJECT_NAME" -l k8s-app="$IMAGE_NAME" -o json)

# 获取就绪、运行状态且在最近 10 秒内创建的 Pod 数

READY_COUNT=$(echo "$PODS_JSON" | jq --argjson now "$NOW" '

[.items[]

| select(.status.phase == "Running")

| select((.status.containerStatuses // []) | (length > 0 and all(.[]; .ready)))

| select((($now - (.metadata.creationTimestamp | sub("Z$"; "") | sub("T"; " ") | strptime("%Y-%m-%d %H:%M:%S") | mktime)) < 10))

] | length

')

# 获取预期副本数

EXPECTED_REPLICAS=$(kubectl get deploy -n "$PROJECT_NAME" "$IMAGE_NAME" -o jsonpath="{.spec.replicas}")

echo "第 $i 次检测:新就绪 Pod 数量: $READY_COUNT / 期望副本数: $EXPECTED_REPLICAS"

if [[ "$READY_COUNT" -eq "$EXPECTED_REPLICAS" ]]; then

echo "✅ 最新部署的 Pod 均已就绪"

kubectl get pods -n "$PROJECT_NAME" -l k8s-app="$IMAGE_NAME" -o wide

exit 0

fi

if [[ $i -eq $MAX_RETRIES ]]; then

echo "❌ 超时(${TIMEOUT} 秒):新创建的 Pod 未全部就绪"

kubectl get pods -n "$PROJECT_NAME" -l k8s-app="$IMAGE_NAME" -o wide

kubectl describe deploy -n "$PROJECT_NAME" "$IMAGE_NAME"

exit 1

fi

sleep "$SLEEP_SECONDS"

done

#grep -v '192.168.100.30 dev-apiserver.cluster.local' /etc/hosts > /tmp/hosts && cat /tmp/hosts > /etc/hosts

elif [[ "$CI_COMMIT_BRANCH" == "main" || "$CI_COMMIT_BRANCH" =~ ^prod.* ]]; then

echo '部署服务到生产环境'

PROFILE=prod

echo "$PROD_KUBE_CONFIG" | base64 -d > ~/.kube/config

cat ~/.kube/config

echo '192.168.233.30 lb.kubesphere.local' | tee -a /etc/hosts

cat /etc/hosts

sed -e "s/{{.IMAGE_NAME}}/$IMAGE_NAME/g" \

-e "s/{{.PROJECT_NAME}}/$PROJECT_NAME/g" \

-e "s/{{.TAG_NAME}}/$TAG_NAME/g" \

-e "s/{{.HARBOR_ADDRESS}}/$HARBOR_ADDRESS/g" \

-e "s/{{.PROFILE}}/$PROFILE/g" \

k8s/deployment-prod.tml > k8s/deployment-prod.yaml

cat k8s/deployment-prod.yaml

kubectl apply -f k8s/deployment-prod.yaml

# 检查 pod 启动情况

MAX_RETRIES=60

SLEEP_SECONDS=1

TIMEOUT=$((MAX_RETRIES * SLEEP_SECONDS))

echo "⏳ 等待新创建的 Pod 启动并全部就绪最长 $TIMEOUT 秒..."

NOW=$(date +%s)

for ((i = 1; i <= MAX_RETRIES; i++)); do

# 获取当前 Pod 信息

PODS_JSON=$(kubectl get pods -n "$PROJECT_NAME" -l k8s-app="$IMAGE_NAME" -o json)

# 获取就绪、运行状态且在最近 10 秒内创建的 Pod 数

READY_COUNT=$(echo "$PODS_JSON" | jq --argjson now "$NOW" '

[.items[]

| select(.status.phase == "Running")

| select((.status.containerStatuses // []) | (length > 0 and all(.[]; .ready)))

| select((($now - (.metadata.creationTimestamp | sub("Z$"; "") | sub("T"; " ") | strptime("%Y-%m-%d %H:%M:%S") | mktime)) < 10))

] | length

')

# 获取预期副本数

EXPECTED_REPLICAS=$(kubectl get deploy -n "$PROJECT_NAME" "$IMAGE_NAME" -o jsonpath="{.spec.replicas}")

echo "第 $i 次检测:新就绪 Pod 数量: $READY_COUNT / 期望副本数: $EXPECTED_REPLICAS"

if [[ "$READY_COUNT" -eq "$EXPECTED_REPLICAS" ]]; then

echo "✅ 最新部署的 Pod 均已就绪"

kubectl get pods -n "$PROJECT_NAME" -l k8s-app="$IMAGE_NAME" -o wide

exit 0

fi

if [[ $i -eq $MAX_RETRIES ]]; then

echo "❌ 超时(${TIMEOUT} 秒):新创建的 Pod 未全部就绪"

kubectl get pods -n "$PROJECT_NAME" -l k8s-app="$IMAGE_NAME" -o wide

kubectl describe deploy -n "$PROJECT_NAME" "$IMAGE_NAME"

exit 1

fi

sleep "$SLEEP_SECONDS"

done

grep -v '192.168.233.30 lb.kubesphere.local' /etc/hosts > /tmp/hosts && cat /tmp/hosts > /etc/hosts

fi

rules:

- if: '$CI_COMMIT_BRANCH =~ /^pre.*/' # 匹配 pre 开头的分支,如 pre-2025-05-15

when: on_success

- if: '$CI_COMMIT_BRANCH =~ /^dev.*/' # 匹配 dev 开头的分支

when: on_success

- if: '$CI_COMMIT_BRANCH == "main"' # 只匹配 main 分支

when: manual # 手动触发 main 分支部署

- if: '$CI_COMMIT_BRANCH =~ /^prod.*/' # 匹配 prod 开头的分支,如 prod-2025-05-15

when: manual # deployments from prod-prefixed branches are triggered manually

Dockerfile

FROM harbor.tianxiang.love:30443/library/nginx:latest

# 将构建好的 dist 目录复制到 Nginx 的默认静态文件目录

COPY dist /usr/share/nginx/html

# 如果有自定义的 nginx 配置文件,可以复制到容器中

COPY front-server.conf /etc/nginx/conf.d/default.conf

# 暴露 Nginx 使用的端口,默认是 80

EXPOSE 80

# 启动 Nginx 服务

CMD ["nginx", "-g", "daemon off;"]

front-server.conf configuration file

server {

listen 80;

server_name localhost;

root /usr/share/nginx/html;

index index.html;

location / {

try_files $uri $uri/ /index.html;

}

access_log /var/log/nginx/access.log;

error_log /var/log/nginx/error.log;

}

3. Usage for a Go project

stages:

- package

- docker_build

- deploy_k8s

variables:

GIT_DEPTH: 0 # 完整克隆代码

GOCACHE: "${CI_PROJECT_DIR}/.cache/go-build" # 设置GOCACHE到当前目录下的缓存目录

GOPATH: "${CI_PROJECT_DIR}/.cache/go" # 设置模块缓存位置

# 全局缓存配置(所有 job 继承)

cache:

key: ${CI_COMMIT_REF_SLUG}

paths:

- .cache/go-build # 构建缓存

- .cache/go/pkg/mod # 模块缓存

- bin/ # 构建输出目录

policy: pull-push

before_script:

- mkdir -p .cache/go-build .cache/go bin/ ~/.docker/

- export GOCACHE="${CI_PROJECT_DIR}/.cache/go-build"

- export GOPATH="${CI_PROJECT_DIR}/.cache/go"

- export GOPROXY=https://goproxy.cn

- export GO111MODULE=on

go_build_job:

image: harbor.meta42.indc.vnet.com/tools/golang:1.23.2

stage: package

tags:

- K8S-Runner

script:

# 动态构建模式

- APP_NAME="helloworld-go"

- |

if [[ "$CI_COMMIT_BRANCH" =~ ^pre.* ]]; then

export APP_ENV=pre

BUILD_FLAGS="-X main.version=pre-${CI_COMMIT_SHORT_SHA}"

elif [[ "$CI_COMMIT_BRANCH" =~ ^dev.* ]]; then

export APP_ENV=dev

BUILD_FLAGS="-X main.version=dev-${CI_COMMIT_SHORT_SHA}"

elif [[ "$CI_COMMIT_BRANCH" == "main" || "$CI_COMMIT_BRANCH" =~ ^prod.* ]]; then

export APP_ENV=prod

BUILD_FLAGS="-X main.version=${CI_COMMIT_SHORT_SHA}"

fi

# 下载依赖

- go mod download

# 构建应用

- go build -ldflags "$BUILD_FLAGS" -o bin/${APP_NAME}

# 验证构建结果

- ls -lah bin/

#- ./bin/${APP_NAME} -version # 测试可执行文件

artifacts:

paths:

- bin/ # APP_NAME is set inside script and is not available to artifacts:paths

expire_in: 1 week

rules:

- if: '$CI_COMMIT_BRANCH =~ /^pre.*/' # 匹配 pre 开头的分支,如 pre-2025-05-15

when: on_success

- if: '$CI_COMMIT_BRANCH =~ /^dev.*/' # 匹配 dev 开头的分支

when: on_success

- if: '$CI_COMMIT_BRANCH == "main"' # 只匹配 main 分支

when: on_success

- if: '$CI_COMMIT_BRANCH =~ /^prod.*/' # 匹配 prod 开头的分支,如 prod-2025-05-15

when: on_success

docker_build_job:

image: harbor.meta42.indc.vnet.com/tools/docker:latest

stage: docker_build

tags:

- K8S-Runner

script:

# 验证上个阶段的构建产物是否在本阶段存在

- ls -lah bin/

# 定义 k8s 部署项目名称

- PROJECT_NAME="k8s-app"

# 定义镜像名称

- IMAGE_NAME="helloworld-go"

# 生成镜像标签

- TAG_NAME="${CI_COMMIT_REF_NAME}-$(date +%Y-%m-%d-%H-%M)-${CI_COMMIT_SHORT_SHA}-${CI_PIPELINE_ID}"

# 动态构建模式

- |

if [[ "$CI_COMMIT_BRANCH" =~ ^pre.* ]]; then

PROFILE=pre

HARBOR_ADDRESS=harbor.meta42-uat.com

# 预生产环境 Docker 认证

echo $PRE_DOCKER_AUTH_CONFIG | base64 -d > ~/.docker/config.json

ENV_DESC="预生产环境"

elif [[ "$CI_COMMIT_BRANCH" =~ ^dev.* ]]; then

PROFILE=dev

HARBOR_ADDRESS=harbor.meta42.indc.vnet.com

# 开发环境 Docker 认证

echo $PROD_DOCKER_AUTH_CONFIG | base64 -d > ~/.docker/config.json

ENV_DESC="开发环境"

elif [[ "$CI_COMMIT_BRANCH" == "main" || "$CI_COMMIT_BRANCH" =~ ^prod.* ]]; then

PROFILE=prod

HARBOR_ADDRESS=harbor.meta42.indc.vnet.com

# 生产环境 Docker 认证

echo $PROD_DOCKER_AUTH_CONFIG | base64 -d > ~/.docker/config.json

ENV_DESC="生产环境"

else

echo "❌ 未识别的分支,构建终止"

exit 1

fi

- |

cat <<EOF > .cache/go/.ENV_FILE.env

PROJECT_NAME=$PROJECT_NAME

IMAGE_NAME=$IMAGE_NAME

TAG_NAME=$TAG_NAME

HARBOR_ADDRESS=$HARBOR_ADDRESS

EOF

- cat .cache/go/.ENV_FILE.env

- docker build --build-arg PROFILE=$PROFILE -t $HARBOR_ADDRESS/$PROJECT_NAME/$IMAGE_NAME:$TAG_NAME .

- docker push $HARBOR_ADDRESS/$PROJECT_NAME/$IMAGE_NAME:$TAG_NAME

- MSG="✅ 已推送镜像 \"$HARBOR_ADDRESS/$PROJECT_NAME/$IMAGE_NAME:$TAG_NAME\" 到$ENV_DESC"

- echo "$MSG"

- docker rmi $HARBOR_ADDRESS/$PROJECT_NAME/$IMAGE_NAME:$TAG_NAME

artifacts:

paths:

- .cache/go/.ENV_FILE.env

expire_in: 1 week

rules:

- if: '$CI_COMMIT_BRANCH =~ /^pre.*/' # 匹配 pre 开头的分支,如 pre-2025-05-15

when: on_success

- if: '$CI_COMMIT_BRANCH =~ /^dev.*/' # 匹配 dev 开头的分支

when: on_success

- if: '$CI_COMMIT_BRANCH == "main"' # 只匹配 main 分支

when: on_success

- if: '$CI_COMMIT_BRANCH =~ /^prod.*/' # 匹配 prod 开头的分支,如 prod-2025-05-15

when: on_success

deploy_k8s_job:

image: harbor.meta42.indc.vnet.com/tools/kubectl:1.23.0

stage: deploy_k8s

tags:

- K8S-Runner

script:

- mkdir -pv ~/.kube/

- ls -lh

# Load the image variables written by docker_build_job

- source .cache/go/.ENV_FILE.env

- |

if [[ "$CI_COMMIT_BRANCH" =~ ^pre.* ]]; then

echo '部署服务到预生产环境'

PROFILE=pre

echo "$PRE_KUBE_CONFIG" | base64 -d > ~/.kube/config

cat ~/.kube/config

echo '192.168.248.30 apiserver.cluster.local' | tee -a /etc/hosts

cat /etc/hosts

sed -e "s/{{.IMAGE_NAME}}/$IMAGE_NAME/g" \

-e "s/{{.PROJECT_NAME}}/$PROJECT_NAME/g" \

-e "s/{{.TAG_NAME}}/$TAG_NAME/g" \

-e "s/{{.HARBOR_ADDRESS}}/$HARBOR_ADDRESS/g" \

-e "s/{{.PROFILE}}/$PROFILE/g" \

k8s/deployment-pre.tml > k8s/deployment-pre.yaml

cat k8s/deployment-pre.yaml

kubectl apply -f k8s/deployment-pre.yaml

# 检查 pod 启动情况

MAX_RETRIES=60

SLEEP_SECONDS=1

TIMEOUT=$((MAX_RETRIES * SLEEP_SECONDS))

echo "⏳ 等待新创建的 Pod 启动并全部就绪最长 $TIMEOUT 秒..."

NOW=$(date +%s)

for ((i = 1; i <= MAX_RETRIES; i++)); do

# 获取当前 Pod 信息

PODS_JSON=$(kubectl get pods -n "$PROJECT_NAME" -l k8s-app="$IMAGE_NAME" -o json)

# 获取就绪、运行状态且在最近 10 秒内创建的 Pod 数

READY_COUNT=$(echo "$PODS_JSON" | jq --argjson now "$NOW" '

[.items[]

| select(.status.phase == "Running")

| select((.status.containerStatuses // []) | (length > 0 and all(.[]; .ready)))

| select((($now - (.metadata.creationTimestamp | sub("Z$"; "") | sub("T"; " ") | strptime("%Y-%m-%d %H:%M:%S") | mktime)) < 10))

] | length

')

# 获取预期副本数

EXPECTED_REPLICAS=$(kubectl get deploy -n "$PROJECT_NAME" "$IMAGE_NAME" -o jsonpath="{.spec.replicas}")

echo "第 $i 次检测:新就绪 Pod 数量: $READY_COUNT / 期望副本数: $EXPECTED_REPLICAS"

if [[ "$READY_COUNT" -eq "$EXPECTED_REPLICAS" ]]; then

echo "✅ 最新部署的 Pod 均已就绪"

kubectl get pods -n "$PROJECT_NAME" -l k8s-app="$IMAGE_NAME" -o wide

exit 0

fi

if [[ $i -eq $MAX_RETRIES ]]; then

echo "❌ 超时(${TIMEOUT} 秒):新创建的 Pod 未全部就绪"

kubectl get pods -n "$PROJECT_NAME" -l k8s-app="$IMAGE_NAME" -o wide

kubectl describe deploy -n "$PROJECT_NAME" "$IMAGE_NAME"

exit 1

fi

sleep "$SLEEP_SECONDS"

done

grep -v '192.168.248.30 apiserver.cluster.local' /etc/hosts > /tmp/hosts && cat /tmp/hosts > /etc/hosts

elif [[ "$CI_COMMIT_BRANCH" =~ ^dev.* ]]; then

echo '部署服务到开发环境'

PROFILE=dev

echo "$DEV_KUBE_CONFIG" | base64 -d > ~/.kube/config

cat ~/.kube/config

#echo '192.168.100.30 dev-apiserver.cluster.local' | tee -a /etc/hosts

cat /etc/hosts

sed -e "s/{{.IMAGE_NAME}}/$IMAGE_NAME/g" \

-e "s/{{.PROJECT_NAME}}/$PROJECT_NAME/g" \

-e "s/{{.TAG_NAME}}/$TAG_NAME/g" \

-e "s/{{.HARBOR_ADDRESS}}/$HARBOR_ADDRESS/g" \

-e "s/{{.PROFILE}}/$PROFILE/g" \

k8s/deployment-dev.tml > k8s/deployment-dev.yaml

cat k8s/deployment-dev.yaml

kubectl apply -f k8s/deployment-dev.yaml

# 检查 pod 启动情况

MAX_RETRIES=60 # 开发环境可以减少等待时间

SLEEP_SECONDS=1

TIMEOUT=$((MAX_RETRIES * SLEEP_SECONDS))

echo "⏳ 等待新创建的 Pod 启动并全部就绪最长 $TIMEOUT 秒..."

NOW=$(date +%s)

for ((i = 1; i <= MAX_RETRIES; i++)); do

# 获取当前 Pod 信息

PODS_JSON=$(kubectl get pods -n "$PROJECT_NAME" -l k8s-app="$IMAGE_NAME" -o json)

# 获取就绪、运行状态且在最近 10 秒内创建的 Pod 数

READY_COUNT=$(echo "$PODS_JSON" | jq --argjson now "$NOW" '

[.items[]

| select(.status.phase == "Running")

| select((.status.containerStatuses // []) | (length > 0 and all(.[]; .ready)))

| select((($now - (.metadata.creationTimestamp | sub("Z$"; "") | sub("T"; " ") | strptime("%Y-%m-%d %H:%M:%S") | mktime)) < 10))

] | length

')

# 获取预期副本数

EXPECTED_REPLICAS=$(kubectl get deploy -n "$PROJECT_NAME" "$IMAGE_NAME" -o jsonpath="{.spec.replicas}")

echo "第 $i 次检测:新就绪 Pod 数量: $READY_COUNT / 期望副本数: $EXPECTED_REPLICAS"

if [[ "$READY_COUNT" -eq "$EXPECTED_REPLICAS" ]]; then

echo "✅ 最新部署的 Pod 均已就绪"

kubectl get pods -n "$PROJECT_NAME" -l k8s-app="$IMAGE_NAME" -o wide

exit 0

fi

if [[ $i -eq $MAX_RETRIES ]]; then

echo "❌ 超时(${TIMEOUT} 秒):新创建的 Pod 未全部就绪"

kubectl get pods -n "$PROJECT_NAME" -l k8s-app="$IMAGE_NAME" -o wide

kubectl describe deploy -n "$PROJECT_NAME" "$IMAGE_NAME"

exit 1

fi

sleep "$SLEEP_SECONDS"

done

#grep -v '192.168.100.30 dev-apiserver.cluster.local' /etc/hosts > /tmp/hosts && cat /tmp/hosts > /etc/hosts

elif [[ "$CI_COMMIT_BRANCH" == "main" || "$CI_COMMIT_BRANCH" =~ ^prod.* ]]; then

echo '部署服务到生产环境'

PROFILE=prod

echo "$PROD_KUBE_CONFIG" | base64 -d > ~/.kube/config

cat ~/.kube/config

echo '192.168.233.30 lb.kubesphere.local' | tee -a /etc/hosts

cat /etc/hosts

sed -e "s/{{.IMAGE_NAME}}/$IMAGE_NAME/g" \

-e "s/{{.PROJECT_NAME}}/$PROJECT_NAME/g" \

-e "s/{{.TAG_NAME}}/$TAG_NAME/g" \

-e "s/{{.HARBOR_ADDRESS}}/$HARBOR_ADDRESS/g" \

-e "s/{{.PROFILE}}/$PROFILE/g" \

k8s/deployment-prod.tml > k8s/deployment-prod.yaml

cat k8s/deployment-prod.yaml

kubectl apply -f k8s/deployment-prod.yaml

# 检查 pod 启动情况

MAX_RETRIES=60

SLEEP_SECONDS=1

TIMEOUT=$((MAX_RETRIES * SLEEP_SECONDS))

echo "⏳ 等待新创建的 Pod 启动并全部就绪最长 $TIMEOUT 秒..."

NOW=$(date +%s)

for ((i = 1; i <= MAX_RETRIES; i++)); do

# 获取当前 Pod 信息

PODS_JSON=$(kubectl get pods -n "$PROJECT_NAME" -l k8s-app="$IMAGE_NAME" -o json)

# 获取就绪、运行状态且在最近 10 秒内创建的 Pod 数

READY_COUNT=$(echo "$PODS_JSON" | jq --argjson now "$NOW" '

[.items[]

| select(.status.phase == "Running")

| select((.status.containerStatuses // []) | (length > 0 and all(.[]; .ready)))

| select((($now - (.metadata.creationTimestamp | sub("Z$"; "") | sub("T"; " ") | strptime("%Y-%m-%d %H:%M:%S") | mktime)) < 10))

] | length

')

# 获取预期副本数

EXPECTED_REPLICAS=$(kubectl get deploy -n "$PROJECT_NAME" "$IMAGE_NAME" -o jsonpath="{.spec.replicas}")

echo "第 $i 次检测:新就绪 Pod 数量: $READY_COUNT / 期望副本数: $EXPECTED_REPLICAS"

if [[ "$READY_COUNT" -eq "$EXPECTED_REPLICAS" ]]; then

echo "✅ 最新部署的 Pod 均已就绪"

kubectl get pods -n "$PROJECT_NAME" -l k8s-app="$IMAGE_NAME" -o wide

exit 0

fi

if [[ $i -eq $MAX_RETRIES ]]; then

echo "❌ 超时(${TIMEOUT} 秒):新创建的 Pod 未全部就绪"

kubectl get pods -n "$PROJECT_NAME" -l k8s-app="$IMAGE_NAME" -o wide

kubectl describe deploy -n "$PROJECT_NAME" "$IMAGE_NAME"

exit 1

fi

sleep "$SLEEP_SECONDS"

done

grep -v '192.168.233.30 lb.kubesphere.local' /etc/hosts > /tmp/hosts && cat /tmp/hosts > /etc/hosts

fi

rules:

- if: '$CI_COMMIT_BRANCH =~ /^pre.*/' # 匹配 pre 开头的分支,如 pre-2025-05-15

when: on_success

- if: '$CI_COMMIT_BRANCH =~ /^dev.*/' # 匹配 dev 开头的分支

when: on_success

- if: '$CI_COMMIT_BRANCH == "main"' # 只匹配 main 分支

when: manual # 手动触发 main 分支部署

- if: '$CI_COMMIT_BRANCH =~ /^prod.*/' # 匹配 prod 开头的分支,如 prod-2025-05-15

when: manual # deployments from prod-prefixed branches are triggered manually

五、Hands-on Testing

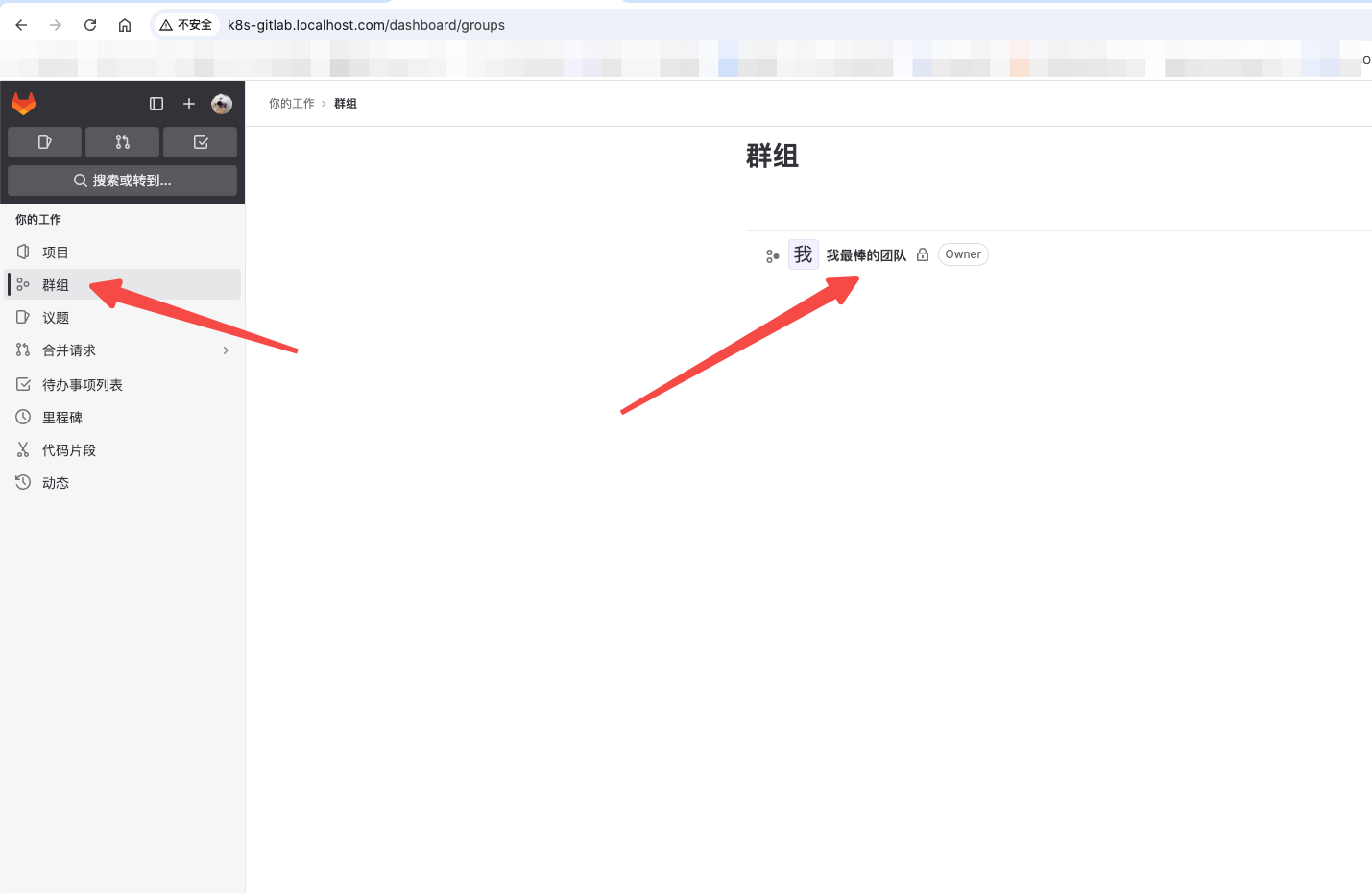

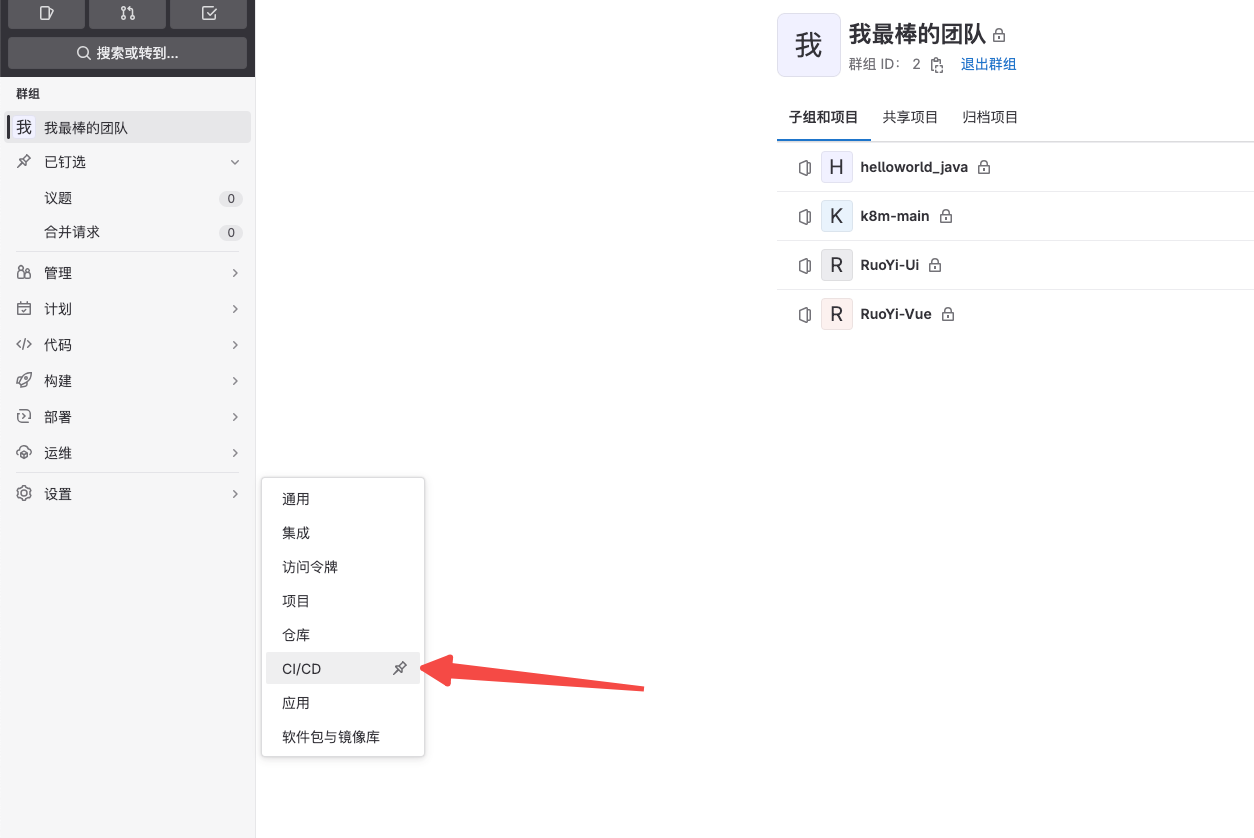

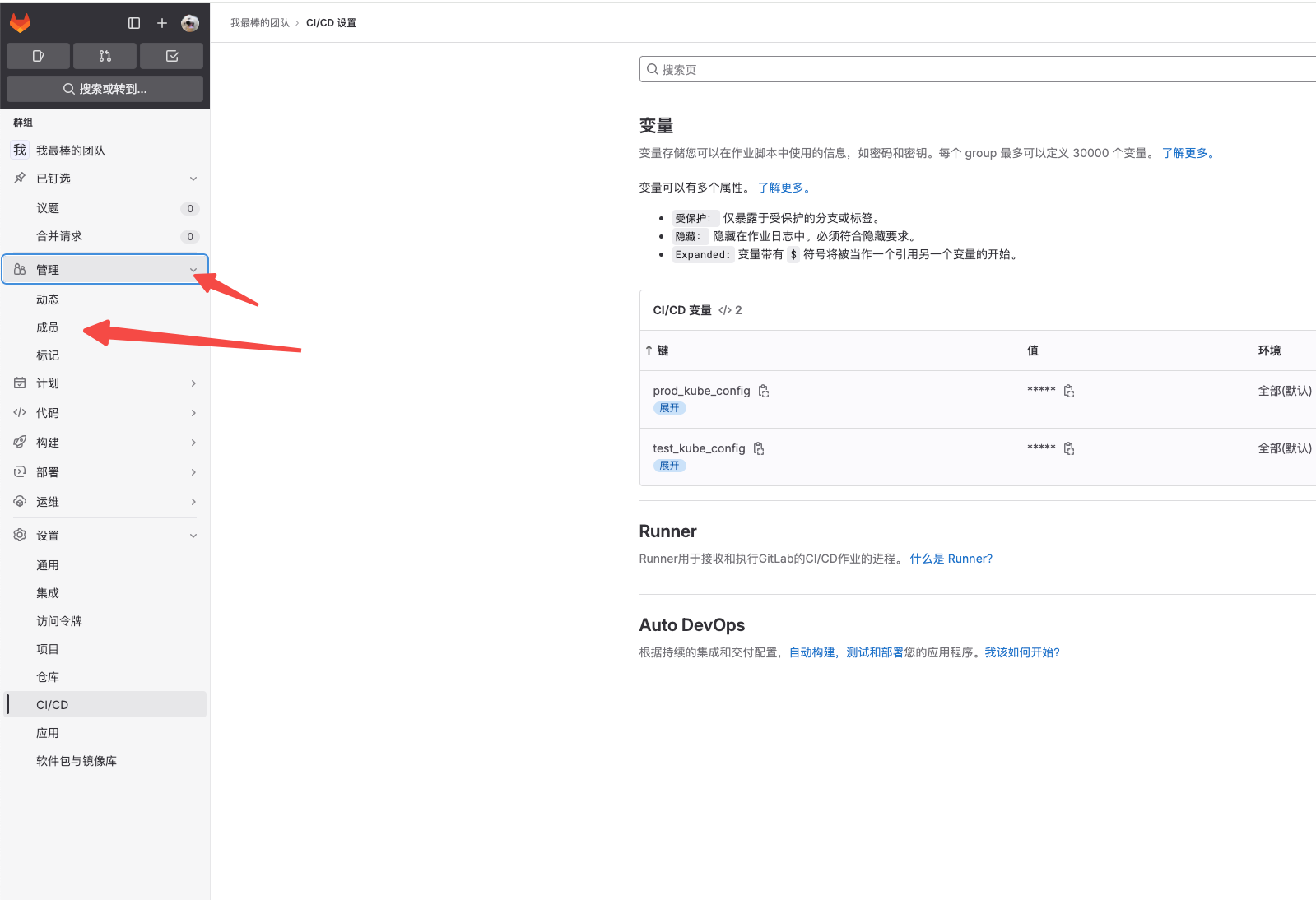

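1. Create variables in GitLab

First create a group, then configure the variables at the group level.

The pipeline talks to k8s using a client certificate, so the corresponding credentials have to be prepared in advance.

In general, the value of each variable is the base64-encoded content (encoding, not encryption). A file can be encoded with: cat ~/.kube/config | base64 -w0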
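As a rough sketch of that round trip, the snippet below encodes a file the way the variable value is prepared and decodes it the way the deploy job's `base64 -d` step does. The temporary file and its contents are placeholders for illustration, not a real kubeconfig, and `base64 -w0` assumes GNU coreutils.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Placeholder file standing in for ~/.kube/config (assumption for this sketch).
SAMPLE_FILE=$(mktemp)
printf 'apiVersion: v1\nkind: Config\n' > "$SAMPLE_FILE"

# Encode onto a single line; this string is what would be pasted into the GitLab variable.
ENCODED=$(base64 -w0 < "$SAMPLE_FILE")

# Decode the same way the deploy job does before it calls kubectl.
DECODED_FILE=$(mktemp)
echo "$ENCODED" | base64 -d > "$DECODED_FILE"

# The decoded content should match the original content.
if [[ "$(cat "$SAMPLE_FILE")" == "$(cat "$DECODED_FILE")" ]]; then
  ROUND_TRIP=ok
else
  ROUND_TRIP=mismatch
fi
echo "round trip: $ROUND_TRIP"
```

Keeping the encoded value on one line (`-w0`) avoids line breaks inside the variable, which is why the decode step in the job can simply pipe the variable through `base64 -d`.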

Note: if a variable is marked "Protected", the branch that uses it must be protected as well.

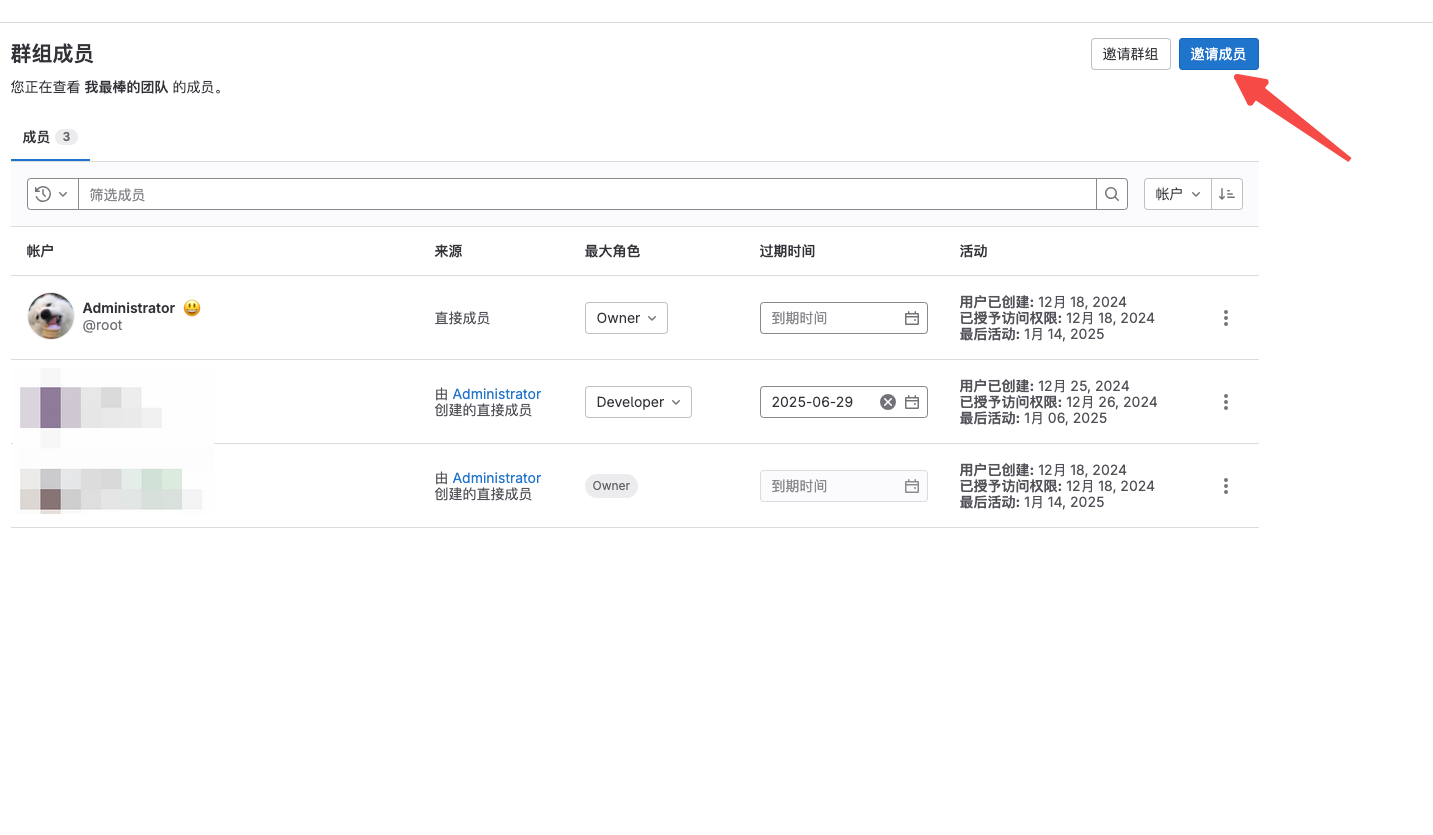

After that, add the relevant users to the group so that they have permission to use these variables.

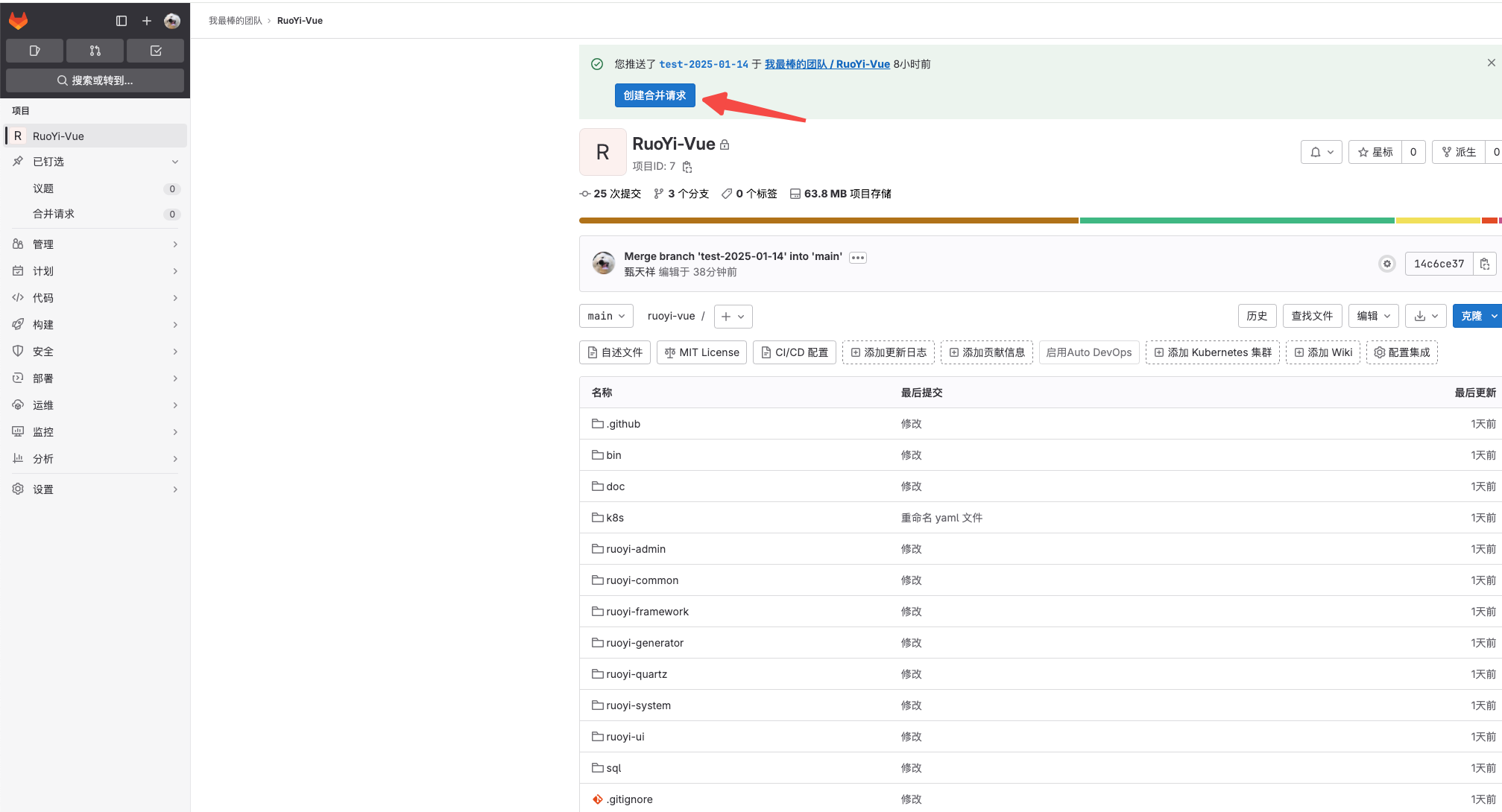

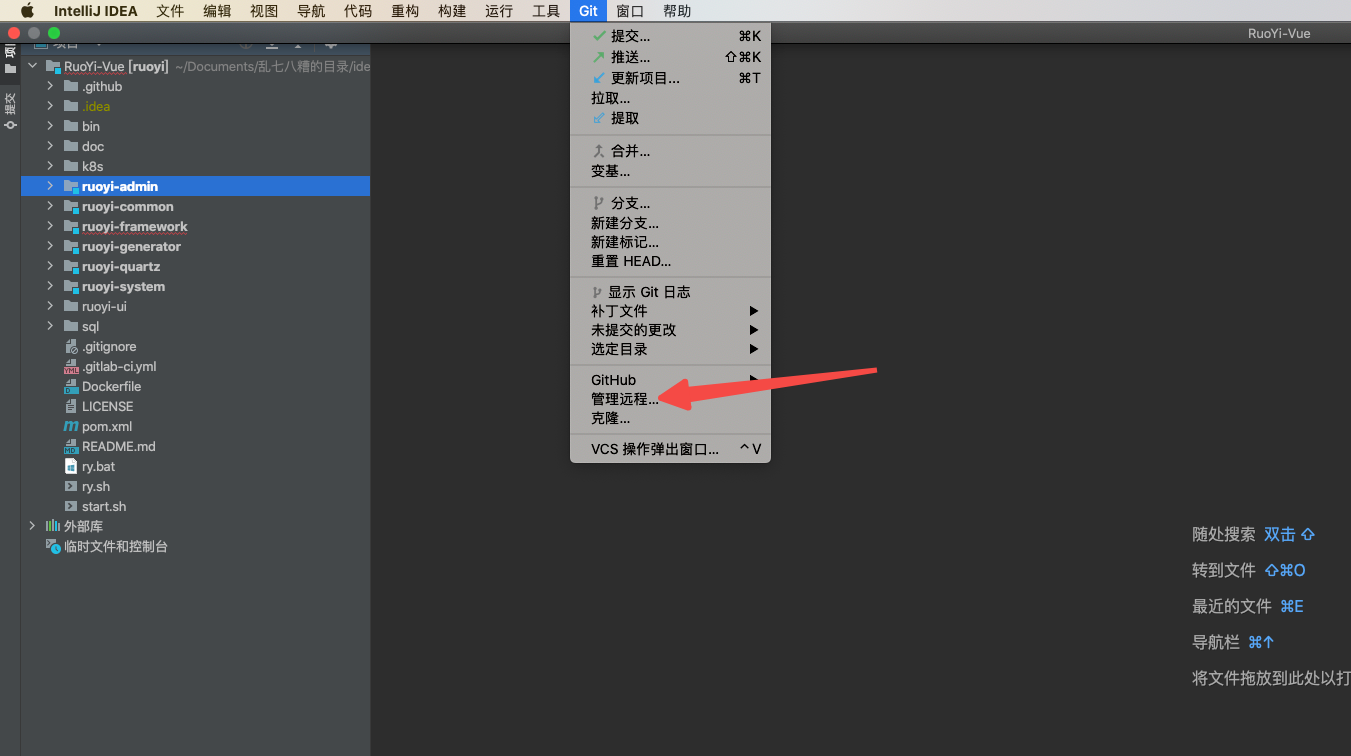

2. Configure the remote repository

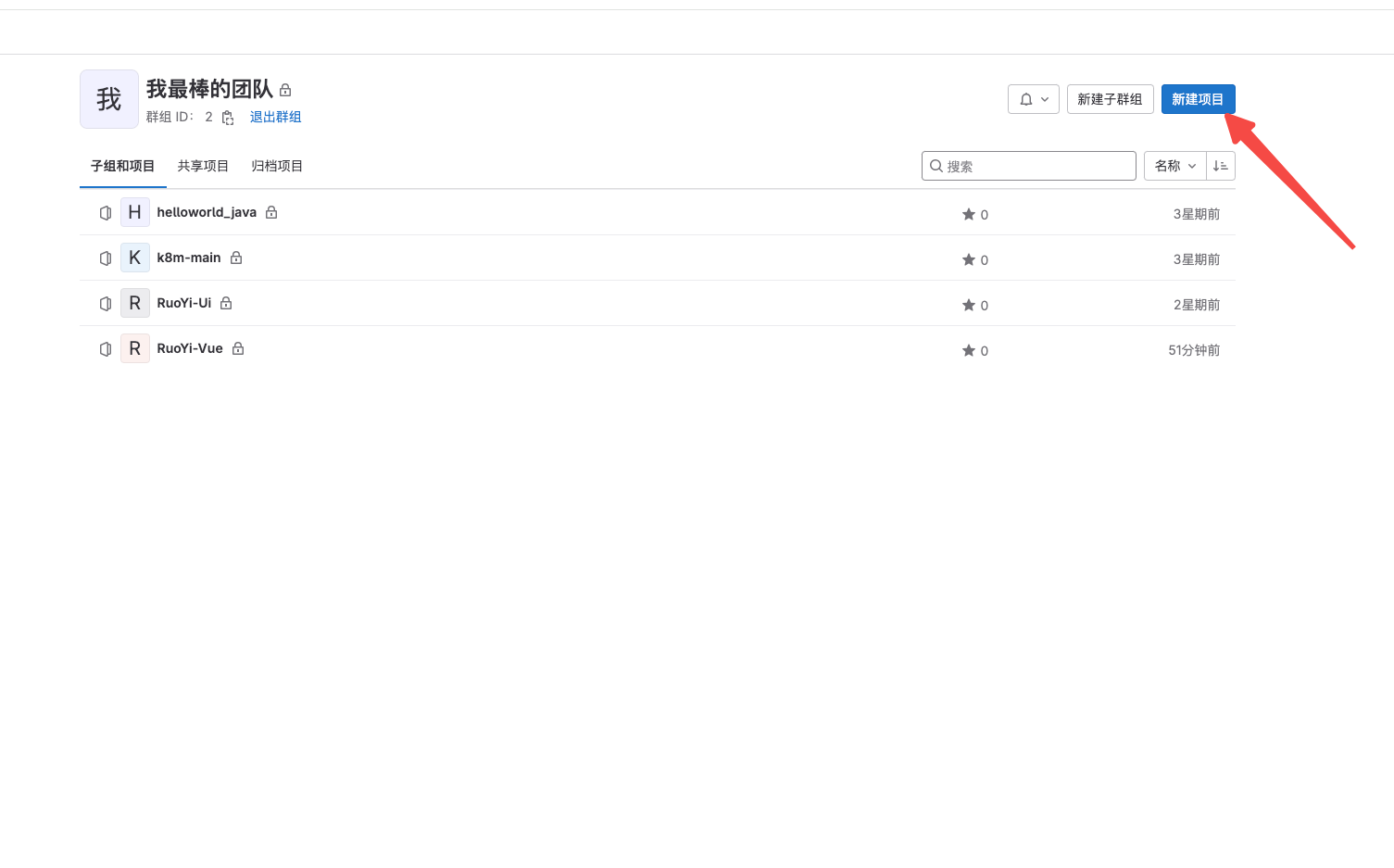

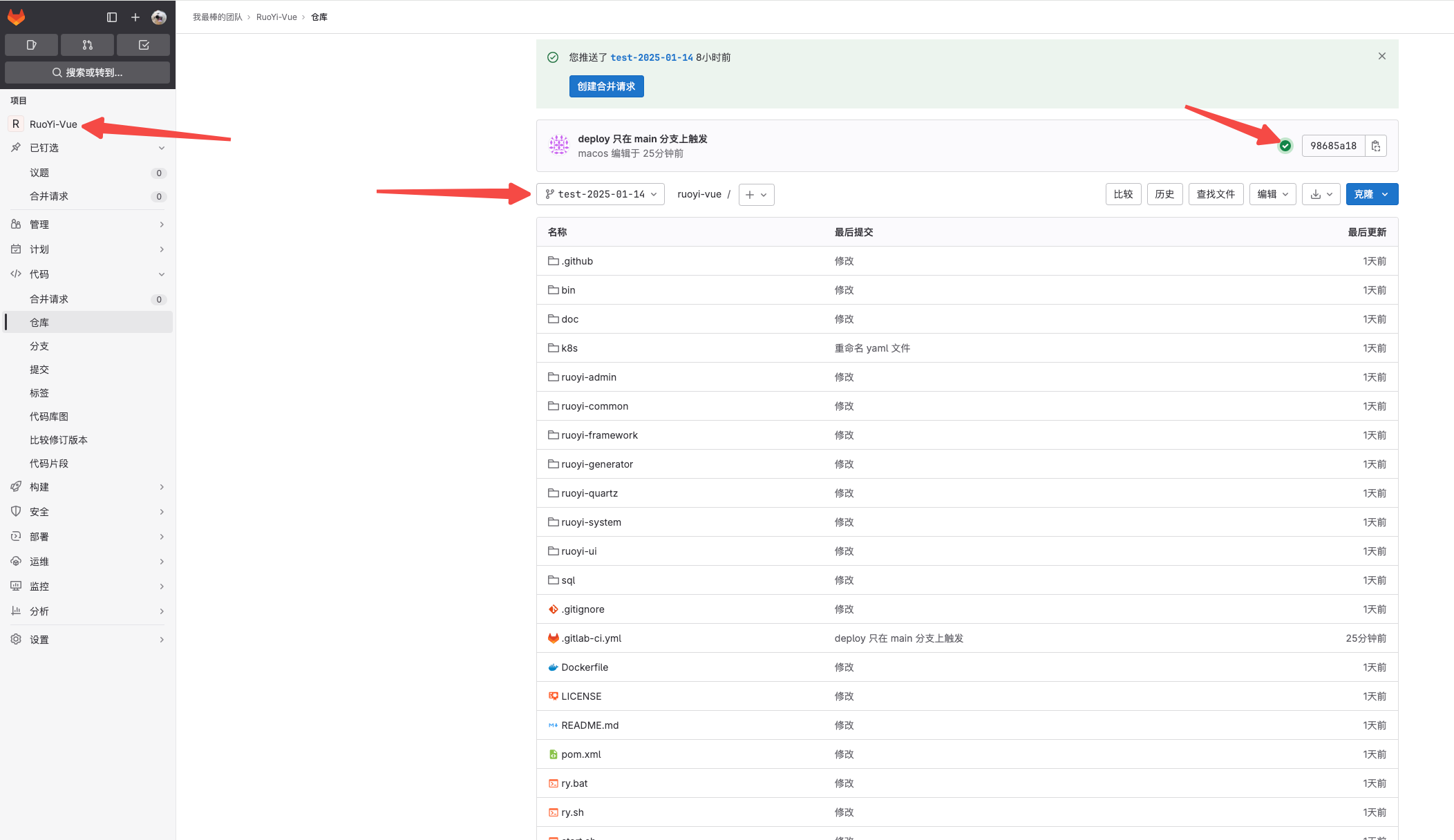

First create an empty project: log in to your GitLab account, open your group, and create a new project there.

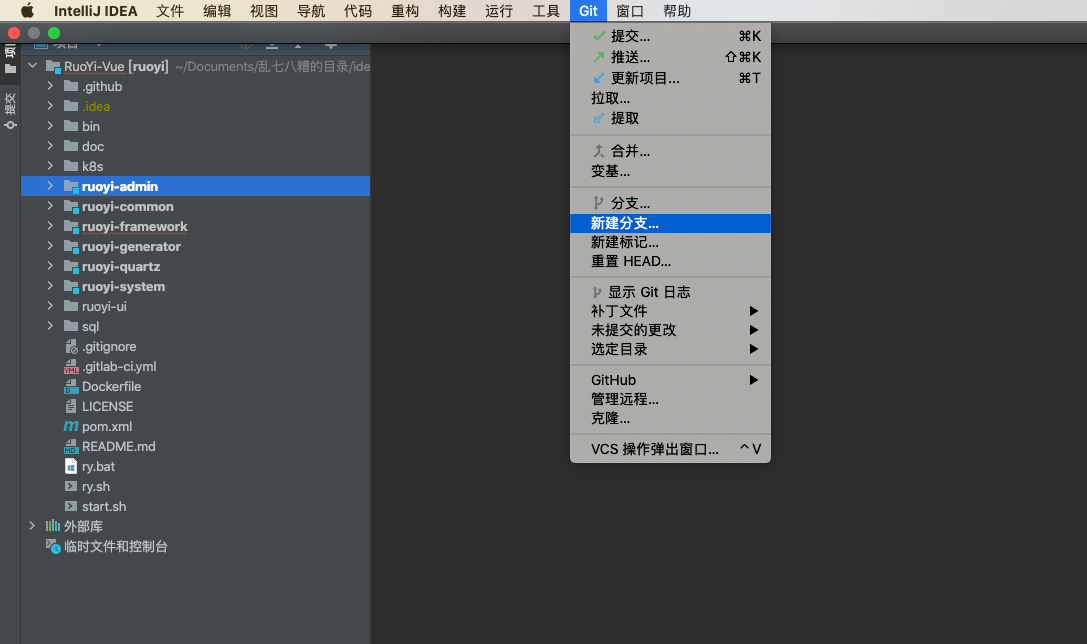

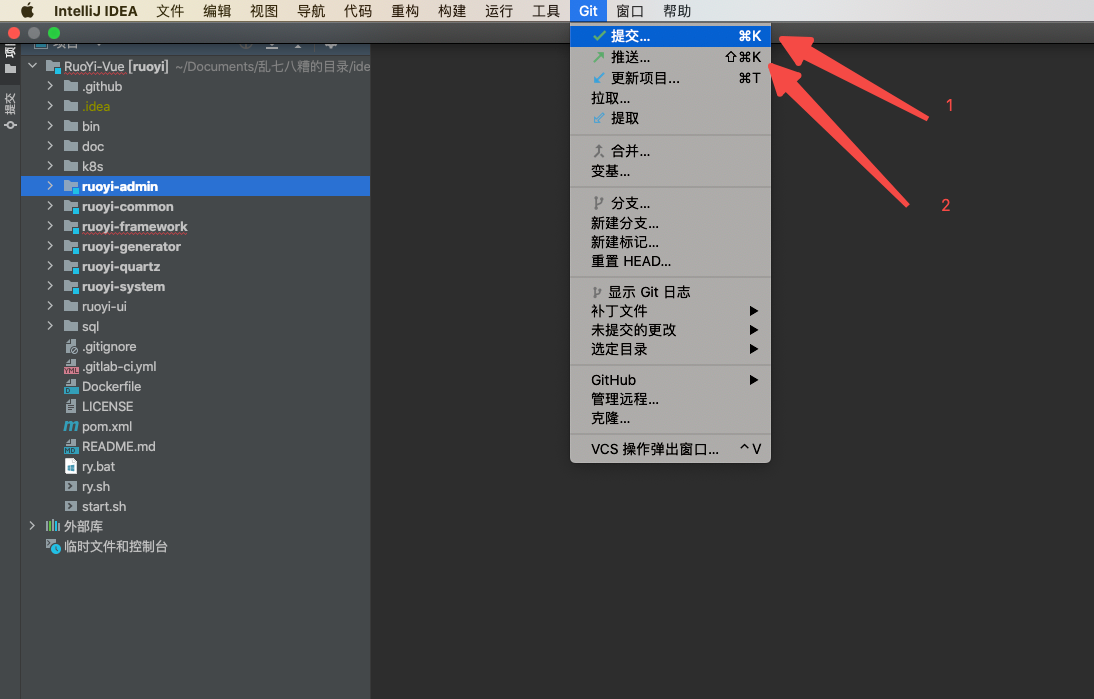

Then create a test-xxx branch and push your project code to the repository.

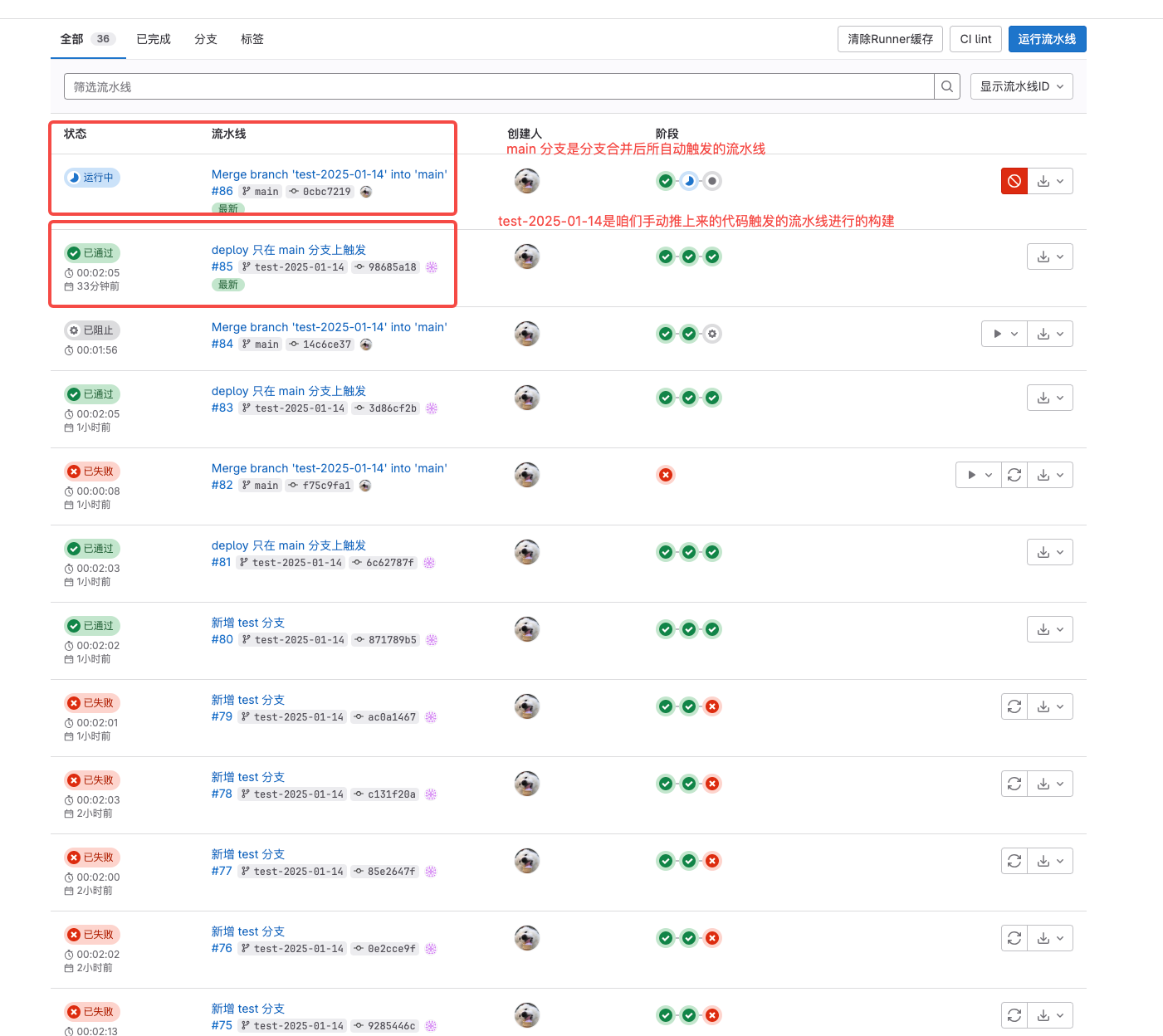

3. Check the pipeline build

If nothing goes wrong, the pipeline will show up on the GitLab page.

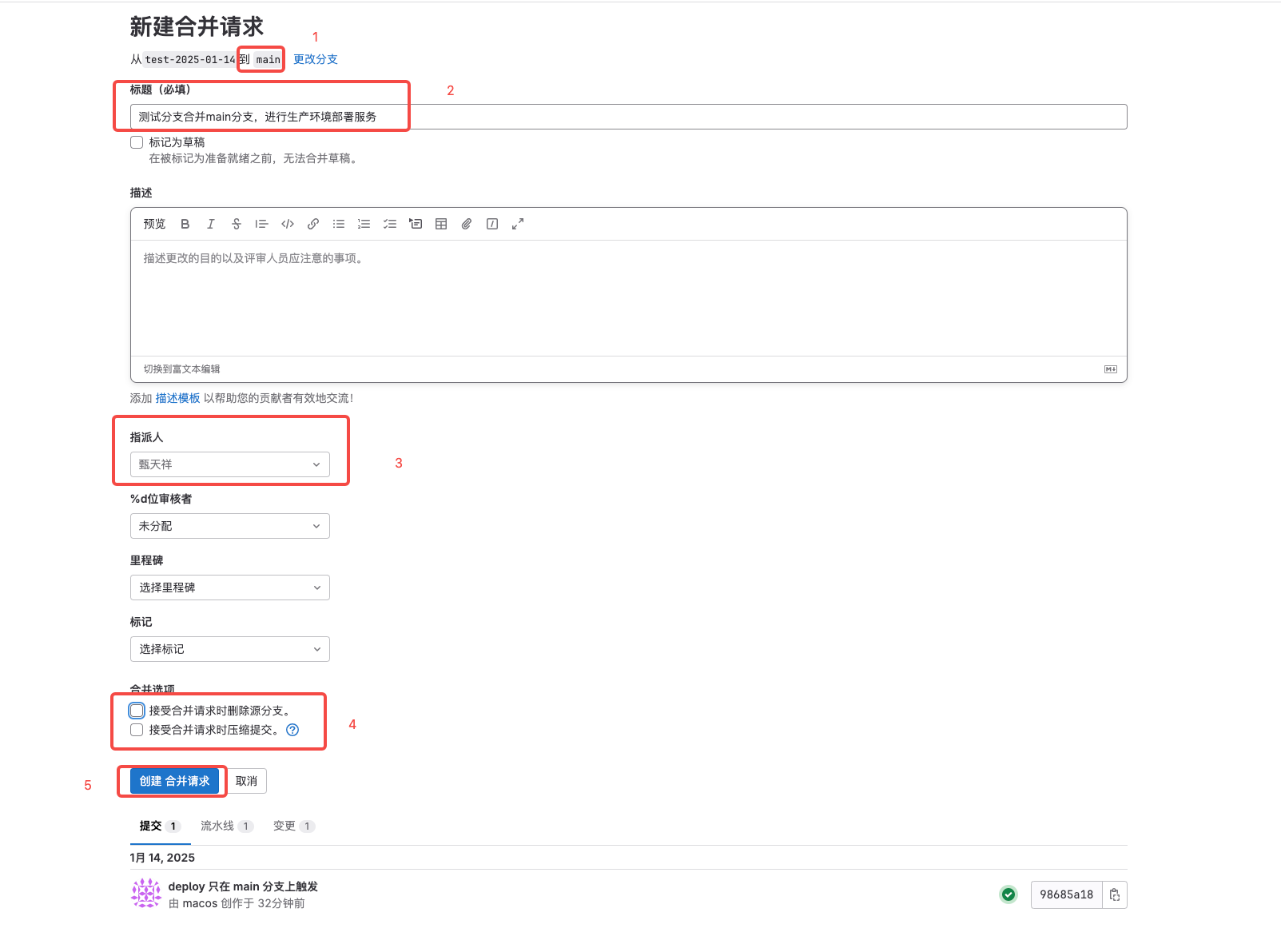

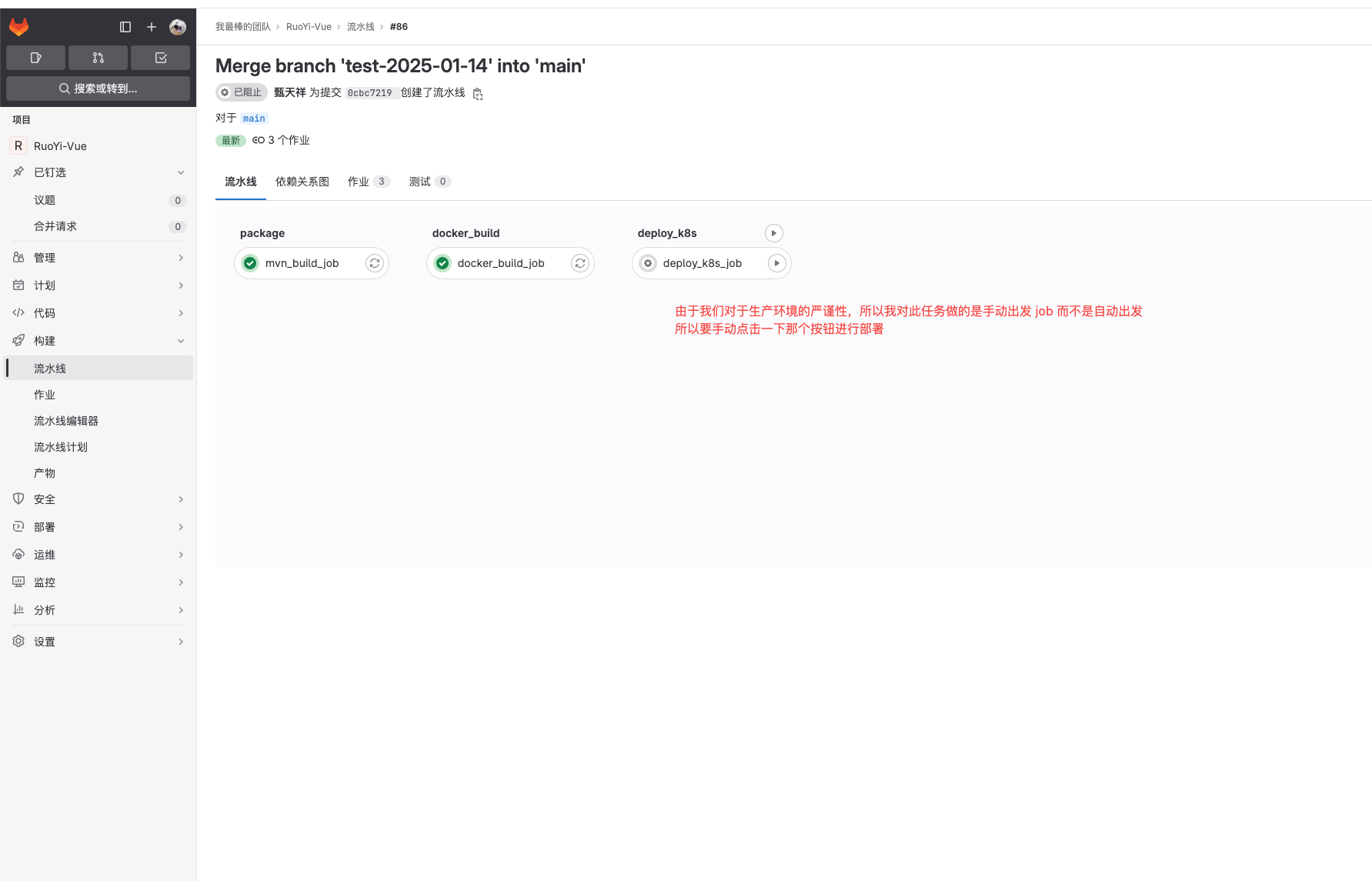

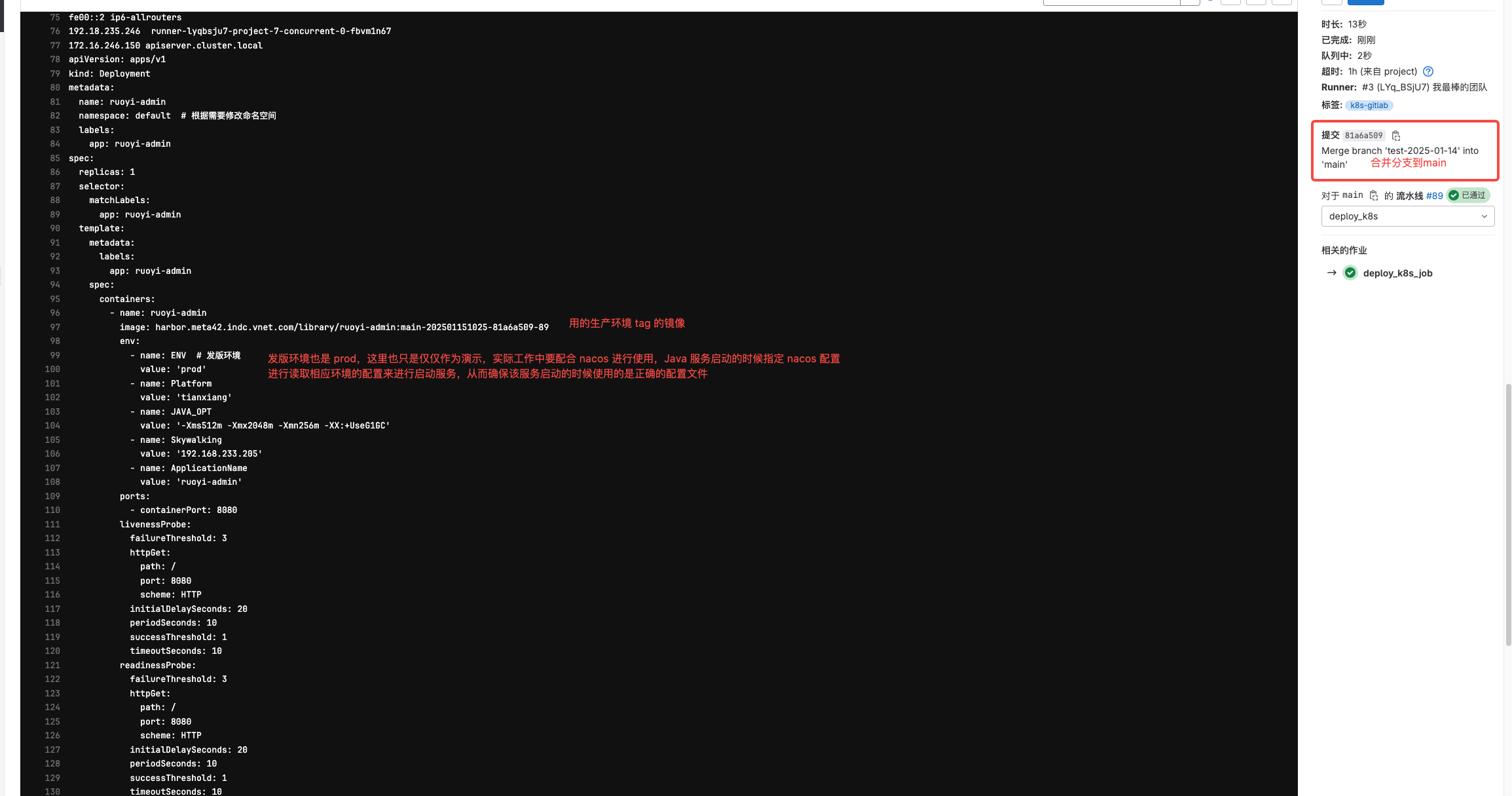

4. Merge a branch to deploy to production

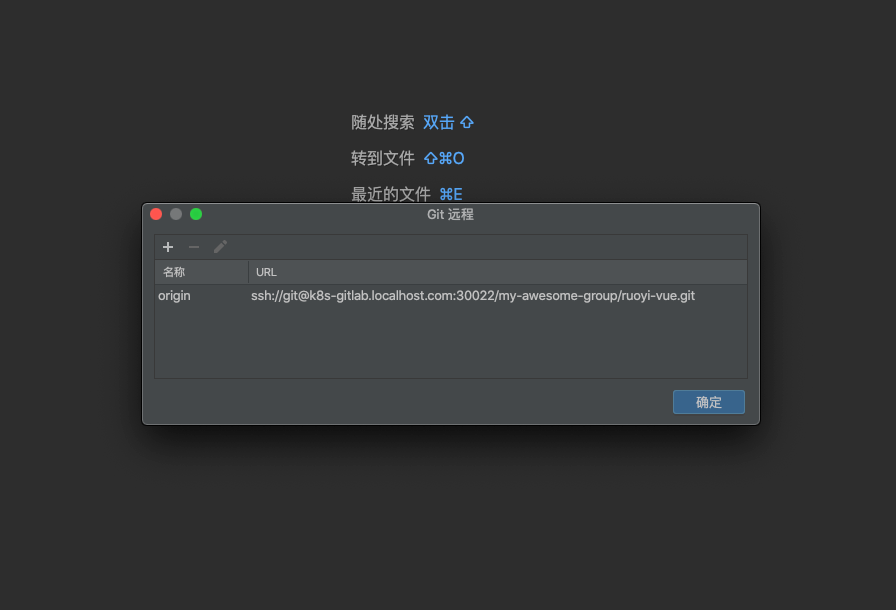

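My pipeline checks several branch patterns and runs the actions for whichever branch the current pipeline is running on. So when a branch is merged into main, the main pipeline is triggered and the service ends up deployed to production. Note that the deploy job on main is set to when: manual, so it still has to be started by hand.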
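The branch-to-environment decision can be sketched as a small shell function. `resolve_profile` is a hypothetical helper written for this illustration (the pipelines inline the same if/elif chain rather than calling a function), and the sample branch names are made up.

```shell
#!/usr/bin/env bash

# Hypothetical helper: map a branch name to an environment profile,
# using the same patterns as the if/elif chain in the pipeline scripts.
resolve_profile() {
  local branch="$1"
  if [[ "$branch" =~ ^pre.* ]]; then
    echo "pre"
  elif [[ "$branch" =~ ^dev.* ]]; then
    echo "dev"
  elif [[ "$branch" == "main" || "$branch" =~ ^prod.* ]]; then
    echo "prod"
  else
    # No pattern matches, so this branch maps to no environment.
    return 1
  fi
}

# Print the profile each sample branch would resolve to.
for b in dev-login pre-2025-05-15 main prod-2025-05-15; do
  echo "$b -> $(resolve_profile "$b")"
done
```

A branch whose name matches none of the patterns (for example a feature branch) makes the function return a non-zero status, which mirrors the fact that the `rules:` blocks only list the pre, dev, main, and prod patterns.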