Installing and Deploying MySQL Operator on Kubernetes

I. Introduction

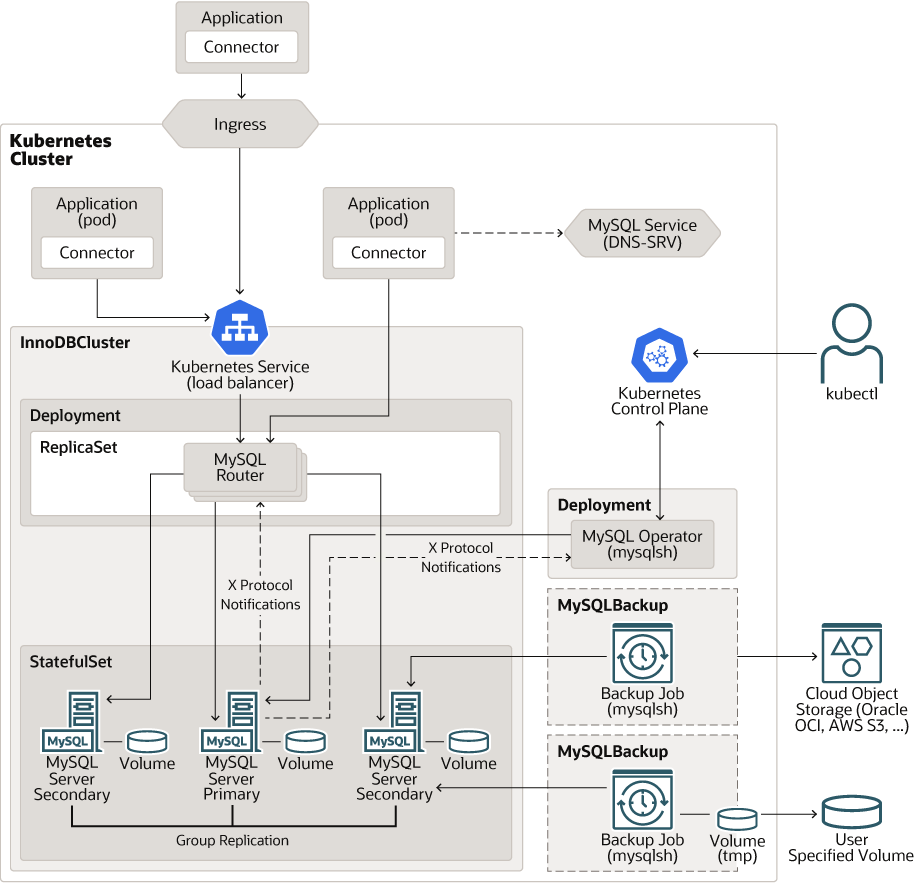

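MySQL Operator for Kubernetes is an operator focused on managing one or more MySQL InnoDB Clusters, each consisting of a group of MySQL servers and MySQL Routers. The MySQL Operator itself runs inside the Kubernetes cluster and is controlled by a Kubernetes Deployment, which ensures that it stays available and running.

The MySQL Operator is deployed in a Kubernetes namespace and watches all InnoDB Clusters and related resources. To perform these tasks, the operator subscribes to the Kubernetes API server for update events and connects to the managed MySQL Server instances as needed. On top of the Kubernetes controllers, the operator configures the MySQL servers, replication using MySQL Group Replication, and MySQL Router.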
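The watch-and-react behaviour described here follows the usual Kubernetes controller pattern. The sketch below is a toy illustration in Python, not the operator's actual code; `ClusterSpec`, `reconcile` and the action strings are hypothetical names made up for this example.

```python
from dataclasses import dataclass


@dataclass
class ClusterSpec:
    """Hypothetical, simplified view of an InnoDB Cluster resource."""
    name: str
    desired_instances: int


def reconcile(spec: ClusterSpec, running_instances: int) -> list[str]:
    """Return the actions needed to move the observed state to the desired one."""
    actions = []
    # Scale up: add one server pod per missing instance.
    for index in range(running_instances, spec.desired_instances):
        actions.append(f"create {spec.name}-{index}")
    # Scale down: remove surplus pods, highest ordinal first.
    for index in range(running_instances - 1, spec.desired_instances - 1, -1):
        actions.append(f"delete {spec.name}-{index}")
    return actions


# Each update event from the API server would trigger one reconcile pass.
print(reconcile(ClusterSpec("mysql-cluster", 3), running_instances=1))
```

When the observed state already matches the desired state, the function returns an empty list, which is the steady state a controller aims for.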

II. Deployment

1. Deploying with Helm

1. Configure the repository

[root@k8s-master1 mysql-cluster]# helm repo add mysql-operator https://mysql.github.io/mysql-operator/

[root@k8s-master1 mysql-cluster]# helm repo update

2. Download the offline packages

[root@k8s-master1 mysql-cluster]# helm search repo mysql --versions

NAME CHART VERSION APP VERSION DESCRIPTION

mysql-operator/mysql-innodbcluster 2.2.5 9.4.0 MySQL InnoDB Cluster Helm Chart for deploying M...

mysql-operator/mysql-innodbcluster 2.1.8 8.4.6 MySQL InnoDB Cluster Helm Chart for deploying M...

mysql-operator/mysql-innodbcluster 2.0.19 8.0.43 MySQL InnoDB Cluster Helm Chart for deploying M...

mysql-operator/mysql-operator 2.2.5 9.4.0-2.2.5 MySQL Operator Helm Chart for deploying MySQL I...

mysql-operator/mysql-operator 2.1.8 8.4.6-2.1.8 MySQL Operator Helm Chart for deploying MySQL I...

mysql-operator/mysql-operator 2.0.19 8.0.43-2.0.19 MySQL Operator Helm Chart for deploying MySQL I...
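The listing pairs each chart version with the MySQL version it ships, so the `--version` passed to `helm pull` has to be one of the chart versions in the second column. A small Python sketch of that lookup, with the rows copied from the output above; the `CHARTS` table and the `chart_version_for` helper are illustrative names, not part of Helm.

```python
# (chart version, MySQL version) pairs taken from the `helm search repo` output.
CHARTS = [
    ("2.2.5", "9.4.0"),
    ("2.1.8", "8.4.6"),
    ("2.0.19", "8.0.43"),
]


def chart_version_for(mysql_version: str) -> str:
    """Return the chart version that ships the given MySQL version."""
    for chart_version, app_version in CHARTS:
        if app_version == mysql_version:
            return chart_version
    raise KeyError(f"no chart found for MySQL {mysql_version}")


for mysql_version in ("9.4.0", "8.0.43"):
    version = chart_version_for(mysql_version)
    print(f"helm pull mysql-operator/mysql-innodbcluster --version={version} --untar")
```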

[root@k8s-master1 mysql-cluster]# helm pull mysql-operator/mysql-operator --version=2.2.5 --untar

[root@k8s-master1 mysql-cluster]# helm pull mysql-operator/mysql-innodbcluster --version=2.2.5 --untar

3. Edit the configuration and start the services

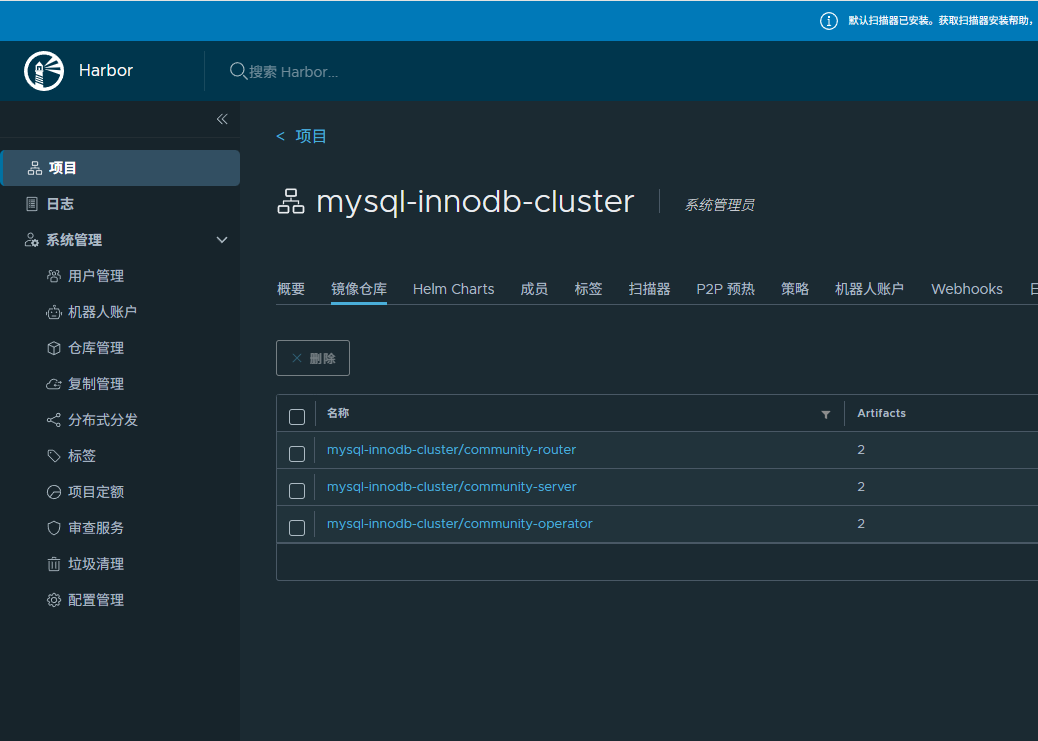

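Images must be named as shown here; otherwise Helm will not find the matching image at startup.

Change the image registry as well; this is not needed if your network can reach the default registry.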
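To see which image name the private registry has to serve, it can help to assemble the full reference from the values edited in this step. This is a sketch under the assumption that the chart joins `registry`, `repository`, `name` and `tag` in that order; `image_reference` is a hypothetical helper, not part of the chart.

```python
def image_reference(registry: str, repository: str, name: str, tag: str) -> str:
    """Join the image parts, tolerating stray slashes around each part."""
    path = "/".join(part.strip("/") for part in (registry, repository, name) if part)
    return f"{path}:{tag}"


# Values from the operator chart's values.yaml shown in this step.
print(image_reference(
    registry="harbor.tianxiang.love:30443",
    repository="mysql-innodb-cluster",
    name="community-operator",
    tag="9.4.0-2.2.5",
))
```

The printed reference is the name under which the image would need to be pushed to the registry, if the assumption about the join order holds.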

[root@k8s-master1 mysql-cluster]# cd mysql-operator/

[root@k8s-master1 mysql-operator]# vim values.yaml

image:

registry: harbor.tianxiang.love:30443

repository: mysql-innodb-cluster

name: community-operator

pullPolicy: IfNotPresent

# Overrides the image tag whose default is the chart appVersion.

tag: "9.4.0-2.2.5"

pullSecrets:

enabled: false

secretName:

envs:

imagesPullPolicy: IfNotPresent

imagesDefaultRegistry:

imagesDefaultRepository:

k8sClusterDomain: cluster.local # set this to your own cluster's ClusterDomain

# Leave everything else at the defaults

4. Start the operator service

[root@k8s-master1 mysql-operator]# helm -n middleware upgrade --install mysql-operator ./ -f values.yaml

5. Start mysql-innodb

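Again, change the image address; skip this if your network can reach the default registry.

Then set the necessary pod resource requests and limits, the scheduling policy, and tune the MySQL configuration file.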
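When tuning the configuration, the memory settings in `my.cnf` have to fit inside the container's memory limit, otherwise the pod risks being OOM-killed. A rough Python sketch of that check follows; the 50% ratio is an assumed rule of thumb for illustration, not a value from the chart or the MySQL documentation, and `parse_quantity` only handles the binary suffixes listed.

```python
# Binary suffixes as used in Kubernetes resource quantities (subset only).
SUFFIXES = {"Ki": 1024, "Mi": 1024**2, "Gi": 1024**3}


def parse_quantity(quantity: str) -> int:
    """Convert a quantity such as "4Gi" into bytes."""
    for suffix, factor in SUFFIXES.items():
        if quantity.endswith(suffix):
            return int(quantity[: -len(suffix)]) * factor
    return int(quantity)  # plain byte count


def suggested_buffer_pool(memory_limit: str, ratio: float = 0.5) -> str:
    """Suggest an innodb_buffer_pool_size (in MiB) for a container limit."""
    mib = int(parse_quantity(memory_limit) * ratio) // 1024**2
    return f"{mib}M"


print(suggested_buffer_pool("4Gi"))  # 2048M
```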

[root@k8s-master1 mysql-innodbcluster]# vim values.yaml

image:

registry: harbor.tianxiang.love:30443

repository: mysql-innodb-cluster

pullPolicy: IfNotPresent

tag: "9.4.0"

pullSecrets:

enabled: false

secretName:

# ====================== Primary server configuration (around line 60) ======================

# Important: podSpec cannot be changed after the initial setup

podSpec:

# Container resource settings

containers:

- name: mysql

resources:

requests:

memory: "2Gi"

cpu: "2"

limits:

memory: "4Gi"

cpu: "4"

# Liveness probe settings

livenessProbe:

initialDelaySeconds: 30

periodSeconds: 10

timeoutSeconds: 5

failureThreshold: 3

# Readiness probe settings

readinessProbe:

initialDelaySeconds: 5

periodSeconds: 5

timeoutSeconds: 3

failureThreshold: 3

# Pod scheduling policy

#tolerations:

# - key: "middleware"

# operator: "Equal"

# value: "true"

# effect: "NoSchedule"

affinity:

nodeAffinity:

requiredDuringSchedulingIgnoredDuringExecution:

nodeSelectorTerms:

- matchExpressions:

- key: "middleware"

operator: In

values:

- "true"

podAntiAffinity:

requiredDuringSchedulingIgnoredDuringExecution:

- labelSelector:

matchExpressions:

- key: "app.kubernetes.io/name"

operator: In

values:

- "mysql-innodbcluster-mysql-server"

topologyKey: "kubernetes.io/hostname"

serverConfig:

mycnf: |

[mysqld]

# Basic server settings

user = mysql

bind-address = 0.0.0.0

character_set_server = utf8mb4

collation-server = utf8mb4_unicode_ci

default-time_zone = '+8:00'

lower_case_table_names = 1

# interactive_timeout applies to interactive clients (e.g. the command line)

interactive_timeout = 3000

# wait_timeout applies to non-interactive clients (e.g. application connections)

wait_timeout = 1800

# Event scheduler

event_scheduler = ON

# Connections and packet size

max_connections = 2000

max_allowed_packet = 500M

# Disable rarely used storage engines

disabled_storage_engines = "MyISAM,BLACKHOLE,FEDERATED,ARCHIVE,MEMORY"

# Logging

log_error = /var/lib/mysql/mysqld.log

pid_file = /var/run/mysqld/mysqld.pid

log_bin = /var/lib/mysql/bin-log

relay_log = mysql-relay-bin

relay_log_index = mysql-relay-bin.index

relay_log_recovery = ON

log_bin_trust_function_creators = 1

log_slave_updates = ON

# Binary log

binlog_format = ROW

binlog_expire_logs_seconds = 2592000

binlog_checksum = NONE

# MGR (MySQL Group Replication) replication tuning

replica_parallel_type = LOGICAL_CLOCK

replica_parallel_workers = 4

replica_preserve_commit_order = ON

replica_net_timeout = 60

# Transactions and InnoDB parameters

transaction_isolation = REPEATABLE-READ

innodb_flush_log_at_trx_commit = 1

innodb_file_per_table = 1

innodb_flush_method = O_DIRECT

innodb_flush_neighbors = 2

# Buffer and log sizes

innodb_buffer_pool_size = 2G # must fit within the container memory limit (4Gi in podSpec)

innodb_buffer_pool_instances = 8

innodb_log_file_size = 1G

innodb_log_files_in_group = 3

innodb_log_buffer_size = 32M

max_binlog_cache_size = 1024M

# I/O and concurrency

innodb_io_capacity = 800

innodb_io_capacity_max = 1600

innodb_write_io_threads = 4

innodb_read_io_threads = 4

innodb_purge_threads = 2

innodb_adaptive_flushing = ON

# LRU policy

innodb_lru_scan_depth = 1000

innodb_old_blocks_pct = 35

innodb_change_buffer_max_size = 50

# Locks and rollback

innodb_lock_wait_timeout = 35

innodb_rollback_on_timeout = ON

# Startup and shutdown

innodb_fast_shutdown = 0

innodb_force_recovery = 0

innodb_buffer_pool_dump_at_shutdown = 1

innodb_buffer_pool_load_at_startup = 1

# Thread concurrency

thread_cache_size = 500

innodb_thread_concurrency = 0

innodb_spin_wait_delay = 30

innodb_sync_spin_loops = 100

# Memory buffers

read_buffer_size = 8M

read_rnd_buffer_size = 8M

bulk_insert_buffer_size = 128M

# Optimizer settings

optimizer_switch = "index_condition_pushdown=on,mrr=on,mrr_cost_based=on,batched_key_access=off,block_nested_loop=on"

# GROUP_CONCAT maximum length

group_concat_max_len = 102400

# Table cache

table_open_cache = 2048

# GTID support

enforce_gtid_consistency = ON

gtid_mode = ON

# Auto-increment step and offset

auto_increment_increment = 1

auto_increment_offset = 2

# Security settings

allow-suspicious-udfs = OFF

local_infile = OFF

skip-grant-tables = OFF

safe-user-create

# File paths

datadir = /var/lib/mysql

socket=/var/run/mysqld/mysql.sock # must be exactly this path

# SQL mode

sql_mode = 'STRICT_TRANS_TABLES,NO_ZERO_IN_DATE,NO_ZERO_DATE,ERROR_FOR_DIVISION_BY_ZERO,NO_ENGINE_SUBSTITUTION'

# Slow query log (optional)

# slow_query_log = 1

# slow_query_log_file = /var/lib/mysql/slow_query.log

# log_queries_not_using_indexes = ON

# Password policy (optional)

# plugin_load_add = 'validate_password.so'

# validate_password_policy = MEDIUM

# validate_password_length = 14

# SSL encryption (optional)

# ssl_ca = "/etc/cert/ca.pem"

# ssl_cert = "/etc/cert/server.crt"

# ssl_key = "/etc/cert/server.key"

# require_secure_transport = ON

# tls_version = TLSv1.2

[root@k8s-master1 mysql-innodbcluster]# helm -n middleware upgrade --install mysql-cluster ./ -f values.yaml \

--set tls.useSelfSigned=true \

--set credentials.root.user='root' \

--set credentials.root.password='123456' \

--set credentials.root.host='%' \

--set serverInstances=3 \

--set routerInstances=2 \

--set datadirVolumeClaimTemplate.storageClassName='openebs-hostpath' \

--set datadirVolumeClaimTemplate.resources.requests.storage='100Gi'
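Each `--set` key in the command above is a dotted path into the nested structure of `values.yaml`. The Python sketch below flattens a nested dictionary into the same flags, which makes the mapping explicit; `to_set_flags` is a hypothetical helper, and it ignores Helm's escaping rules for commas and dots inside values.

```python
def to_set_flags(values: dict, prefix: str = "") -> list[str]:
    """Flatten nested values into `--set key.path=value` flags."""
    flags = []
    for key, value in values.items():
        path = f"{prefix}.{key}" if prefix else key
        if isinstance(value, dict):
            flags.extend(to_set_flags(value, path))
        else:
            # Helm expects lowercase true/false for booleans.
            text = str(value).lower() if isinstance(value, bool) else str(value)
            flags.append(f"--set {path}={text}")
    return flags


values = {
    "tls": {"useSelfSigned": True},
    "serverInstances": 3,
    "datadirVolumeClaimTemplate": {
        "storageClassName": "openebs-hostpath",
        "resources": {"requests": {"storage": "100Gi"}},
    },
}
print("\n".join(to_set_flags(values)))
```

Read the other way round, the same mapping shows where each flag would go if the setting were moved into `values.yaml` instead of being passed on the command line.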

[root@k8s-master1 mysql-innodbcluster]# helm -n middleware list

NAME NAMESPACE REVISION UPDATED STATUS CHART APP VERSION

mysql-cluster middleware 1 2025-08-03 17:11:55.154994333 +0800 CST deployed mysql-innodbcluster-2.2.5 9.4.0

mysql-operator middleware 1 2025-08-03 16:04:48.309565516 +0800 CST deployed mysql-operator-2.2.5 9.4.0-2.2.5

Note 📛: if you downloaded the 8.0.43 version, the following changes are required.

Modify the template in the mysql-innodbcluster chart package:

You can simply copy and paste mine.

{{- $disable_lookups:= .Values.disableLookups }}

{{- $cluster_name := default "mycluster" .Release.Name }}

{{- $use_self_signed := default false ((.Values.tls).useSelfSigned) }}

{{- $minimalVersion := "8.0.27" }}

{{- $forbiddenVersions := list "8.0.29" }}

{{- $imagePullPolicies := list "ifnotpresent" "always" "never" }}

{{- $serverVersion := .Values.serverVersion | default .Chart.AppVersion }}

{{- if and ((.Values).routerInstances) (((.Values).router).instances) }}

{{- if ne ((.Values).routerInstances) (((.Values).router).instances) }}

{{- $err := printf "routerInstances and router.instances both are specified and have different values %d and %d. Use only one" ((.Values).routerInstances) (((.Values).router).instances) }}

{{- fail $err }}

{{- end }}

{{- end }}

{{- $routerInstances := coalesce ((.Values).routerInstances) (((.Values).router).instances) }}

{{- if lt $serverVersion $minimalVersion }}

{{- $err := printf "It is not possible to use MySQL version %s . Please, use %s or above" $serverVersion $minimalVersion }}

{{- fail $err }}

{{- end }}

{{- if has $serverVersion $forbiddenVersions }}

{{- $err := printf "It is not possible to use MySQL version %s . Please, use %s or above except %v" $serverVersion $minimalVersion $forbiddenVersions }}

{{- fail $err }}

{{- end }}

{{- if (((.Values).image).pullPolicy) }}

{{- if not (has (lower (((.Values).image).pullPolicy)) ($imagePullPolicies)) }}

{{- $err := printf "Unknown image pull policy %s. Must be one of %v" (((.Values).image).pullPolicy) $imagePullPolicies }}

{{- fail $err }}

{{- end }}

{{- else }}

{{ fail "image.pullPolicy is required" }}

{{- end }}

apiVersion: mysql.oracle.com/v2

kind: InnoDBCluster

metadata:

name: {{ $cluster_name }}

namespace: {{ .Release.Namespace }}

spec:

instances: {{ required "serverInstances is required" .Values.serverInstances }}

tlsUseSelfSigned: {{ $use_self_signed }}

router:

instances: {{ required "router.instances is required" $routerInstances }}

{{- if (((.Values).router).podSpec) }}

podSpec: {{ toYaml (((.Values).router).podSpec) | nindent 6 }}

{{- end }}

{{ if (((.Values).router).podLabels) }}

podLabels: {{ toYaml (((.Values).router).podLabels) | nindent 6 }}

{{ end }}

{{ if (((.Values).router).podAnnotations) }}

podAnnotations: {{ toYaml (((.Values).router).podAnnotations) | nindent 6 }}

{{ end }}

{{- if not $use_self_signed }}

{{- if and (((.Values).tls).routerCertAndPKsecretName) (((.Values).router).certAndPKsecretName) }}

{{- if ne (((.Values).tls).routerCertAndPKsecretName) (((.Values).router).certAndPKsecretName) }}

{{- $err := printf "tls.routerCertAndPKsecretName and router.certAndPKsecretName are both specified and have different values %s and %s. Use only one" (((.Values).tls).routerCertAndPKsecretName) (((.Values).router).certAndPKsecretName) }}

{{- fail $err }}

{{- end }}

{{- end }}

{{- $default_secret_name := printf "%s-router-tls" $cluster_name }}

{{- $secret_name := coalesce ((.Values.tls).routerCertAndPKsecretName) ((.Values.router).certAndPKsecretName) $default_secret_name}}

{{- if and (not $disable_lookups) (not (lookup "v1" "Secret" .Release.Namespace $secret_name)) }}

{{- $err := printf "tls.routerCertAndPKsecretName: secret '%s' not found in namespace '%s'" $secret_name .Release.Namespace }}

{{- fail $err }}

{{- end }}

tlsSecretName: {{ $secret_name }}

{{- end }}

# secretName: {{ .Release.Name }}-cluster-secret

# imagePullPolicy : {{ .Values.image.pullPolicy }}

# baseServerId: {{ required "baseServerId is required" .Values.baseServerId }}

# version: {{ .Values.serverVersion | default .Chart.AppVersion }}

# {{- if ((.Values).edition) }}

# edition: {{ .Values.edition | quote }}

# {{- end }}

# serviceAccountName: {{ .Release.Name }}-sa

#

#{{- if not $use_self_signed }}

# {{- $default_secret_name := printf "%s-ca" $cluster_name }}

# {{- $secret_name := default $default_secret_name ((.Values.tls).caSecretName) }}

# {{- if and (not $disable_lookups) (not (lookup "v1" "Secret" .Release.Namespace $secret_name)) }}

# {{- $err := printf "tls.caSecretName: secret '%s' not found in namespace '%s'" $secret_name .Release.Namespace }}

# {{- fail $err }}

# {{- end }}

# tlsCASecretName: {{ $secret_name }}

#

# {{- $default_secret_name := printf "%s-tls" $cluster_name }}

# {{- $secret_name := default $default_secret_name ((.Values.tls).serverCertAndPKsecretName) }}

# {{- if and (not $disable_lookups) (not (lookup "v1" "Secret" .Release.Namespace $secret_name)) }}

# {{- $err := printf "tls.serverCertAndPKsecretName: secret '%s' not found in namespace '%s'" $secret_name .Release.Namespace }}

# {{- fail $err }}

# {{- end }}

# tlsSecretName: {{ $secret_name }}

#{{- end }}

secretName: {{ .Release.Name }}-cluster-secret

imagePullPolicy : {{ .Values.image.pullPolicy }}

baseServerId: {{ required "baseServerId is required" .Values.baseServerId | toString | atoi }}

version: {{ .Values.serverVersion | default .Chart.AppVersion }}

{{- if ((.Values).edition) }}

edition: {{ .Values.edition | quote }}

{{- end }}

serviceAccountName: {{ .Release.Name }}-sa

#imageRepository

{{- if and (not (((.Values).image).registry)) (not (((.Values).image).repository)) }}

## Neither registry nor repository provided - OK

{{- else if (((.Values).image).registry) }}

## registry provided

{{- if (((.Values).image).repository) }}

## repository provided

{{- end }}

imageRepository: {{ trimSuffix "/" .Values.image.registry }}{{- if (((.Values).image).repository) }}/{{ trimSuffix "/" .Values.image.repository | trimPrefix "/" }}{{ end }}

{{- else if (((.Values).image).repository) }}

{{- fail "image.repository provided but image.registry is not or is empty" }}

{{- end }}

# imagePullSecrets

{{- if ((((.Values).image).pullSecrets).enabled) }}

imagePullSecrets:

{{- $secret_name := .Values.image.pullSecrets.secretName }}

{{- if not $secret_name }}

{{- fail "image.pullSecrets.secretName is required when pull secrets are enabled" }}

{{- end }}

{{- if and (not $disable_lookups) (not (lookup "v1" "Secret" .Release.Namespace $secret_name)) }}

{{- $err := printf "image.pullSecrets.secretName: secret '%s' not found in namespace '%s'" $secret_name .Release.Namespace }}

{{- fail $err }}

{{- end }}

- name: {{ $secret_name }}

{{- end }}

{{- if ((.Values).serverConfig) }}

{{- if (((.Values).serverConfig).mycnf) }}

mycnf: |

{{- if not (hasPrefix "[mysqld]" (((.Values).serverConfig).mycnf) ) }}

[mysqld]

{{- end }}

{{ (((.Values).serverConfig).mycnf) | indent 4 }}

{{- end }}

{{- end }}

{{- if .Values.datadirVolumeClaimTemplate }}

{{- with .Values.datadirVolumeClaimTemplate }}

datadirVolumeClaimTemplate:

{{- if .storageClassName }}

storageClassName: {{ .storageClassName | quote }}

{{- end}}

{{- if .accessModes }}

accessModes: [ "{{ .accessModes }}" ]

{{- end }}

{{- if .resources.requests.storage }}

resources:

requests:

storage: "{{ .resources.requests.storage }}"

{{- end }}

{{- end }}

{{- end }}

{{- if (or (((.Values).keyring).file) (((.Values).keyring).encryptedFile) (((.Values).keyring).oci) ) }}

keyring:

{{- $keyringAlreadySpecified := "" }}

{{- if (((.Values).keyring).file) }}

{{- if $keyringAlreadySpecified }}

{{- $err := printf "Keyring '%s' already specified" $keyringAlreadySpecified }}

{{- fail $err }}

{{- end }}

{{- $keyringAlreadySpecified = "file" }}

{{- with .Values.keyring.file }}

file:

fileName: {{ required "keyring.file.fileName is required" .fileName | quote }}

{{- if .readOnly }}

readOnly: {{ .readOnly }}

{{- end }}

storage: {{ toYaml .storage | nindent 8 }}

{{- end }}

{{- end }}

{{- if (((.Values).keyring).encryptedFile) }}

{{- if $keyringAlreadySpecified }}

{{- $err := printf "Keyring '%s' already specified" $keyringAlreadySpecified | quote }}

{{- fail $err }}

{{- end }}

{{- $keyringAlreadySpecified = "encryptedFile" }}

{{- with .Values.keyring.encryptedFile }}

encryptedFile:

fileName: {{ required "keyring.encryptedFile.fileName is required" .fileName | quote }}

{{- if .readOnly }}

readOnly: {{ .readOnly }}

{{- end }}

password: {{ required "keyring.encryptedFile.password is required" .password | quote }}

storage: {{ toYaml .storage | nindent 8 }}

{{- end }}

{{- end }}

{{- if (((.Values).keyring).oci) }}

{{- if $keyringAlreadySpecified }}

{{- $err := printf "Keyring '%s' already specified" $keyringAlreadySpecified }}

{{- fail $err }}

{{- end }}

{{- $keyringAlreadySpecified = "oci" }}

{{- with .Values.keyring.oci }}

oci:

user: {{ required "keyring.oci.user is required" .user | quote}}

keySecret: {{ required "keyring.oci.keySecret is required" .keySecret | quote}}

keyFingerprint: {{ required "keyring.oci.keyFingerprint is required" .keyFingerprint | quote }}

tenancy: {{ required "keyring.oci.tenancy is required" .tenancy | quote}}

{{- if .compartment}}

compartment: {{ .compartment | quote }}

{{- end }}

{{- if .virtualVault}}

virtualVault: {{ .virtualVault | quote}}

{{- end }}

{{- if .masterKey}}

masterKey: {{ .masterKey | quote}}

{{- end }}

{{- if .caCertificate}}

caCertificate: {{ .caCertificate | quote}}

{{- end }}

{{- if .endpoints}}

endpoints:

{{- if ((.endpoints).encryption) }}

encryption: {{ ((.endpoints).encryption) | quote}}

{{- end }}

{{- if ((.endpoints).management) }}

management: {{ ((.endpoints).management) | quote}}

{{- end }}

{{- if ((.endpoints).vaults) }}

vaults: {{ ((.endpoints).vaults) | quote}}

{{- end }}

{{- if ((.endpoints).secrets) }}

secrets: {{ ((.endpoints).secrets) | quote}}

{{- end }}

{{- end }}

{{- end }}

{{- end }}

{{- end }}

{{- if .Values.initDB }}

{{- if and (and .Values.initDB.dump .Values.initDB.dump.name) (and .Values.initDB.clone .Values.initDB.donorUrl) }}

{{- fail "Dump and Clone are mutually exclusive" }}

{{- end }}

{{- if (((.Values).initDB).clone) }}

{{- with .Values.initDB.clone }}

initDB:

clone:

donorUrl: {{ required "initDB.clone.donorUrl is required" .donorUrl }}

rootUser: {{ .rootUser | default "root" }}

secretKeyRef:

name: {{ required "initDB.clone.credentials is required" .credentials }}

{{- end }}

{{- end }}

{{- if (((.Values).initDB).dump) }}

{{- with .Values.initDB.dump }}

{{- if and .name (or .ociObjectStorage .s3 .azure .persistentVolumeClaim .options) }}

initDB:

dump:

{{- if .name }}

name: {{ .name }}

{{- end }}

{{- if .path }}

path: {{ .path }}

{{- end }}

{{- if .options }}

options: {{ toYaml .options | nindent 8 }}

{{- end }}

storage:

{{- if .ociObjectStorage }}

ociObjectStorage:

prefix: {{ required "initDB.dump.ociObjectStorage.prefix is required" .ociObjectStorage.prefix }}

bucketName: {{ required "initDB.dump.ociObjectStorage.bucketName is required" .ociObjectStorage.bucketName }}

credentials: {{ required "initDB.dump.ociObjectStorage.credentials is required" .ociObjectStorage.credentials }}

{{- end }}

{{- if .s3 }}

s3:

prefix: {{ required "initDB.dump.s3.prefix is required" .s3.prefix }}

bucketName: {{ required "initDB.dump.s3.bucketName is required" .s3.bucketName }}

config: {{ required "initDB.dump.s3.config is required" .s3.config }}

{{- if .s3.profile }}

profile: {{ .s3.profile }}

{{- end }}

{{- if .s3.endpoint }}

endpoint: {{ .s3.endpoint }}

{{- end }}

{{- end }}

{{- if .azure }}

azure:

prefix: {{ required "initDB.dump.azure.prefix is required" .azure.prefix }}

containerName: {{ required "initDB.dump.azure.containerName is required" .azure.containerName }}

config: {{ required "initDB.dump.azure.config is required" .azure.config }}

{{- end }}

{{- if .persistentVolumeClaim }}

persistentVolumeClaim: {{ toYaml .persistentVolumeClaim | nindent 10}}

{{- end }}

{{- end }}

{{- end }}

{{- end }}

{{- end }}

{{- if .Values.backupProfiles }}

backupProfiles:

{{- $isDumpInstance := false }}

{{- $isSnapshot := false }}

{{- range $_, $profile := .Values.backupProfiles }}

{{- if $profile.name }}

- name: {{ $profile.name -}}

{{- if hasKey $profile "podAnnotations" }}

podAnnotations: {{ toYaml $profile.podAnnotations | nindent 6 }}

{{- end }}

{{- if hasKey $profile "podLabels" }}

podLabels: {{ toYaml $profile.podLabels | nindent 6 }}

{{- end }}

{{- $isDumpInstance = hasKey $profile "dumpInstance" }}

{{- $isSnapshot = hasKey $profile "snapshot" }}

{{- if or $isDumpInstance $isSnapshot }}

{{- $backupProfile := ternary $profile.dumpInstance $profile.snapshot $isDumpInstance }}

{{- if $isDumpInstance }}

dumpInstance:

{{- else if $isSnapshot }}

snapshot:

{{- else }}

{{- fail "Impossible backup type" }}

{{ end }}

{{- if not (hasKey $backupProfile "storage") }}

{{- fail "backup profile $profile.name has no storage section" }}

{{- else if hasKey $backupProfile.storage "ociObjectStorage" }}

storage:

ociObjectStorage:

{{- if $backupProfile.storage.ociObjectStorage.prefix }}

prefix: {{ $backupProfile.storage.ociObjectStorage.prefix }}

{{- end }}

bucketName: {{ required "bucketName is required" $backupProfile.storage.ociObjectStorage.bucketName }}

credentials: {{ required "credentials is required" $backupProfile.storage.ociObjectStorage.credentials }}

{{- else if hasKey $backupProfile.storage "s3" }}

storage:

s3:

{{- if $backupProfile.storage.s3.prefix }}

prefix: {{ $backupProfile.storage.s3.prefix }}

{{- end }}

bucketName: {{ required "bucketName is required" $backupProfile.storage.s3.bucketName }}

config: {{ required "config is required" $backupProfile.storage.s3.config }}

{{- if $backupProfile.storage.s3.profile }}

profile: {{ $backupProfile.storage.s3.profile }}

{{- end }}

{{- if $backupProfile.storage.s3.endpoint }}

endpoint: {{ $backupProfile.storage.s3.endpoint }}

{{- end }}

{{- else if hasKey $backupProfile.storage "azure" }}

storage:

azure:

{{- if $backupProfile.storage.azure.prefix }}

prefix: {{ $backupProfile.storage.azure.prefix }}

{{- end }}

containerName: {{ required "containerName is required" $backupProfile.storage.azure.containerName }}

config: {{ required "config is required" $backupProfile.storage.azure.config }}

{{- else if hasKey $backupProfile.storage "persistentVolumeClaim" }}

storage:

persistentVolumeClaim: {{ toYaml $backupProfile.storage.persistentVolumeClaim | nindent 12}}

{{- else -}}

{{- fail "dumpInstance backup profile $profile.name has empty storage section - neither ociObjectStorage nor persistentVolumeClaim defined" }}

{{- end -}}

{{- else }}

{{- fail "One of dumpInstance or snapshot must be methods of a backupProfile" }}

{{- end }}

{{- end }}

{{- end }}

{{- end }}

{{- if .Values.backupSchedules }}

backupSchedules:

{{- $isDumpInstance := false }}

{{- $isSnapshot := false }}

{{- range $_, $schedule := .Values.backupSchedules }}

- name: {{ $schedule.name }}

schedule: {{ quote $schedule.schedule }}

deleteBackupData: {{ $schedule.deleteBackupData }}

enabled: {{ $schedule.enabled }}

{{- if hasKey $schedule "backupProfileName" }}

backupProfileName: {{ $schedule.backupProfileName }}

{{- else if hasKey $schedule "backupProfile" }}

{{- $isDumpInstance = hasKey $schedule.backupProfile "dumpInstance" }}

{{- $isSnapshot = hasKey $schedule.backupProfile "snapshot" }}

{{- if or $isDumpInstance $isSnapshot }}

{{- $backupProfile := ternary $schedule.backupProfile.dumpInstance $schedule.backupProfile.snapshot $isDumpInstance }}

backupProfile:

{{- if hasKey $schedule.backupProfile "podAnnotations" }}

podAnnotations: {{ toYaml $schedule.backupProfile.podAnnotations | nindent 8 }}

{{- end }}

{{- if hasKey $schedule.backupProfile "podLabels" }}

podLabels: {{ toYaml $schedule.backupProfile.podLabels | nindent 8 }}

{{- end }}

{{- if $isDumpInstance }}

dumpInstance:

{{- else if $isSnapshot }}

snapshot:

{{- end }}

{{- if not (hasKey $backupProfile "storage") }}

{{- fail "schedule backup profile $schedule.name has no storage section" }}

{{- else if hasKey $backupProfile.storage "ociObjectStorage" }}

storage:

ociObjectStorage:

{{- if $backupProfile.storage.ociObjectStorage.prefix }}

prefix: {{ $backupProfile.storage.ociObjectStorage.prefix }}

{{- end }}

bucketName: {{ required "bucketName is required" $backupProfile.storage.ociObjectStorage.bucketName }}

credentials: {{ required "credentials is required" $backupProfile.storage.ociObjectStorage.credentials }}

{{- else if hasKey $backupProfile.storage "s3" }}

storage:

s3:

{{- if $backupProfile.storage.s3.prefix }}

prefix: {{ $backupProfile.storage.s3.prefix }}

{{- end }}

bucketName: {{ required "bucketName is required" $backupProfile.storage.s3.bucketName }}

config: {{ required "config is required" $backupProfile.storage.s3.config }}

{{- if $backupProfile.storage.s3.profile }}

profile: {{ $backupProfile.storage.s3.profile }}

{{- end }}

{{- if $backupProfile.storage.s3.endpoint }}

endpoint: {{ $backupProfile.storage.s3.endpoint }}

{{- end }}

{{- else if hasKey $backupProfile.storage "azure" }}

storage:

azure:

{{- if $backupProfile.storage.azure.prefix }}

prefix: {{ $backupProfile.storage.azure.prefix }}

{{- end }}

containerName: {{ required "containerName is required" $backupProfile.storage.azure.containerName }}

config: {{ required "config is required" $backupProfile.storage.azure.config }}

{{- else if hasKey $backupProfile.storage "persistentVolumeClaim" }}

storage:

persistentVolumeClaim: {{ toYaml $backupProfile.storage.persistentVolumeClaim | nindent 12}}

{{- else -}}

{{- fail "dumpInstance backup profile $profile.name has empty storage section - neither ociObjectStorage nor persistentVolumeClaim defined" }}

{{- end -}}

{{- else }}

{{- fail "Impossible backup type for a schedule" }}

{{- end }}

{{- else }}

{{- fail "Neither backupProfileName nor backupProfile provided for a schedule" }}

{{- end }}

{{- end }}

{{- end }}

{{ if ((.Values).podSpec) }}

podSpec: {{ toYaml ((.Values).podSpec) | nindent 4 }}

{{ end }}

{{- if ((.Values).podLabels) }}

podLabels: {{ toYaml ((.Values).podLabels) | nindent 4 }}

{{- end }}

{{- if ((.Values).podAnnotations) }}

podAnnotations: {{ toYaml ((.Values).podAnnotations) | nindent 4 }}

{{- end }}

Go back to values.yaml and update the image section at the top:

image:

registry: harbor.tianxiang.love:30443

repository: mysql-innodb-cluster

pullPolicy: IfNotPresent

tag: "8.0.43"

pullSecrets:

enabled: false

secretName:

2. Check that the services are running

1. Check the pods

[root@k8s-master1 mysql-innodbcluster]# kubectl get pod -n middleware

NAME READY STATUS RESTARTS AGE

mysql-backup-minio-84bb5f45dc-5jz5n 1/1 Running 0 3h20m

mysql-cluster-0 2/2 Running 0 87m

mysql-cluster-1 2/2 Running 0 106m

mysql-cluster-2 2/2 Running 0 93m

mysql-cluster-router-5dc5c46c54-sqkx7 1/1 Running 0 103m

mysql-cluster-router-5dc5c46c54-vf5zt 1/1 Running 0 102m

mysql-operator-5f8cd46f56-8nd2d 1/1 Running 0 173m

2. Check the PVCs

[root@k8s-master1 mysql-innodbcluster]# kubectl get pvc -n middleware

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

datadir-mysql-cluster-0 Bound pvc-f8993ec5-d6d1-4185-bf4e-2edd99fe72ae 100Gi RWO openebs-hostpath 107m

datadir-mysql-cluster-1 Bound pvc-0f15b69a-c281-41fc-89cc-9aa3fb423925 100Gi RWO openebs-hostpath 107m

datadir-mysql-cluster-2 Bound pvc-f2d90e8b-300b-4c65-8368-0aa84c879ae2 100Gi RWO openebs-hostpath 107m

mysql-backup-minio-pvc Bound pvc-9353d0b2-a742-4095-8c7a-0034b7929513 50Gi RWO openebs-hostpath 3h21m

3. Check the logs

[root@k8s-master1 mysql-innodbcluster]# kubectl -n middleware logs --tail=100 -f mysql-cluster-router-5dc5c46c54-vf5zt

2025-08-03 09:32:14 metadata_cache INFO [7f0dc0601640] Metadata for cluster 'mysql_cluster' has 3 member(s), single-primary:

2025-08-03 09:32:14 metadata_cache INFO [7f0dc0601640] mysql-cluster-1.mysql-cluster-instances.middleware.svc.cluster.local:3306 / 33060 - mode=RW

2025-08-03 09:32:14 metadata_cache INFO [7f0dc0601640] mysql-cluster-2.mysql-cluster-instances.middleware.svc.cluster.local:3306 / 33060 - mode=RO

2025-08-03 09:32:14 metadata_cache INFO [7f0dc0601640] mysql-cluster-0.mysql-cluster-instances.middleware.svc.cluster.local:3306 / 33060 - mode=RO

2025-08-03 09:32:14 metadata_cache INFO [7f0dc0601640] Enabling GR notices for cluster 'mysql_cluster' changes on node mysql-cluster-0.mysql-cluster-instances.middleware.svc.cluster.local:33060
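The router log lists every member together with its routing mode, which is a quick way to confirm that exactly one instance is writable. A small Python sketch that extracts this from log lines shaped like the ones above; the regular expression is an assumption based on this sample output only, and `member_modes` is an illustrative name.

```python
import re

# Matches "<host>:<port> / <xport> - mode=<RW|RO>" as seen in the sample log.
MEMBER = re.compile(r"(\S+):(\d+) / \d+ - mode=(RW|RO)")

LOG = """\
2025-08-03 09:32:14 metadata_cache INFO [7f0dc0601640] mysql-cluster-1.mysql-cluster-instances.middleware.svc.cluster.local:3306 / 33060 - mode=RW
2025-08-03 09:32:14 metadata_cache INFO [7f0dc0601640] mysql-cluster-2.mysql-cluster-instances.middleware.svc.cluster.local:3306 / 33060 - mode=RO
2025-08-03 09:32:14 metadata_cache INFO [7f0dc0601640] mysql-cluster-0.mysql-cluster-instances.middleware.svc.cluster.local:3306 / 33060 - mode=RO
"""


def member_modes(log_text: str) -> dict[str, str]:
    """Map each member's pod name to its routing mode."""
    modes = {}
    for host, _port, mode in MEMBER.findall(log_text):
        modes[host.split(".")[0]] = mode
    return modes


modes = member_modes(LOG)
writers = [name for name, mode in modes.items() if mode == "RW"]
print(modes, "single primary:", len(writers) == 1)
```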

4. Check the cluster status

[root@k8s-master1 mysql-innodbcluster]# kubectl -n middleware exec -it mysql-cluster-0 -c mysql -- mysqlsh -uri root:123456@127.0.0.1 -- cluster status

Cannot set LC_ALL to locale en_US.UTF-8: No such file or directory

WARNING: Using a password on the command line interface can be insecure.

{

"clusterName": "mysql_cluster",

"defaultReplicaSet": {

"name": "default",

"primary": "mysql-cluster-0.mysql-cluster-instances.middleware.svc.cluster.local:3306",

"ssl": "REQUIRED",

"status": "OK",

"statusText": "Cluster is ONLINE and can tolerate up to ONE failure.",

"topology": {

"mysql-cluster-0.mysql-cluster-instances.middleware.svc.cluster.local:3306": {

"address": "mysql-cluster-0.mysql-cluster-instances.middleware.svc.cluster.local:3306",

"memberRole": "PRIMARY",

"mode": "R/W",

"readReplicas": {},

"replicationLag": "applier_queue_applied",

"role": "HA",

"status": "ONLINE",

"version": "8.0.43"

},

"mysql-cluster-1.mysql-cluster-instances.middleware.svc.cluster.local:3306": {

"address": "mysql-cluster-1.mysql-cluster-instances.middleware.svc.cluster.local:3306",

"memberRole": "SECONDARY",

"mode": "R/O",

"readReplicas": {},

"replicationLag": "applier_queue_applied",

"role": "HA",

"status": "ONLINE",

"version": "8.0.43"

},

"mysql-cluster-2.mysql-cluster-instances.middleware.svc.cluster.local:3306": {

"address": "mysql-cluster-2.mysql-cluster-instances.middleware.svc.cluster.local:3306",

"memberRole": "SECONDARY",

"mode": "R/O",

"readReplicas": {},

"replicationLag": "applier_queue_applied",

"role": "HA",

"status": "ONLINE",

"version": "8.0.43"

}

},

"topologyMode": "Single-Primary"

},

"groupInformationSourceMember": "mysql-cluster-0.mysql-cluster-instances.middleware.svc.cluster.local:3306"

}

[root@k8s-master1 mysql-innodbcluster]# kubectl -n middleware exec -it mysql-cluster-0 -c mysql -- mysqlsh -uri root:123456@127.0.0.1

MySQL 127.0.0.1:33060+ ssl SQL > SELECT * FROM performance_schema.replication_group_members;

+---------------------------+--------------------------------------+----------------------------------------------------------------------+-------------+--------------+-------------+----------------+----------------------------+

| CHANNEL_NAME | MEMBER_ID | MEMBER_HOST | MEMBER_PORT | MEMBER_STATE | MEMBER_ROLE | MEMBER_VERSION | MEMBER_COMMUNICATION_STACK |

+---------------------------+--------------------------------------+----------------------------------------------------------------------+-------------+--------------+-------------+----------------+----------------------------+

| group_replication_applier | 5c3f8680-7063-11f0-81d5-ea8021331483 | mysql-cluster-0.mysql-cluster-instances.middleware.svc.cluster.local | 3306 | ONLINE | PRIMARY | 8.0.43 | MySQL |

| group_replication_applier | 5c57f2bc-7063-11f0-823f-7a34affb5934 | mysql-cluster-1.mysql-cluster-instances.middleware.svc.cluster.local | 3306 | ONLINE | SECONDARY | 8.0.43 | MySQL |

| group_replication_applier | 5f38f9c1-7063-11f0-819d-9e4a184cd4f9 | mysql-cluster-2.mysql-cluster-instances.middleware.svc.cluster.local | 3306 | ONLINE | SECONDARY | 8.0.43 | MySQL |

+---------------------------+--------------------------------------+----------------------------------------------------------------------+-------------+--------------+-------------+----------------+----------------------------+

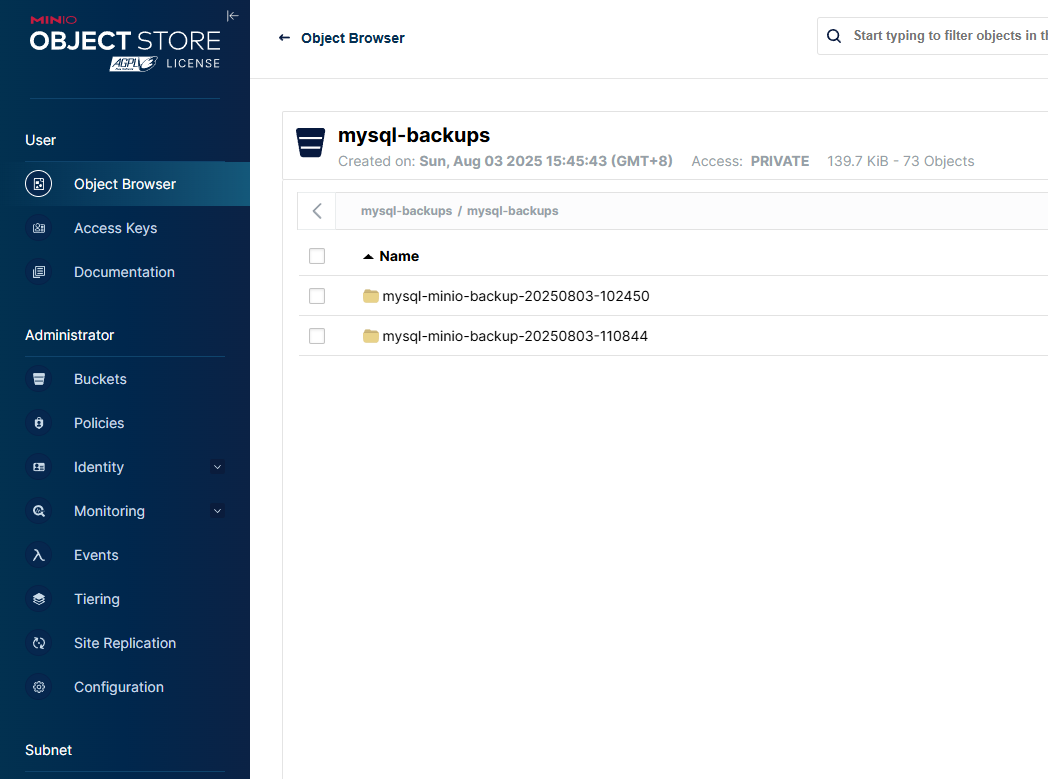

3 rows in set (0.0010 sec)

3. Configure automatic MySQL backups

MySQL Operator does not support a MySQLBackupSchedule resource, so automatic backups have to rely on a Linux cron job.
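A cron-driven job needs a fresh resource name for every run, since Kubernetes object names must be unique within a namespace. The Python sketch below derives such a name from a timestamp; the `mysql-minio-backup` prefix and the naming scheme are assumptions for illustration, not something the operator mandates.

```python
from datetime import datetime, timezone


def backup_name(prefix: str, when: datetime) -> str:
    """Build a unique resource name for one backup run."""
    # Only lowercase letters, digits and hyphens are produced, so the
    # result stays a valid Kubernetes object name for a lowercase prefix.
    return f"{prefix}-{when.strftime('%Y%m%d-%H%M%S')}"


# Example: the name a job started at this moment (UTC) would use.
print(backup_name("mysql-minio-backup", datetime.now(timezone.utc)))
```

Two runs within the same second would still collide, so a job scheduled more often than that would need an extra suffix.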

1. Deploy MinIO storage

[root@k8s-master1 mysql-cluster]# cat minio/deployment.yaml

apiVersion: v1

kind: PersistentVolumeClaim

metadata:

name: mysql-backup-minio-pvc

namespace: middleware

spec:

storageClassName: "openebs-hostpath"

accessModes:

- ReadWriteOnce

resources:

requests:

storage: 50Gi

---

apiVersion: v1

kind: Service

metadata:

name: mysql-backup-minio

namespace: middleware

spec:

type: NodePort

ports:

- name: 9090-tcp

protocol: TCP

port: 9090

targetPort: 9090

nodePort: 32307

- name: 9000-tcp

protocol: TCP

port: 9000

targetPort: 9000

nodePort: 32308

selector:

app: minio

---

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: mysql-backup-minio
  namespace: middleware
  annotations:
    nginx.ingress.kubernetes.io/proxy-body-size: "0"
  labels:
    name: mysql-backup-minio
spec:
  ingressClassName: nginx
  rules:
    - host: mysql-backup.tianxiang.love
      http:
        paths:
          - pathType: Prefix
            path: "/"
            backend:
              service:
                name: mysql-backup-minio
                port:
                  number: 9090

---

apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    name: minio
  name: mysql-backup-minio
  namespace: middleware
spec:
  replicas: 1
  selector:
    matchLabels:
      name: minio
  template:
    metadata:
      labels:
        app: minio
        name: minio
    spec:
      containers:
        - name: minio
          image: swr.cn-north-4.myhuaweicloud.com/ddn-k8s/quay.io/minio/minio:RELEASE.2024-05-28T17-19-04Z
          imagePullPolicy: IfNotPresent
          command:
            - "/bin/bash"
            - "-c"
            - |
              minio server /data --console-address :9090 --address :9000
          ports:
            - containerPort: 9090
              name: console-address
            - containerPort: 9000
              name: address
          env:
            - name: MINIO_ACCESS_KEY
              value: "admin"
            - name: MINIO_SECRET_KEY
              value: "admin@123456"
          readinessProbe:
            failureThreshold: 3
            httpGet:
              path: /minio/health/ready
              port: 9000
              scheme: HTTP
            initialDelaySeconds: 10
            periodSeconds: 10
            successThreshold: 1
            timeoutSeconds: 5
          volumeMounts:
            - mountPath: /data
              name: data
      volumes:
        - name: data
          persistentVolumeClaim:
            claimName: mysql-backup-minio-pvc

Once MinIO is running, create a bucket named mysql-backups, then create an access key and a secret key.
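The bucket can also be created from the command line instead of the web console. The following is a minimal sketch, assuming the MinIO client `mc` is installed and that MinIO is reachable through the NodePort 32308 defined in the Service. The function name, the alias name `backupminio`, and the `<node-ip>` placeholder are illustrative and not part of the original setup. The access key and secret key still have to be created separately, for example in the console.

```bash
#!/bin/bash
# Sketch: create the backup bucket with the MinIO client (mc).
# Assumptions: mc is installed; the alias name is arbitrary.

create_backup_bucket() {
  local alias_name="$1" endpoint="$2" user="$3" password="$4" bucket="$5"
  # Register the MinIO endpoint under a local alias.
  mc alias set "${alias_name}" "${endpoint}" "${user}" "${password}" || return 1
  # --ignore-existing makes the call safe to repeat.
  mc mb --ignore-existing "${alias_name}/${bucket}"
}

# Example (replace <node-ip> with a real node address):
# create_backup_bucket backupminio http://<node-ip>:32308 admin 'admin@123456' mysql-backups
```

Wrapping the two calls in a function keeps the script harmless when sourced; nothing runs until the function is called with real values.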

2. Create the secret

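Two files are needed because the official documentation requires this layout: a credentials file and a config file, in the same format the AWS CLI uses.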
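As a sketch of how the two files could be generated rather than typed by hand, the helper below writes them from arguments. The function name `write_s3_secret_files` and its parameters are hypothetical and exist only for this illustration; the file contents follow the layout shown in the rest of this step.

```bash
#!/bin/bash
# Sketch: write the two files that the secret is built from.
# The function name and arguments are illustrative, not part of any tool.

write_s3_secret_files() {
  local dir="$1" access_key="$2" secret_key="$3" region="$4"
  mkdir -p "${dir}" || return 1
  # credentials: same layout as ~/.aws/credentials
  printf '[default]\naws_access_key_id = %s\naws_secret_access_key = %s\n' \
    "${access_key}" "${secret_key}" > "${dir}/credentials"
  # config: same layout as ~/.aws/config
  printf '[default]\nregion = %s\n' "${region}" > "${dir}/config"
  # The files hold secrets, so restrict them to the owner.
  chmod 600 "${dir}/credentials" "${dir}/config"
}

# Example:
# write_s3_secret_files . "<ACCESS_KEY>" "<SECRET_KEY>" us-east-1
```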

# Fill in the access key and secret key

[root@k8s-master1 mysql-backup]# cat credentials

[default]

aws_access_key_id = hLTEOoGxsFzhJk78bFtQ

aws_secret_access_key = LYQz29W3w5LYwO2mSqoaMkDOgNNbYIuJGCFJnaz0

# Set a region of your choice

[root@k8s-master1 mysql-backup]# cat config

[default]

region = us-east-1

Create the secret:

[root@k8s-master1 mysql-backup]# kubectl create secret generic mysql-backup-minio-credentials -n middleware \
  --from-file=credentials=credentials \
  --from-file=config=config

3. Create a backup

[root@k8s-master1 mysql-backup]# cat minio-backup.yaml

apiVersion: mysql.oracle.com/v2
kind: MySQLBackup
metadata:
  name: mysql-minio-backup
  namespace: middleware
spec:
  clusterName: mysql-cluster
  backupProfile:
    name: minio-backup-profile
    dumpInstance:
      storage:
        s3:
          bucketName: mysql-backups # MinIO bucket name
          config: mysql-backup-minio-credentials # Secret containing the access key and secret key
          endpoint: http://mysql-backup-minio.middleware:9000 # MinIO service address
          prefix: /mysql-backups/ # path prefix for the backup files
          profile: default # use the default profile
          forcePathStyle: true # required for MinIO

Apply the manifest with kubectl apply -f minio-backup.yaml, then check the backup status:

[root@k8s-master1 mysql-backup]# kubectl get mysqlbackups.mysql.oracle.com -n middleware

NAME CLUSTER STATUS OUTPUT AGE

mysql-minio-backup mysql-cluster Completed mysql-minio-backup-20250803-110844 2m50s
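Instead of re-running `kubectl get` by hand, a script can poll the backup until it finishes. The following is a minimal sketch with two labeled assumptions: that the STATUS column shown above is backed by the `.status.status` field, and that a failed backup reports the value `Error`. The function name and its defaults are illustrative.

```bash
#!/bin/bash
# Sketch: poll a MySQLBackup until it reports Completed or Error.
# Assumption: the STATUS column is backed by .status.status, and
# "Error" is the value reported on failure.

wait_for_backup() {
  local name="$1" namespace="$2" attempts="${3:-60}" interval="${4:-10}"
  local status i
  for ((i = 0; i < attempts; i++)); do
    status=$(kubectl get mysqlbackups.mysql.oracle.com "${name}" \
      -n "${namespace}" -o jsonpath='{.status.status}' 2>/dev/null)
    case "${status}" in
      Completed) echo "[INFO] ${name}: Completed"; return 0 ;;
      Error)     echo "[ERROR] ${name}: Error" >&2; return 1 ;;
    esac
    sleep "${interval}"
  done
  echo "[ERROR] ${name}: timed out (last status: ${status:-unknown})" >&2
  return 2
}

# Example:
# wait_for_backup mysql-minio-backup middleware
```

The distinct return codes (0 completed, 1 failed, 2 timed out) let a calling script decide whether to alert or retry.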

4. Scheduled job

[root@k8s-master1 mysql-backup]# cat mysql-backup.sh

#!/bin/bash

NAMESPACE="middleware"
TIMESTAMP=$(date +%Y%m%d-%H%M%S)
BACKUP_NAME="mysql-minio-backup-${TIMESTAMP}"

echo "[INFO] Starting backup: ${BACKUP_NAME}"

# Create a uniquely named MySQLBackup object for this run
kubectl apply -f - <<EOF
apiVersion: mysql.oracle.com/v2
kind: MySQLBackup
metadata:
  name: ${BACKUP_NAME}
  namespace: ${NAMESPACE}
spec:
  clusterName: mysql-cluster
  deleteBackupData: false
  incremental: false
  addTimestampToBackupDirectory: true
  backupProfile:
    name: minio-backup-profile
    dumpInstance:
      storage:
        s3:
          bucketName: mysql-backups
          endpoint: http://mysql-backup-minio.middleware:9000
          prefix: /mysql-backups/
          profile: default
          config: mysql-backup-minio-credentials
EOF

echo "[INFO] Cleaning old backups (7 days ago and earlier)..."

# Delete MySQLBackup objects older than 7 days.
# creationTimestamp is in UTC (for example 2025-08-03T11:08:44Z), so the cutoff uses the same format.
CUTOFF=$(date -u -d "7 days ago" +%Y-%m-%dT%H:%M:%SZ)

kubectl get mysqlbackups.mysql.oracle.com -n "${NAMESPACE}" -o json \
  | jq -r --arg cutoff "${CUTOFF}" '.items[] | select(.metadata.creationTimestamp < $cutoff) | .metadata.name' \
  | xargs -r -I {} kubectl delete mysqlbackup.mysql.oracle.com -n "${NAMESPACE}" {}

[root@k8s-master1 mysql-backup]# chmod +x /home/tianxiang/mysql-cluster/mysql-backup/mysql-backup.sh

[root@k8s-master1 mysql-backup]# crontab -l

0 2 * * * /home/tianxiang/mysql-cluster/mysql-backup/mysql-backup.sh >> /var/log/mysql-backup.log 2>&1

To delete old backup data from MinIO itself, refer to the mc client commands and write a script that removes objects older than 7 days.
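A minimal sketch of such a cleanup script, assuming `mc` is installed and an alias for the MinIO endpoint has already been registered with `mc alias set`. The function name and the alias `backupminio` are illustrative. The `--older-than` flag of `mc rm` does the age filtering, so no date arithmetic is needed in the script.

```bash
#!/bin/bash
# Sketch: delete backup objects older than N days from MinIO with mc.
# Assumptions: mc is installed and the alias was created with `mc alias set`.

prune_minio_backups() {
  local alias_name="$1" bucket="$2" days="${3:-7}"
  # Refuse anything that is not a positive integer, so a typo cannot
  # turn into an unbounded delete.
  case "${days}" in
    ''|*[!0-9]*|0) echo "[ERROR] invalid day count: ${days}" >&2; return 1 ;;
  esac
  mc rm --recursive --force --older-than "${days}d" "${alias_name}/${bucket}/"
}

# Example:
# prune_minio_backups backupminio mysql-backups 7
```

The function could be called at the end of the backup script or from its own crontab entry.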

4. Fix the sidecar container error

The sidecar container reports that the cluster's service account has no permission to access the customresourcedefinitions.apiextensions.k8s.io resource.
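The missing permission can also be checked directly with `kubectl auth can-i`, which asks the API server whether a given identity may perform an action. The sketch below wraps that call; the function name is illustrative, and impersonating the service account with `--as` assumes the caller has cluster-admin style rights.

```bash
#!/bin/bash
# Sketch: ask the API server whether a service account may list CRDs.

can_list_crds() {
  local namespace="$1" service_account="$2"
  # kubectl prints "yes" or "no"; impersonation requires admin rights.
  kubectl auth can-i list customresourcedefinitions.apiextensions.k8s.io \
    --as="system:serviceaccount:${namespace}:${service_account}"
}

# Example:
# can_list_crds middleware mysql-cluster-sa
```

Running the check before and after changing the RBAC rules gives a quick confirmation without waiting for the sidecar to retry.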

[root@k8s-master1 mysql-innodbcluster]# kubectl -n middleware logs --tail=100 -f mysql-cluster-0 sidecar

[2025-08-03 12:14:27,611] kopf._cogs.clients.w [ERROR ] Request attempt #1/9 failed; will retry: GET https://10.96.0.1:443/apis/apiextensions.k8s.io/v1/customresourcedefinitions -> APIForbiddenError('customresourcedefinitions.apiextensions.k8s.io is forbidden: User "system:serviceaccount:middleware:mysql-cluster-sa" cannot list resource "customresourcedefinitions" in API group "apiextensions.k8s.io" at the cluster scope', {'kind': 'Status', 'apiVersion': 'v1', 'metadata': {}, 'status': 'Failure', 'message': 'customresourcedefinitions.apiextensions.k8s.io is forbidden: User "system:serviceaccount:middleware:mysql-cluster-sa" cannot list resource "customresourcedefinitions" in API group "apiextensions.k8s.io" at the cluster scope', 'reason': 'Forbidden', 'details': {'group': 'apiextensions.k8s.io', 'kind': 'customresourcedefinitions'}, 'code': 403})

Find the cluster role that mysql-cluster-sa is bound to:

[root@k8s-master1 mysql-innodbcluster]# kubectl get rolebinding -n middleware -o wide | grep mysql-cluster-sa

mysql-cluster-sidecar-rb ClusterRole/mysql-sidecar 13m /mysql-cluster-sa

[root@k8s-master1 mysql-innodbcluster]# kubectl describe clusterrole mysql-sidecar

Name:         mysql-sidecar
Labels:       app.kubernetes.io/managed-by=Helm
Annotations:  meta.helm.sh/release-name: mysql-operator
              meta.helm.sh/release-namespace: middleware
PolicyRule:
  Resources                             Non-Resource URLs  Resource Names  Verbs
  ---------                             -----------------  --------------  -----
  mysqlbackups.mysql.oracle.com         []                 []              [create get list patch update watch delete]
  events                                []                 []              [create patch update]
  configmaps                            []                 []              [get create list watch patch]
  secrets                               []                 []              [get create list watch patch]
  services                              []                 []              [get create list]
  serviceaccounts                       []                 []              [get create]
  pods                                  []                 []              [get list watch patch]
  pods/status                           []                 []              [get patch update watch]
  mysqlbackups.mysql.oracle.com/status  []                 []              [get patch update watch]
  deployments.apps                      []                 []              [get patch]
  innodbclusters.mysql.oracle.com       []                 []              [get watch list]

The role has no rule for customresourcedefinitions. Add the permission by creating a separate ClusterRole and binding it to the service account:

[root@k8s-master1 mysql-innodbcluster]# vim crd-rbac.yaml

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: crd-reader
rules:
  - apiGroups: ["apiextensions.k8s.io"]
    resources: ["customresourcedefinitions"]
    verbs: ["get", "list", "watch"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: crd-reader-binding
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: crd-reader
subjects:
  - kind: ServiceAccount
    name: mysql-cluster-sa
    namespace: middleware

[root@k8s-master1 mysql-innodbcluster]# kubectl apply -f crd-rbac.yaml

clusterrole.rbac.authorization.k8s.io/crd-reader created

clusterrolebinding.rbac.authorization.k8s.io/crd-reader-binding created

5. Uninstall and delete the cluster

If the resources stay in a terminating state for a long time, the deletion can be forced.

[root@k8s-master1 mysql-innodbcluster]# helm -n middleware uninstall mysql-cluster

release "mysql-cluster" uninstalled

[root@k8s-master1 mysql-innodbcluster]# helm -n middleware uninstall mysql-operator

release "mysql-operator" uninstalled

[root@k8s-master1 mysql-innodbcluster]# kubectl get pod -n middleware

NAME READY STATUS RESTARTS AGE

mysql-backup-minio-84bb5f45dc-5jz5n 1/1 Running 0 3h42m

mysql-cluster-0 2/2 Terminating 0 109m

mysql-cluster-1 2/2 Terminating 0 128m

mysql-cluster-2 2/2 Terminating 0 115m

mysql-minio-backup-20250803-110844-7zgvz 0/1 Completed 0 11m

mysql-operator-5f8cd46f56-8nd2d 1/1 Terminating 0 3h19m

[root@k8s-master1 mysql-innodbcluster]# kubectl get pod -n middleware

NAME READY STATUS RESTARTS AGE

mysql-backup-minio-84bb5f45dc-5jz5n 1/1 Running 0 3h46m

mysql-minio-backup-20250803-110844-7zgvz 0/1 Completed 0 15m

# If the InnoDBCluster resource still exists, remove its finalizers with the following command

[root@k8s-master1 mysql-innodbcluster]# kubectl patch innodbcluster mysql-cluster -n middleware \
  -p '{"metadata":{"finalizers":[]}}' --type=merge

[root@k8s-master1 mysql-innodbcluster]# kubectl get innodbclusters -n middleware

No resources found in middleware namespace.

Delete the PVCs:

[root@k8s-master1 mysql-cluster]# kubectl -n middleware delete pvc -l mysql.oracle.com/cluster=mysql-cluster

persistentvolumeclaim "datadir-mysql-cluster-0" deleted

persistentvolumeclaim "datadir-mysql-cluster-1" deleted

persistentvolumeclaim "datadir-mysql-cluster-2" deleted
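In the transcript above the operator is uninstalled immediately after the cluster, which is a plausible reason the finalizers were left unprocessed and had to be patched away. The following is a sketch of an ordered teardown that waits for the InnoDBCluster object to disappear before removing the operator. It assumes the InnoDBCluster resource has the same name as its Helm release (as with mysql-cluster here); the function name and the default timeout are illustrative.

```bash
#!/bin/bash
# Sketch: uninstall the cluster first, wait for the InnoDBCluster object
# to disappear, and only then uninstall the operator.
# Assumption: the InnoDBCluster resource is named after its Helm release.

uninstall_mysql_stack() {
  local namespace="$1" cluster_release="$2" operator_release="$3"
  local timeout="${4:-300s}"
  helm -n "${namespace}" uninstall "${cluster_release}" || return 1
  # Blocks until the resource is gone or the timeout expires.
  if ! kubectl wait --for=delete "innodbcluster/${cluster_release}" \
      -n "${namespace}" --timeout="${timeout}"; then
    echo "[WARN] ${cluster_release} still present; see the finalizer patch above" >&2
    return 1
  fi
  helm -n "${namespace}" uninstall "${operator_release}"
}

# Example:
# uninstall_mysql_stack middleware mysql-cluster mysql-operator
```

If the wait times out, the operator is left installed so it can keep working on the deletion, and the finalizer patch remains available as a last resort.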